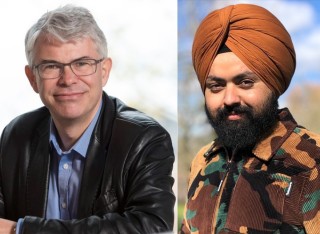

Professor Mark Plumbley FIET, FIEEE

Academic and research departments

Centre for Vision, Speech and Signal Processing (CVSSP), School of Computer Science and Electronic Engineering.About

Biography

Mark Plumbley is Professor of Signal Processing at the Centre for Vision, Speech and Signal Processing (CVSSP), School of Computer Science and Electronic Engineering, at the University of Surrey, in Guildford, UK.

After receiving his PhD degree in neural networks in 1991, he became a Lecturer at King’s College London, before moving to Queen Mary University of London in 2002. He subsequently became Professor and Director of the Centre for Digital Music, before joining the University of Surrey in 2015.

His current research concerns AI for Sound: using machine learning, AI and signal processing for analysis and recognition of sounds, particularly real-world everyday sounds. He led the first data challenge on Detection and Classification of Acoustic Scenes and Events (DCASE 2013).

He holds an EPSRC Fellowship in "AI for Sound", recently led EPSRC-funded projects on making sense of everyday sounds and on audio source separation, and two EU-funded research training networks in sparse representations and compressed sensing. He is a co-editor of the Springer book on "Computational Analysis of Sound Scenes and Events". He is a Fellow of the IET and IEEE.

Previous roles

Affiliations and memberships

News and events

2024

- 3-7 Sept 2024: See you at the 27th International Conference on Digital Audio Effects (DAFx24), University of Surrey, UK.

Paper submission deadline: 20 Mar 2024 - 14-19 Apr 2024: See you at the International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2024), Seoul, Korea. Accepted papers from the group:

- 20-27 Feb 2024: Xubo Liu will be at 38th AAAI Conference on Artificial Intelligence (AAAI-24), Vancouver, Canada, presenting our paper: Haohe Liu, Xubo Liu, Qiuqiang Kong, Wenwu Wang and Mark D. Plumbley. Learning Temporal Resolution in Spectrogram for Audio Classification. [arXiv]

- 31 Jan 2024: Attending the University of Surrey Annual Open Research Culture Event, University of Surrey, UK.

Haohe Liu presenting, shortlisted for an Open Research Award for his case study: Haohe Liu, Wenwu Wang, Mark D. Plumbley: Building the research community with open-source practice. - 16-17 Jan 2024: Attending Workshop on Interdisciplinary Perspectives on Soundscapes and Wellbeing, University of Surrey, UK.

(Day 1: Workshop & Webinar; Day 2: World Café & Sandpit Sessions)

2023

- 26 Oct 2023: Attending Speech and Audio in the Northeast (SANE 2023), NYU, Brooklyn, New York.

Presentations from the group: - 22-25 Oct 2023: Attending IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA 2023), New Paltz, New York, USA. Presentations from the group:

- 20-22 Sep 2023: Attending DCASE 2023 Workshop Workshop on Detection and Classification of Acoustic Scenes and Events, 20-22 September 2023, Tampere, Finland. [Twitter/X]

Papers from the group:- Thu 21 Sep, P1-6: Jinbo Hu, Yin Cao, Ming Wu, Feiran Yang, Ziying Yu, Wenwu Wang, Mark D. Plumbley, Jun Yang: META-SELD: Meta-Learning for Fast Adaptation to the New Environment in Sound Event Localization and Detection

- Thu 21 Sep, P2-20: Yi Yuan, Haohe Liu, Xubo Liu, Xiyuan Kang, Peipei Wu, Mark D. Plumbley, Wenwu Wang: Text-Driven Foley Sound Generation with Latent Diffusion Model

- Fri 22 Sep, P3-25: Peipei Wu, Jinzheng Zhao, Yaru Chen, Davide Berghi, Yi Yuan, Chenfei Zhu, Yin Cao, Yang Liu, Philip JB Jackson, Mark D. Plumbley, Wenwu Wang: PLDISET: Probabilistic Localization and Detection of Independent Sound Events with Transformers

- Also participating in Discussion Panel (16:00 Thu 21 Sep)

- 19 Jul 2023: Great to see everyone on the CVSSP Summer Walk! [Twitter/X]

- 9-13 Jul 2023: Presenting Distinguished Plenary Lecture at 29th International Congress on Sound and Vibration (ICSV29), Prague.

- 5-10 Jun 2023: See you at ICASSP 2023, Rhodes Island, Greece.

Presentations from the group:- Arshdeep Singh and Mark D. Plumbley: Efficient similarity-based passive filter pruning for compressing CNNs. [arXiv] [Open Access] [Twitter/X]

- Xubo Liu, Haohe Liu, Qiuqiang Kong, Xinhao Mei, Mark D. Plumbley and Wenwu Wang. Simple pooling front-ends for efficient audio classification. [arXiv] [Code]

- Haohe Liu, Zehua Chen, Yi Yuan, Xinhao Mei, Xubo Liu, Danilo Mandic, Wenwu Wang and Mark D Plumbley. Generating sound effects, music, speech, and beyond, with text [Show and Tell Demo, presenting AudioLDM]. [Twitter/X]

- 31 May 2023: Team placed first in DCASE Challenge Task 7 Track A "Foley Sound Synthesis" for submission: Yi Yuan, Haohe Liu, Xubo Liu, Xiyuan Kang, Mark D. Plumbley, Wenwu Wang: Latent Diffusion Model Based Foley Sound Generation System for DCASE Challenge 2023 Task 7 [Paper] [Code]

- 24 Apr 2023: Paper accepted for ICML 2023: Haohe Liu, Zehua Chen, Yi Yuan, Xinhao Mei, Xubo Liu, Danilo Mandic, Wenwu Wang, Mark D Plumbley. AudioLDM: Text-to-Audio Generation with Latent Diffusion Models [OpenReview]

- 19-21 Apr 2023: Presenting invited plenary talk at Urban Sound Symposum 2022, Barcelona, Spain. [Twitter/X]

- 20 Mar 2023: Open Research Case Study with Arshdeep Singh: Efficient Audio-based CNNs via Filter Pruning

- 14-21 Mar 2023: Presenting series of talks during visits to Redmond, WA & San Francisco Bay Area, CA, USA. Visits include:

- 14 Mar: Microsoft Research Lab, Redmond - with thanks to host: Ivan Tashev

- 15 Mar: Meta Reality Labs, Redmond - with thanks to host: Buye Xu

- 16 Mar: Adobe Research, San Francisco - with thanks to hosts: Gautham Mysore, Justin Salamon & Nick Bryan

- 16 Mar: Dolby Laboratories, San Francisco - with thanks to host: Mark Thomas

- 17 Mar: Stanford University, Center for Computer Research in Music and Acoustics (CCRMA) - with thanks to host: Malcolm Slaney

- 20 Mar: ByteDance, San Francisco - with thanks to host: Yuxuan Wang

- 20 Mar: Apple, Cupertino - with thanks to hosts: Miquel Espi Marques & Ahmed Tewfik

- 21 Mar: Amazon Lab126, Sunnyvale - with thanks to host: Trausti Kristjansson

- 29 Jan 2023: New paper on arXiv: Haohe Liu, Zehua Chen, Yi Yuan, Xinhao Mei, Xubo Liu, Danilo Mandic, Wenwu Wang, Mark D. Plumbley. AudioLDM: Text-to-Audio Generation with Latent Diffusion Models. arXiv:2301.12503 [cs.SD]. [Project Page] [Code on GitHub] [Hugging Face Space] [Twitter]

- 18 Jan 2023: Invited talk at LISTEN Lab Workshop, Télécom Paris.

- 12 Jan 2023: Invited talk at RISE Learning Machines Seminar series, RISE Research Institutes of Sweden. [YouTube] [Twitter]

- 9 Jan 2023: Welcome to Thomas Deacon, joining the AI for Sound project today!

2022

- 26 Dec 2022: Annamaria Mesaros awarded First Prize in the IEEE Finland Joint Chapter on Signal Processing and Circuits and Systems (SP/CAS) Paper Award 2022 for our paper: A. Mesaros, T. Heittola, T. Virtanen and M. D. Plumbley, Sound Event Detection: A tutorial, IEEE Signal Processing Magazine, vol. 38, no. 5, pp. 67-83, Sept. 2021 [arXiv] [Open Access] [Twitter/X]

- 12 Dec 2022: Congratulations to former PhD students Qiuqiang Kong and Turab Iqbal on being selected for the IEEE Signal Processing Young Author Best Paper Award, for the paper: Qiuqiang Kong*; Yin Cao; Turab Iqbal*; Yuxuan Wang; Wenwu Wang; Mark D. Plumbley: "PANNs: Large-Scale Pretrained Audio Neural Networks for Audio Pattern Recognition" IEEE/ACM Transactions on Audio, Speech, and Language Processing, 28, 2880-2894, 19 October 2020. DOI: 10.1109/TASLP.2020.3030497 [IEEE SPS Newsletter] [IEEE award page] [Paper] [arXiv] [Open Access] [Code]

- 1 Dec 2022: Virtual Keynote talk at the Amazon Audio Tech Summit, on "AI for Sound"

- 29 Nov 2022: Talk at the Centre for Digital Music at Queen Mary University of London, plus external PhD examiner.

- 21 Nov 2022: Welcome to Gabriel Bibbó, joining the AI for Sound project today!

- 3-4 Nov 2022: Attending (virtually) the DCASE 2022 Workshop on Detection and Classification of Acoustic Scenes and Events. Papers from the group:

- Arshdeep Singh, Mark D. Plumbley. Low-complexity CNNs for Acoustic Scene Classification. [arXiv]

- Yang Xiao, Xubo Liu, James King, Arshdeep Singh, Eng Siong Chng, Mark D. Plumbley, Wenwu Wang. Continual Learning For On-Device Environmental Sound Classification. [arXiv]

- Haohe Liu, Xubo Liu, Xinhao Mei, Qiuqiang Kong, Wenwu Wang, Mark D. Plumbley. Segment-level Metric Learning for Few-shot Bioacoustic Event Detection. [arXiv]

- Jinbo Hu, Yin Cao, Ming Wu, Qiuqiang Kong, Feiran Yang, Mark D. Plumbley, Jun Yang. Sound event localization and detection for real spatial sound scenes: event-independent network and data augmentation chains. [arXiv]

- 21-24 Aug 2022: Attending 51st International Congress and Exposition on Noise Control Engineering (Inter-noise 2022), Glasgow, Scotland [Full Programme]

- 22 Aug: Presenting our paper: Creating a new research community on detection and classification of acoustic scenes and events: Lessons from the first ten years of DCASE challenges and workshops (Mark D. Plumbley and Tuomas Virtanen)

Session: MON9.1/d - Advances in Environmental Noise (14:40-17:00)

- 22 Aug: Presenting our paper: Creating a new research community on detection and classification of acoustic scenes and events: Lessons from the first ten years of DCASE challenges and workshops (Mark D. Plumbley and Tuomas Virtanen)

- 17-23 Jul 2022: Attending (virtually) 39th International Conference on Machine Learning (ICML 2022).

- 13 Jul 2022: On CVSSP Summer Walk to Albury [Twitter]

- 11-12 Jul 2022: At two-day face-to-face workshop on "Designing AI for Home Wellbeing"

- 12 Jul: "Designing AI for Home Wellbeing" Seminar Day, supported by the Surrey Institute for People-Centred AI [LinkedIn]

- 11 Jul: "Designing AI for Home Wellbeing" World Cafe, supported by the University of Surrey Institute of Advanced Studies [LinkedIn]

- 30 Jun 2022: Dr Emily Corrigan-Kavanagh co-organizing in-person "Designing AI for Home Wellbeing" World Cafe at the Design Research Society conference DRS 2022 Bilbao (25 Jun - 3 Jul)

- 27 Jun - 1 Jul 2022: Interviewed by Tech Monitor (27 Jun) and BBC Tech Tent [0:17:20-0:22:00] (1 Jul) on virtual reality sound.

- 16 Jun 2022: Paper accepted for 32nd IEEE International Workshop on Machine Learning for Signal Processing (MLSP 2022), Xi'an, China, 22-25 Aug 2022:

- Meng Cui, Xubo Liu, Jinzheng Zhao, Jianyuan Sun, Guoping Lian, Tao Chen, Mark D. Plumbley, Daoliang Li, Wenwu Wang. Fish Feeding Intensity Assessment in Aquaculture: A New Audio Dataset AFFIA3K and A Deep Learning Algorithm

- 15 Jun 2022: Three papers accepted for INTERSPEECH 2022, Incheon, Korea, 18-22 September 2022:

- Xubo Liu, Haohe Liu, Qiuqiang Kong, Xinhao Mei, Jinzheng Zhao, Qiushi Huang, Mark D. Plumbley, Wenwu Wang: Separate What You Describe: Language-Queried Audio Source Separation [arXiv] [Code] [Demo]

- Xinhao Mei, Xubo Liu, Jianyuan Sun, Mark Plumbley and Wenwu Wang. On Metric Learning for Audio-Text Cross-Modal Retrieval [arXiv] [Code]

- Arshdeep Singh and Mark D. Plumbley. A Passive Similarity based CNN Filter Pruning for Efficient Acoustic Scene Classification [arXiv]

- 16 May 2022: Papers accepted for 30th European Signal Processing Conference (EUSIPCO), Belgrade, Serbia, 30 Aug - 2 Sep 2022:

- Xubo Liu, Xinhao Mei, Qiushi Huang, Jianyuan Sun, Jinzheng Zhao, Haohe Liu, Mark D. Plumbley, Volkan Kılıç, Wenwu Wang: Leveraging Pre-trained BERT for Audio Captioning [arXiv]

- Jianyuan Sun, Xubo Liu, Xinhao Mei, Jinzheng Zhao, Mark D. Plumbley, Volkan Kılıç, Wenwu Wang. Deep Neural Decision Forest for Acoustic Scene Classification [arXiv]

- 7-13 May 2022: At IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2022) virtual sessions

- 13 May: Bin Li presents our Journal of Selected Topics in Signal Processing article: Sparse Analysis Model Based Dictionary Learning for Signal Declipping (Bin Li, Lucas Rencker, Jing Dong, Yuhui Luo, Mark D. Plumbley and Wenwu Wang) [Presentation] [Paper] [Open Access] [Code]

- 11 May: Xinhao Mei presents our paper: Diverse Audio Captioning via Adversarial Training (Xinhao Mei, Xubo Liu, Jianyuan Sun, Mark Plumbley and Wenwu Wang) [Presentation] [Paper] [Open Access] [arXiv]

- 10 May: Zhao Ren presents our IEEE Transactions on Multimedia article: CAA-Net: Conditional Atrous CNNs With Attention for Explainable Device-Robust Acoustic Scene Classification (Zhao Ren, Qiuqiang Kong, Jing Han, Mark D. Plumbley and Bjorn W Schuller) [Presentation] [Paper] [Open Access] [arXiv] [Code]

- 8 May: Annamaria Mesaros presents our Signal Processing Magazine article: Sound Event Detection: A Tutorial (Annamaria Mesaros, Toni Heittola, Tuomas Virtanen and Mark D Plumbley) [Presentation] [Paper] [Open Access] [arXiv]

- 7 May: Jinbo Hu presents our challenge paper: A Track-Wise Ensemble Event Independent Network for Polyphonic Sound Event Localization and Detection (Jinbo Hu, Ming Wu, Feiran Yang, Jun Yang, Yin Cao, Mark Plumbley) [Presentation] [Paper] [Open Access] [arXiv] [Code]

- 5 Apr 2022: At UKAN Workshop on Soundscapes, including leading the afternoon in-person World Cafe, at Imperial College London. [Flyer (PDF)] [Twitter] [2] [3]

- 7 Mar 2022: At Virtual World Cafe on Exploring Sounds Sensing to Improve Workplace Wellbeing, organised by Dr Emily Corrigan-Kavanagh

2021

- 3 Dec 2021: Dr Emily Corrigan-Kavanagh presents our paper: Envisioning Sound Sensing Technologies for Enhancing Urban Living (Emily Corrigan-Kavanagh, Andres Fernandez, Mark D. Plumbley) at Environments By Design: Health, Wellbeing And Place, Virtual, 1-3 Dec 2021

- 15-19 Nov 2021: At the DCASE 2021 Workshop on Detection and Classification of Acoustic Scenes and Events, Online

- 19 Nov: Moderating the Town Hall discussion

[Twitter] [Webpage] - 18 Nov: Turab Iqbal presents our poster: ARCA23K: An Audio Dataset for Investigating Open-Set Label Noise (Turab Iqbal, Yin Cao, Andrew Bailey, Mark D. Plumbley, Wenwu Wang)

[Twitter] [Paper] [Poster] [Video] [Code] [Data] - 18 Nov: Xinhao Mei presents our poster: Audio Captioning Transformer (Xinhao Mei, Xubo Liu, Qiushi Huang, Mark D. Plumbley, Wenwu Wang)

[Twitter] [Paper] [Poster] [Video] - 17 Nov: Andres Fernandez presents our poster: Using UMAP to Inspect Audio Data for Unsupervised Anomaly Detection Under Domain-Shift Conditions (Andres Fernandez, Mark D. Plumbley)

[Twitter] [Paper] [Poster] [arXiv] [Video] [Code] [Data] [Webpage] - 17 Nov: Xubo Liu and Qiushi Huang present our poster: CL4AC: A Contrastive Loss for Audio Captioning (Xubo Liu, Qiushi Huang, Xinhao Mei, Tom Ko, H. Tang, Mark D. Plumbley, Wenwu Wang)

[Paper] [Poster] [Video] [Code] - 17 Nov: Xinhao Mei presents our poster: An Encoder-Decoder Based Audio Captioning System with Transfer and Reinforcement Learning (Xinhao Mei, Qiushi Huang, Xubo Liu, Gengyun Chen, Jingqian Wu, Yusong Wu, Jinzheng Zhao, Shengchen Li, Tom Ko, H. Tang, Xi Shao, Mark D. Plumbley, Wenwu Wang)

[Paper] [Poster] [Video] [Code]

- 19 Nov: Moderating the Town Hall discussion

- 12 Nov 2021: At "Envisioning Future Homes for Wellbeing" online event, as a "virtual world cafe". [News] [Graphic]

- 2 Nov 2021: At "Technologies for Home Wellbeing" in-person event, including "Research and Innovation in Technologies for Home Wellbeing" and "Future Technologies for Home Wellbeing" [Programme (PDF)] [News]

- 18-20 Oct 2021: At IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA 2021) (virtual)

- 15 Sep 2021: Francesco Renna presents our paper: Francesco Renna, Mark Plumbley and Miguel Coimbra: Source separation of the second heart sound via alternating optimization, Proc Computing in Cardiology - CinC 2021, Brno, Czech Republic [Abstract] [PDF Abstract] [Preprint] [Preprint]

- 23-27 Aug 2021: At 29th European Signal Processing Conference (EUSIPCO 2021) (virtual)

- 27 Aug: Andrew Bailey presents our paper: Andrew Bailey and Mark D. Plumbley. Gender bias in depression detection using audio features. [Paper]

- 25 Aug: Lam Pham presents our paper: Lam Pham, Chris Baume, Qiuqiang Kong, Tassadaq Hussain, Wenwu Wang, Mark Plumbley. An Audio-Based Deep Learning Framework For BBC Television Programme Classification. [Paper]

- 30 July 2021: Presenting invited talk on "Machine Learning for Sound Sensing" in the Workshop on Applications of Machine Learning at the Twenty Seventh National Conference on Communications (NCC-2021) Virtual Conference, organized by IIT Kanpur and IIT Roorkee, India, 27-30 July 2021 [Twitter]

- 6-11 June 2021: At the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2021), Toronto (Virtual), Canada

- 10 June: Yin Cao, Qiuqiang Kong and Turab Iqbal present our poster: An Improved Event-Independent Network for Polyphonic Sound Event Localization and Detection (Yin Cao, Turab Iqbal, Qiuqiang Kong, Fengyan An, Wenwu Wang, Mark D. Plumbley) [Twitter] [Paper] [arXiv] [Code]

- 8 June: Jingzhu Zhang presents our poster: Weighted magnitude-phase loss for speech dereverberation (Jingshu Zhang, Mark D. Plumbley, Wenwu Wang) [Twitter] [Paper] [Open Access]

- 19-21 April 2021: See you at the Urban Sound Symposium

- Accepted poster with Dr Emily Corrigan-Kavanagh: "Exploring Sound Sensing to Improve Quality of Life in Urban Living"

- 24 Mar 2021: Presenting Connected Places Catapult Breakfast Briefing: Enabling Thriving Urban Communities through Sound Sensing AI Technology, with Dr Emily Corrigan-Kavanagh [Twitter] [LinkedIn] [Video]

- 23-24 Mar 2021: See you at The Turing Presents: AI UK 2021

- Tue 23 Mar (Day 1) at 16:00 Presenting a Spotlight Talk on "AI for Sound"

- 19-22 Jan 2021: At EUSIPCO 2020: 28th European Signal Processing Conference, Virtal Amsterdam.

- Wed 20 Jan: Emad Grais presents our paper in session ASMSP-7: Speech and Audio Separation: Multi-Band Multi-Resolution Fully Convolutional Neural Networks for Singing Voice Separation (Emad M. Grais, Fei Zhao, Mark D. Plumbley) [Paper]

- Fri 22 Jan: Attending meeting of EURASIP Technical Area Committee on Acoustic, Speech and Music Signal Processing (ASMSP TAC)

2020

- 2 Dec 2020: Plenary lecture at iTWIST 2020: international Traveling Workshop on Interactions between low-complexity data models and Sensing Techniques, Virtual Nantes, France, December 2-4, 2020

- 12 Nov 2020: Invited talk with Dr Emily Corrigan-Kavanagh at #LboroAppliedAI seminar series on Applied AI, Univ of Loughborough (virtual)

- 2-4 Nov 2020: At Workshop on Detection and Classification of Acoustic Scenes and Events (DCASE 2020) Virtual Tokyo, Japan

- Yin Cao presents our paper: Event-independent network for polyphonic sound event localization and detection (Yin Cao, Turab Iqbal, Qiuqiang Kong, Yue Zhong, Wenwu Wang, Mark D. Plumbley) [Paper] [Video] [Code]

- Saeid Safavi presents our poster: Open-window: A sound event dataset for window state detection and recognition (Saeid Safavi, Turab Iqbal, Wenwu Wang, Philip Coleman, Mark D. Plumbley) [Paper] [Video] [Dataset] [Code]

- Our team wins Judges’ Award for DCASE 2020 Challenge Task 5 “Urban Sound Tagging with Spatiotemporal Context”!

Congratulations to Turab Iqbal, Yin Cao, and Wenwu Wang [News Item] [Twitter] - Our team wins Reproducible System Award for DCASE 2020 Challenge Task 3 “Sound Event Localization and Detection”!

Congratulations to Yin Cao, Turab Iqbal, Qiuqiang Kong, Zhong Yue, and Wenwu Wang [News Item] [Twitter] [Paper] [Code] - Joint 1st place team in DCASE 2020 Challenge Task 5 “Urban Sound Tagging with Spatiotemporal Context” with entry: Turab Iqbal, Yin Cao, Mark D. Plumbley and Wenwu Wang: Incorporating Auxiliary Data for Urban Sound Tagging [Paper] [Code]

- 14-16 Oct 2020: Attending BBC Sounds Amazing 2020

- 1 Oct 2020: New award of £2.2 million Multimodal Video Search by Examples (MVSE) [2][3], collaborative project including Hui Wang (Ulster), Mark Gales (Cambridge) and Josef Kittler, Miroslav Bober & Wenwu Wang (Surrey)

- 1 Oct 2020: Welcome to Marc Green, joining the AI for Sound project

- 24 Sep 2020: New award of £1.4 million UK Acoustics Network Plus (UKAN+) [News Item]

- 21 Sep 2020: Welcome to Andres Fernandez, joining the AI for Sound project

- 16 Sep 2020: Presentation at Huawei Future Device Technology Summit 2020

- 2 Sep 2020: Welcome to Dr Emily Corrigan-Kavanagh, joining the AI for Sound project

- 27 Aug 2020: Presentation at online meeting of the Association of Noise Consultants (ANC) [LinkedIn]

- 1 Jul 2020: Presentation to Samsung AI Centre Cambridge, partners of the EPSRC Fellowship in "AI for Sound".

- 17 Jun 2020: Recruiting for three researchers on AI for Sound project (deadline 17 July 2020):

- 5 Jun 2020: Starting new EPSRC Fellowship in "AI for Sound". Press Release: Fellowship to advance sound to new frontiers using AI

- 27 May 2020: Recruiting for Research Fellow on Advanced Machine Learning for Audio Tagging (deadline 27 June 2020)

- 4-8 May 2020: (Virtually) at 45th International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2020)

- [Postponed to 2021: 24 Mar 2020: Talk on "AI for Sound" at The Turing Presents: AI UK, London, UK.]

- 24 Jan 2020: Papers accepted for ICASSP 2020:

- Turab Iqbal, Yin Cao, Qiuqiang Kong, Mark D. Plumbley and Wenwu Wang. Learning with out-of-distribution data for audio classification.

- Qiuqiang Kong, Yuxuan Wang, Xuchen Song, Yin Cao, Wenwu Wang and Mark D. Plumbley. Source separation with weakly labelled data: An approach to computational auditory scene analysis. [arXiv:2002.02065]

- 15 Jan 2020: At PHEE 2020 Conference, London, UK

2019

- 22 Nov 2019: Zhao Ren presented our paper at 9th International Conference on Digital Public Health (DPH 2019), Marseille, France: Zhao Ren, Jing Han, Nicholas Cummins, Qiuqiang Kong, Mark D. Plumbley and Björn W. Schuller. Multi-instance learning for bipolar disorder diagnosis using weakly labelled speech data. [Paper] [Open Access]

- 1 Nov 2019: At UK Acoustics Network (UKAN) 2nd Anniversary Event, London, UK [Twitter]

- 25-26 Oct 2019: At DCASE2019 Workshop on Detection and Classification of Acoustic Scenes and Events, 25-26 October 2019, New York, USA [Twitter]

- Our team wins the Reproducible System Award for DCASE2019 Task 3!

Well done to Yin Cao, Turab Iqbal, Qiuqiang Kong, Miguel Blanco Galindo, Wenwu Wang.

[Paper and Code (reproducible!)] - Yin Cao presenting our poster: Polyphonic Sound Event Detection and Localization using a Two-Stage Strategy.

[Paper] [Code] [Twitter] - Francois Grondin presenting our paper with Iwona Sobieraj and James Glass: Sound event localization and detection using CRNN on pairs of microphones [Paper] [Twitter]

- Our team wins the Reproducible System Award for DCASE2019 Task 3!

- 24 Oct 2019: At Speech and Audio in the Northeast (SANE 2019), Columbia University, New York City, USA [Twitter: #SANE2019]

- Yin Cao and Saeid Safavi presented our demo on sound recognition, generalisation and visualisations [Twitter] [Slides] [YouTube]

- Turab Iqbal presented our poster: Learning with Out-of-Distribution Data for Audio Classification [Twitter]

- Yin Cao presented our poster: Cross-task learning for audio tagging, sound event detection and spatial localization: DCASE 2019 baseline systems [Twitter]

- 20-23 Oct 2019: At IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA 2019), New Paltz, New York, USA [Twitter: #WASPAA2019]

- 16 Oct 2019: Talk at Sound Sensing in Smart Cities 2 at Connected Places Catapult, London, on "AI for Sound: A Future Technology for Smart Cities"

- 30 Sep 2019: Opening Keynote at the 50th Annual Conference of the International Association of Sound and Audiovisual Archives (IASA 2019), Hilversum, The Netherlands [Photos]

- 5 Sep 2019: Emad Grais presented our paper at EUSIPCO 2019: Grais, Wierstorf, Ward, Mason & Plumbley. Referenceless performance evaluation of audio source separation using deep neural networks [Session: ThAP1: ASMSP PIII, Thursday, September 5 13:40 - 15:40, Poster Area 1] [PDF] [Open Access]

- 15 Aug 2019: Wenwu Wang presented our paper at IJCAI-19: Kong, Xu, Jackson, Wang, Plumbley. Single-Channel Signal Separation and Deconvolution with Generative Adversarial Networks [Session: ML|DL - Deep Learning 7 (L), Thu 15 Aug, 17:15] [Abstract] [Proceedings] [PDF] [arXiv:1906.07552] [Source Code]

- 23-25 Jul 2019: Co-organized AI Summer School at University of Surrey [Twitter: #AISurreySummerSchool] [Videos]

- 28 Jun 2019: Great result in DCASE2019 Task 3 "Sound Event Localization and Detection" for CVSSP Team (Yin Cao, Turab Iqbal, Qiuqiang Kong, Miguel Blanco Galindo, Wenwu Wang, Mark Plumbley). Top academic team and 2nd overall [Abstract] [Report] [Source Code]

- 26 Jun 2019: At Human Motion Analysis for Healthcare Applications Conference, IET London. [Twitter: #Human_Motion_Analysis]

- 25 Jun 2019: At Soundscape Workshop 2019, London [Twitter: #SoundscapeWorkshop2019]

- 20 Jun 2019: Talk at Connected Places Catapult, London, on "AI for Sound: A Future Technology for Smarter Living and Travelling" [Twitter (2) (3) (4)]

- See report by UKAuthority: Sound sensors ‘could support smart cities and assistive tech’

- 13 Jun 2019: Talk at the International Hearing Instruments Developer Forum (HADF 2019), Oldenburg, Germany on "Computational analysis of sound scenes and events"

- 3 Jun 2019: Paper accepted for EUSIPCO 2019: Emad M. Grais, Hagen Wierstorf, Dominic Ward, Russell Mason and Mark D. Plumbley. Referenceless performance evaluation of audio source separation using deep neural networks. [arXiv:1811.00454]

- 12-17 May 2019: At IEEE International Conference on Acoustics, Speech, and Signal Processing Signal Processing (ICASSP 2019), Brighton, UK

- Fri 17 May (pm): Qiuqiang Kong presented our poster: Kong, Xu, Iqbal, Cao, Wang & Plumbley: Acoustic Scene Generation with Conditional SampleRNN [Twitter] [Open Access] [Source Code] [Sound Samples]

- Fri 17 May (am): Christian Kroos presented our talk: Kroos, Bones, Cao, Harris, Jackson, Davies, Wang, Cox & Plumbley: Generalisation in Environmental Sound Classification: The 'Making Sense of Sounds' Data Set and Challenge [Open Access] [MSoS Challenge] [Dataset]

- Tue 14 May: Co-authoring three papers in session "Detection and Classification of Acoustic Scenes and Events II"

- Zhao Ren presented our talk: Ren, Kong, Han, Plumbley, Schuller: Attention-based Atrous Convolutional Neural Networks: Visualisation and Understanding Perspectives of Acoustic Scenes[Twitter] [Open Access]

- Yuanbo Hou presented our talk: Hou, Kong, Li, Plumbley: Sound Event Detection with Sequentially Labelled Data Based on Connectionist Temporal Classification and Unsupervised Clustering [Twitter] [Open Access]

- Zuzanna Podwinska presented our talk: Podwinska, Sobieraj, Fazenda, Davies, Plumbley: Acoustic Event Detection from Weakly Labeled Data Using Auditory Salience [Twitter] [Open Access]

- 13 May: Many congratulations to Zhijin Qin, presented with IEEE SPS Young Author Best Paper Award for: Qin, Gao, Plumbley, Parini. Wideband spectrum sensing on real-time signals at sub-Nyquist sampling rates in single and cooperative multiple nodes. IEEE Transactions on Signal Processing. 64: 3106-3117, 2016 [Twitter]

- 12-13 May: Tutorials Co-chair

- 10 May 2019: Paper accepted for IJCAI-19: Qiuqiang Kong, Yong Xu, Wenwu Wang, Philip J.B. Jackson and Mark D. Plumbley. Single-Channel Signal Separation and Deconvolution with Generative Adversarial Networks. [Abstract] [arXiv:1906.07552] [Source Code] (Acceptance rate: 18%)

- 18 Apr 2019: Now availble Open Access: Chungeun Kim, Emad M. Grais, Russell Mason and Mark D. Plumbley. Perception of phase changes in the context of musical audio source separation. AES 145th Convention, New York, 17-20 October 2018. [Open Access]

- 16 Apr 2019: Cross-task baseline code for all DCASE 2019 tasks released. Report: Qiuqiang Kong, Yin Cao, Turab Iqbal, Yong Xu, Wenwu Wang and Mark D. Plumbley. Cross-task learning for audio tagging, sound event detection and spatial localization: DCASE 2019 baseline systems [arXiv:1904.03476]

Source Code: - 4 Apr 2019: Presenting work from our sound recognition projects at the CVSSP 30th Anniversary event [Twitter (2)]

- 13 Mar 2019: Co-organizing kick-off meeting of AI@Surrey, bringing together AI related research across the University of Surrey [Twitter (2)]

- 20 Feb 2019: Paper published: Qiuqiang Kong, Yong Xu, Iwona Sobieraj, Wenwu Wang and Mark D. Plumbley. Sound event detection and time-frequency segmentation from weakly labelled data. IEEE/ACM Transactions on Audio, Speech and Language Processing 27(4): 777-787, April 2019. DOI:10.1109/TASLP.2019.2895254 [Open Access] [Source Code]

- 31 Jan 2019: AudioCommons project completed. See also: Deliverables & Papers; Tools & Resources

- 2 Jan 2019: Paper published: Estefanía Cano, Derry FitzGerald, Antoine Liutkus, Mark D. Plumbley and Fabian-Robert Stöter. Musical Source Separation: An Introduction. IEEE Signal Processing Magazine 36(1):31-40, January 2019. DOI:10.1109/MSP.2018.2874719 [Open Access]

2018

- 18 Dec 2018: Tropical-themed CVSSP Christmas Party! [Twitter]

- 21-22 Nov 2018: Hosting the 5th General Meeting of the AudioCommons project at the University of Surrey [Twitter]

- 19-20 Nov 2018: Co-chair of DCASE 2018 Workshop on Detection and Classification of Acoustic Scenes and Events, Surrey, UK [Twitter: #DCASE2018] [Proceedings]

- 20 Nov:

- Chairing the final Panel Session [Twitter]

- Qiuqiang Kong presenting our poster: Kong, Iqbal, Xu, Wang, Plumbley: DCASE 2018 Challenge Surrey cross-task convolutional neural network baseline [Twitter]

Code: - 19 Nov:

- Turab Iqbal and Qiuqiang Kong presenting our poster: Iqbal, Kong, Plumbley, Wang: General-purpose audio tagging from noisy labels using convolutional neural networks

- Christian Kroos presenting our poster: Kroos, Bones, Cao, Harris, Jackson, Davies, Wang, Cox, Plumbley: The Making Sense of Sounds Challenge

- Zhao Ren presenting our talk: Ren, Kong, Qian, Plumbley, Schuller: Attention-based convolutional neural networks for acoustic scene classification [Twitter]

- Opening the workshop [Twitter]

- 18 Sep 2018: CVSSP team (with Turab Iqbal, Qiuqiang Kong, Wenwu Wang) ranked 3rd out of 558 systems on Kaggle for DCASE 2018 Challenge Task 2: Freesound General-Purpose Audio Tagging[News item]

- Report: Turab Iqbal, Qiuqiang Kong, Mark D. Plumbley and Wenwu Wang. Stacked convolutional neural networks for general-purpose audio tagging. Technical Report, Detection and Classification of Acoustic Scenes and Events 2018 (DCASE 2018) Challenge, September 2018 [Software Code]

- 8 Aug 2018: Making Sense of Sounds Challenge announced

- 26 Jul 2018: Shengchen Li presents our paper at the 37th Chinese Control Conference: Li, Dixon & Plumbley. A demonstration of hierarchical structure usage in expressive timing analysis by model selection tests. In: Proceedings of the 37th Chinese Control Conference, CCC2018, Wuhan, China, 25-27 July 2018, pp 3190-3195. DOI:10.23919/ChiCC.2018.8483169 [Open Access]

- 6 Jul 2018: Co-Chair of Audio Day 2018, University of Surrey, Guildford, UK [Twitter: #SurreyAudioDay]

- Hosting the closing Panel Discussion [Twitter]

- From the AudioCommons project:

- I present an overview of our work on the Making Sense of Sounds project [Twitter (2)]

- From the Musical Audio Repurposing using Source Separation project:

- 2-5 Jul 2018: Co-Chair of LVA/ICA 2018: 14th International Conference on Latent Variable Analysis and Signal Separation, University of Surrey, Guildford, UK [Twitter: #LVAICA2018]

- See also: Dan Stowell: Notes from LVA-ICA conference 2018

- 5 Jul

- Congratulations to Lucas Rencker for a "Best Student Paper Award"!

- Lucas Rencker presents our talk: Rencker, Bach, Wang & Plumbley. Consistent dictionary learning for signal declipping [Twitter] [Open Access]

- Alfredo Zermini presents our poster: Zermini, Kong, Xu, Plumbley & Wang. Improving Reverberant Speech Separation with Binaural Cues Using Temporal Context and Convolutional Neural Networks [Twitter] [Open Access]

- 4 Jul

- Cian O’Brien presenting our late-breaking poster: O’Brien & Plumbley: Latent Mixture Models for Automatic Music Transcription

- Qiuqiang Kong presenting our SiSEC poster: Ward, Kong & Plumbley. Source Separation with Long Time Dependency Gated Recurrent Units Neural Networks [Description] [Twitter]

- 3 Jul

- Emad M. Grais presenting our poster: Grais, Wierstorf, Ward & Plumbley. Multi-Resolution Fully Convolutional Neural Networks for Monaural Audio Source Separation [Twitter] [Open Access]

- Iwona Sobieraj presenting our late-breaking poster: Sobieraj, Rencker & Plumbley. Orthogonality-Regularized Masked NMF with KL-Divergence for Learning on Weakly Labeled Audio Data[Twitter]

- Opening the conference [Twitter]

- 5 Jun 2018: At introductory meeting of Communication and Room Acoustics SIG of UK Acoustics Network

- 23-26 May 2018: Group members at AES Milan 2018: 144th International Pro Audio Convention.

- Fri 25 May: Emad Grais presented our poster Grais & Plumbley: Combining Fully Convolutional and Recurrent Neural Networks for Single Channel Audio Source Separation [Twitter] [Details] [Poster (pdf) ]

- Thu 24 May: PhD student Qiuqiang Kong (Panellist) at Workshop W11: Deep Learning for Audio Applications [Twitter]

- Wed 23 May: Workshop (panel discussion) on Audio Repurposing Using Source Separation organized by our EPSRC project Musical Audio Repurposing using Source Separation, Chaired by Phil Coleman with postdoc Chungeun (Ryan) Kim (Panellist) [Twitter] [Blog from Phil Coleman]

- 18 May 2018: Visit to Audio Analytic at end of successful stay by PhD student Iwona Sobieraj.

- 18 May 2018: Papers accepted for EUSIPCO 2018:

- Emad M. Grais, Dominic Ward and Mark D. Plumbley. Raw Multi-Channel Audio Source Separation using Multi-Resolution Convolutional Auto-Encoders.

- Cian O’Brien and Mark D. Plumbley. A Hierarchical Latent Mixture Model for Polyphonic Music Analysis.

- 3 May 2018: Congratulations to Prof Trevor Cox, collaborator on the EPSRC-funded Making Sense of Sounds project, on new book Now You're Talking: Human Conversation from the Neanderthals to Artificial Intelligence.

- 30 Apr 2018: Congratulations to Dr Chris Baume on official award of PhD, for his thesis Semantic Audio Tools for Radio Production,

funded by BBC R&D as part of the BBC Audio Research Partnership. - 29 Apr 2018: Now Published (Open Access): Chris Baume, Mark D. Plumbley, David Frohlich, Janko Ćalić. PaperClip: A digital pen interface for semantic speech editing in radio production. Journal of the Audio Engineering Society 66(4):241-252, April 2018. DOI:10.17743/jaes.2018.0006 [Full text also at: http://epubs.surrey.ac.uk/845786/]

- 16-20 Apr 2018: At IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2018), Calgary, Alberta, Canada. [Twitter: #ICASSP2018]

- Iwona Sobieraj presented our poster Sobieraj, Rencker & Plumbley: Orthogonality-regularized masked NMF for learning on weakly labeled audio data [Tue 17 April, 13:30 - 15:30, Poster Area E] [Twitter]

- Qiuqiang Kong & Yong Xu presented two posters: (a) Kong, Xu, Wang & Plumbley: Audio Set classification with attention model: A probabilistic perspective and (b) Kong, Xu, Wang & Plumbley: A joint separation-classification model for sound event detection of weakly labelled data [Wed 18 Apr, 08:30 - 10:30, Poster Area C] [Twitter]

- Wenwu Wang & Philip Jackson presented our poster Huang, Jackson, Plumbley & Wang: Synthesis of images by two-stage generative adversarial networks [Wed 18 Apr, 13:30 - 15:30, Poster Area G] [Twitter]

- Yong Xu presented talk Xu, Kong, Wang & Plumbley: Large-scale weakly supervised audio classification using gated convolutional neural network [Thu 19 Apr, 13:30 - 13:50] [Twitter]

- Emad Grais presented our poster Ward, Wierstorf, Mason, Grais & Plumbley. BSS Eval or PEASS? Predicting the perception of singing-voice separation [Thu 19 April, 16:00 - 18:00, Poster Area D] [Twitter] [Details] [Study info] [Code]

- Cian O'Brien presented our poster O'Brien & Plumbley: Inexact proximal operators for lp-quasinorm minimization [Fri 20 Apr, 16:00 - 18:00, Poster Area G] [Twitter]

- Also: Springer stand with our new book: Tuomas Virtanen, Mark D. Plumbley &Dan Ellis Computational Analysis of Sound Scenes and Events [Twitter]

- 1 Apr 2018: Now Published (Open Access): Chris Baume, Mark D. Plumbley, Janko Ćalić and David Frohlich. A contextual study of semantic speech editing in radio production. International Journal of Human-Computer Studies 115:67-80, July 2018. DOI:10.1016/j.ijhcs.2018.03.006 [Full text also at: http://epubs.surrey.ac.uk/846079/]

- 1 Apr 2018: Paper accepted for 37th Chinese Control Conference, CCC2018: Shengchen Li, Simon Dixon and Mark D. Plumbley. A demonstration of hierarchical structure usage in expressive timing analysis by model selection tests.

- 23 Mar 2018: At SpaRTaN-MacSeNet Workshop on Sparse Representations and Compressed Sensing in Paris, organized by our SpaRTaN and MacSeNet Initial/Innovative Training Networks. [Twitter: #SpartanMacsenet]

- 22 Mar 2018: At SpaRTaN-MacSeNet Training Workshop, Paris [Twitter]

- 19 Mar 2018: Papers accepted for LVA/ICA 2018:

- Emad M. Grais, Hagen Wierstorf, Dominic Ward and Mark D. Plumbley. Multi-resolution fully convolutional neural networks for monaural audio source separation.

- Lucas Rencker, Francis Bach, Wenwu Wang and Mark D. Plumbley. Consistent dictionary learning for signal declipping.

- Alfredo Zermini, Qiuqiang Kong, Yong Xu, Mark D. Plumbley and Wenwu Wang. Improving reverberant speech separation with binaural cues using temporal context and convolutional neural networks.

- 14 Mar 2018: At launch of the Digital Catapult's Machine Intelligence Garage, London [Twitter: #MachineIntelligenceGarage]

- 6 Mar 2018: Welcome to Saeid Safavi, joining the EU H2020 project Audio Commons.

- 5 Mar 2018: Welcome to Ryan Kim, joining the EPSRC-funded project Musical Audio Repurposing using Source Separation

- 23 Feb 2018: Paper accepted for HCI International 2018: Tijs Duel, David M. Frohlich, Christian Kroos, Yong Xu, Philip J. B. Jackson and Mark D. Plumbley. Supporting audiography: Design of a system for sentimental sound recording, classification and playback.

- 22 Feb 2018: At 4th General Meeting of the AudioCommons project in Barcelona [Twitter (2)]

- 14 Feb 2018: Paper accepted for AES Milan 2018: Emad M. Grais and Mark D. Plumbley. Combining fully convolutional and recurrent neural networks for single channel audio source separation.

- 12 Feb 2018: Welcome to Manal Helal, joining the EPSRC-funded project Musical Audio Repurposing using Source Separation

- 29 Jan 2018: Papers accepted for ICASSP 2018:

- Qiang Huang, Philip Jackson, Mark D. Plumbley and Wenwu Wang. Synthesis of images by two-stage generative adversarial networks.

- Qiuqiang Kong, Yong Xu, Wenwu Wang and Mark D. Plumbley. A joint separation-classification model for sound event detection of weakly labelled data.

- Qiuqiang Kong, Yong Xu, Wenwu Wang and Mark D. Plumbley. Audio Set classification with attention model: A probabilistic perspective.

- Cian O'Brien and Mark D. Plumbley. Inexact proximal operators for lp-quasinorm minimization.

- Iwona Sobieraj, Lucas Rencker and Mark D. Plumbley. Orthogonality-regularized masked NMF for learning on weakly labeled audio data.

- Dominic Ward, Hagen Wierstorf, Russell D. Mason, Emad M. Grais and Mark D. Plumbley. BSS Eval or PEASS? Predicting the perception of singing-voice separation.

- Yong Xu, Qiuqiang Kong, Wenwu Wang and Mark D. Plumbley. Large-scale weakly supervised audio classification using gated convolutional neural network.

2017

- 4 Dec 2017: Iwona Sobieraj presents poster at Women in Machine Learning Workshop (WiML 2017): Masked Non-negative Matrix Factorization for Bird Detection Using Weakly Labelled Data

- 27 Nov 2017: Introducing the Communication Acoustics SIG at the Launch of UK Acoustics Network [Event] [Photos] [Twitter: #UKAcousticsLaunch]

- 16-17 Nov 2017: At Workshop on Detection and Classification of Acoustic Scenes and Events (DCASE 2017), Munch, Germany. [Twitter: #DCASE2017]

- Christian Kroos presented our paper: Kroos & Plumbley: Neuroevolution for Sound Event Detection in Real Life Audio: A Pilot Study [Twitter (2) (3) (4)] [Slides] [Video]

- Yong Xu and Qiuqiang Kong presented our technical report poster: Surrey-CVSSP System for DCASE2017 Challenge Task4 [Twitter]

- 15 Nov 2017: At Meeting of RCUK Digital Economy Programme Advisory Board (DE PAB), Swindon.

- 14 Nov 2017: Emad Grais at GlobalSIP 2017 presenting our poster: Grais & Plumbley: Single Channel Audio Source Separation using Convolutional Denoising Autoencoders.

- 1 Nov 2017: Chairing Advisory Board of the Software Sustainability Institute, Southampton.

- 25 Oct 2017: At Dutch Design Week 2017, Eindhoven, The Netherlands.

- 23-27 Oct 2017: Presentations from group at ISMIR 2017, Suzhou, China

- 27 Oct: Qiuqiang Kong presents our Late-Breaking poster "Music source separation using weakly labelled data" at [Extended abstract]

- 25 Oct: Shengchen Li presents our poster "Clustering expressive timing with regressed polynomial coefficients demonstrated by a model selection test" [Paper]

- 20 Oct 2017: Hagen Wierstorf presents our talk "Perceptual Evaluation of Source Separation for Remixing Music" at AES New York 2017 [Abstract] [Twitter] [Paper] [Open Access]

- 19 Oct 2017: At Speech and Audio in the North East (SANE 2017), New York, NY, USA [Twitter: #SANE2017]

- Presented our poster: Signal Processing, Psychoacoustic Engineering and Digital Worlds: Interdisciplinary Audio Research at the University of Surrey [Twitter]

- 16-18 Oct 2017: At WASPAA 2017, New Paltz, NY, USA

- 18 Oct: Keynote talk: Making Sense of Sounds: Machine Listening in the Real World [Twitter]

- 6 Oct 2017: Delighted that Department of Computer Science receives Athena SWAN Bronze award, glad to have helped the April 2017 submission.

- 4-7 Sep 2017: Handing back to Helen Treharne as returning Head of Department of Computer Science.

- 28 Aug - 2 Sep 2017: Presentations from group at EUSIPCO 2017:

- Qiuqiang Kong, Yong Xu and Mark D. Plumbley. Joint detection and classification convolutional neural network on weakly labelled bird audio detection.

- Cian O’Brien and Mark D. Plumbley. Automatic music transcription using low rank non-negative matrix decomposition.

- Lucas Rencker, Wenwu Wang and Mark D. Plumbley. Multivariate Iterative Hard Thresholding for sparse decomposition with flexible sparsity patterns.

- Iwona Sobieraj, Qiuqiang Kong and Mark D. Plumbley. Masked non-negative matrix factorization for bird detection using weakly labeled data.

- 22 Jun 2017: At 2017 AES International Conference on Semantic Audio, Erlangen, Germany [Twitter: #aessa17]

- See also: Report from Brecht De Man

- Presenting Opening Keynote: "Audio Event Detection and Scene Recognition" [Twitter]

- 8 Jun 2017: At Cheltenham Science Festival for Panel Discussion on Is Your Tech Listening To You? with Jason Nurse and Rory Cellan-Jones. Featured with interview in BBC Tech Tent 9 Jun 2017

News

In the media

ResearchResearch interests

My research concerns AI for Sound: using machine learning and signal processing for analysis and recognition of sounds. My focus is on detection, classification and separation of acoustic scenes and events, particularly real-world sounds, using methods such as deep learning, sparse representations and probabilistic models.

I have published over 400 papers in journals, conferences and books, including over 70 journal papers and the recent Springer co-edited book on Computational Analysis of Sound Scenes and Events.

Much of my research is funded by grants from EPSRC and EU, Innovate UK and other sources. I currently hold an EPSRC Fellowship on "AI for Sound", and recently led EPSRC projects Making Sense of Sounds and Musical Audio Repurposing using Source Separation, and two EU research training networks, SpaRTaN and MacSeNet. My total grant funding is around £54M, including £20M as Principal Investigator, Coordinator or Lead Applicant.

I was co-Chair of the DCASE 2018 Workshop on Detection and Classification of Acoustic Scenes and Events, co-Chair of the 14th International Conference on Latent Variable Analysis and Signal Separation (LVA/ICA 2018) and co-Chair of the Signal Processing with Adaptive Sparse Structured Representations (SPARS 2017) workshop.

Research projects

EPSRC grant EP/V007866/1, £1.4M total, £40k to Surrey, Apr 2021 – Mar 2025. Joint with Horoshenkov (Sheffield), Bristow (Surrey), plus 10 others at Imperial, Loughborough, Salford, LSBU, Manchester, Bath, Reading, Southampton, Nottingham Trent.

EPSRC grant EP/V002856/1, £2.2M total, £863k to Surrey, Apr 2021 – Sep 2024. Joint with with Kittler, Wang & Bober (Surrey); Wang (QUB), Bond & Mulvenna (Ulster); Gales (Cambridge), BBC R&D.

EPSRC Fellowship EP/T019751/1, £2.1M, May 2020 – Apr 2025.

EPSRC grant EP/N014111/1, £1.28M total, £875k to Surrey, Mar 2016 – Mar 2019. Joint with University of Salford.

EPSRC grant EP/L027119/1 (Queen Mary) & EP/L027119/2 (Surrey), £887,607, Nov 2014 – Oct 2018.

EPSRC grant EP/P022529/1, £1.58M, Aug 2017 – Jul 2022.

EU H2020 Research and Innovation Grant 688382 total €2.98M (~£2.2M), £470k to Surrey, Feb 2016 - Jan 2019.

EU Marie Skłodowska-Curie Action (MSCA) Innovative Training Network H2020-MSCA-ITN-2014 project 642685, Total €3.8M (£3.0M), £560k to Surrey, 2015-2018.

EU Marie Curie Actions Initial Training Networks, FP7-PEOPLE-2013-ITN project 607290. Total €2.8M (£2.1M), €840k (£630k) to Surrey, 2014-2018.

Research interests

My research concerns AI for Sound: using machine learning and signal processing for analysis and recognition of sounds. My focus is on detection, classification and separation of acoustic scenes and events, particularly real-world sounds, using methods such as deep learning, sparse representations and probabilistic models.

I have published over 400 papers in journals, conferences and books, including over 70 journal papers and the recent Springer co-edited book on Computational Analysis of Sound Scenes and Events.

Much of my research is funded by grants from EPSRC and EU, Innovate UK and other sources. I currently hold an EPSRC Fellowship on "AI for Sound", and recently led EPSRC projects Making Sense of Sounds and Musical Audio Repurposing using Source Separation, and two EU research training networks, SpaRTaN and MacSeNet. My total grant funding is around £54M, including £20M as Principal Investigator, Coordinator or Lead Applicant.

I was co-Chair of the DCASE 2018 Workshop on Detection and Classification of Acoustic Scenes and Events, co-Chair of the 14th International Conference on Latent Variable Analysis and Signal Separation (LVA/ICA 2018) and co-Chair of the Signal Processing with Adaptive Sparse Structured Representations (SPARS 2017) workshop.

Research projects

EPSRC grant EP/V007866/1, £1.4M total, £40k to Surrey, Apr 2021 – Mar 2025. Joint with Horoshenkov (Sheffield), Bristow (Surrey), plus 10 others at Imperial, Loughborough, Salford, LSBU, Manchester, Bath, Reading, Southampton, Nottingham Trent.

EPSRC grant EP/V002856/1, £2.2M total, £863k to Surrey, Apr 2021 – Sep 2024. Joint with with Kittler, Wang & Bober (Surrey); Wang (QUB), Bond & Mulvenna (Ulster); Gales (Cambridge), BBC R&D.

EPSRC Fellowship EP/T019751/1, £2.1M, May 2020 – Apr 2025.

EPSRC grant EP/N014111/1, £1.28M total, £875k to Surrey, Mar 2016 – Mar 2019. Joint with University of Salford.

EPSRC grant EP/L027119/1 (Queen Mary) & EP/L027119/2 (Surrey), £887,607, Nov 2014 – Oct 2018.

EPSRC grant EP/P022529/1, £1.58M, Aug 2017 – Jul 2022.

EU H2020 Research and Innovation Grant 688382 total €2.98M (~£2.2M), £470k to Surrey, Feb 2016 - Jan 2019.

EU Marie Skłodowska-Curie Action (MSCA) Innovative Training Network H2020-MSCA-ITN-2014 project 642685, Total €3.8M (£3.0M), £560k to Surrey, 2015-2018.

EU Marie Curie Actions Initial Training Networks, FP7-PEOPLE-2013-ITN project 607290. Total €2.8M (£2.1M), €840k (£630k) to Surrey, 2014-2018.

Supervision

Postgraduate research supervision

I welcome applications from excellent students who wish to study for a PhD in my areas of research interest. Some example potential PhD projects are given below. I am also happy to discuss your own ideas for a project that you think may be of interest.

For more information on undertaking PhD study see: Centre for Vision, Speech and Signal Processing: PhD Study. For information on how to apply, see: Vision, Speech and Signal Processing PhD.

Example potential PhD projects

Deep learning for audio models and representations

The aim of this project is to investigate new machine learning methods for discovering good representations from audio sensor data. Deep learning has demonstrated very good performance on many machine learning problems, including audio event detection and acoustic scene classification. However, the theory of the deep learning process is not well understood, and these models typically require large amounts of training data. Recent advances based on information theory, such as the Information Bottleneck method, and model compression offer a promising direction for learning deep learning models and representations. The project will pay particular attention to methods for learning without labels, such as unsupervised and self-supervised learning. Project outcomes are expected to include new methods for constructing and learning efficient models and representations of audio data, making best use of available training data and labels, where available.

Privacy-preserving machine learning for sound sensing

The aim of this project is to build methods for machine learning for sound sensors, while preserving privacy of people or activities that are part of the sensed sound environment. For sound monitoring, we may wish to deploy sound sensors in public spaces, homes, or on personal devices. If the traditional approach of a large central data store were used for machine learning on deployed sensors, if may be difficult to achieve privacy of individuals whose sounds were picked up by sensors. Recently methods have been proposed that attempt to preserve privacy. For example, federated learning allows training to take place on distributed client devices, without the data itself being transferred to a central server, while differential privacy allows aggregate information about a dataset to be disclosed without disclosing information on individual records. This project will use these and related methods to investigate and develop new methods to allow sound sensors to be updated based on sounds captured by deployed sensors, while avoiding distribution of private or sensitive information.

Fair machine learning of sound data

The aim of this project is to investigate potential biases in sound-related datasets and challenges, and develop ways to overcome such biases to ensure fair machine learning approaches for sound data. When machine learning is used to tackle real-world problems, we should ensure that these models do not incorporate bias. Biases in datasets may not only result in skewed classification performance, such as over-reporting of performance, but can also result in subjects with protected characteristics, such as race or gender, being unfairly penalised. Biases can occur due to many factors, such as imbalanced data collection, correlations of factors with protected characteristics, or the machine learning models themselves. Fair Machine Learning (FML) is an area of research that explores the idea of fair, unbiased classification, in an attempt to work towards a fairer society through the use of machine learning. This project will investigate potential biases in databases such as DCASE challenge databases, and investigate methodologies and tools to document, measure and counteract biases. We will also consider how to make such methods understandable, including investigating metrics to evaluate the transparency of different approaches, and explore interpretability frameworks for deep learning models used for audio classification, and how to improve these.

Active Learning for sounds

We will investigate methods for active learning for sound classification, where users are queried interactively for labels which are used to update labels and class boundaries. As well as reducing the burden of labelling compared to "brute force" labelling from scratch, active learning offer the potential for individual users to create personalized sets of classes which are more useful to their intended application. Active learning can also help preserve user privacy, using models created locally based on the user data, without transmitting this data outside of the device. Initial investigation may start from simulated semi-supervised active learning, where datasets are used which are fully labelled but where the labels are queried one at a time. Developed methods will be tested on real subjects, which may include users recruited via crowdsourcing approaches. We will also investigate combinations of active learning with methods designed to work with small numbers of labelled data, such as few-shot learning and self-supervised learning, to develop efficient methods that can learn sound classes from a small number of user queries.

Measurement of soundscapes and noise

The aim of this PhD project is to use sound recognition to develop new estimates of sound impacts on people, to enable improved measurement and design of soundscapes. Exposure to noise can have significant physical and mental health implications: The annual UK cost of noise impacts is 7 billion–10 billion GBP. Most currently noise measurements and noise policies rely on simple loudness measures (Sound Pressure Level, measured in e.g. dBA), which do not fully reflect the real impact on individual people. Automatically recognizing different sound sources, and creating new measures that reflect the impact of these sounds on different people, could help us to improve people’s experience of our sonic environment. In this project we will investigate and propose predictive soundscape models based on sound source recognition. The project may start from baseline sound recognition models, mapping these onto a valence-arousal affect (emotion) space, and would include soundwalks and laboratory studies to inform the development of new models, and to validate models on unseen data and surveys on indoor and/or outdoor settings. Methods produced by this project may contribute to future work on soundscape standards, and how these are used in future noise and soundscape policies to improve wellbeing of citizens.

Publications

Audio captioning aims at using language to describe the content of an audio clip. Existing audio captioning systems are generally based on an encoder-decoder architecture, in which acoustic information is extracted by an audio encoder and then a language decoder is used to generate the captions. Training an audio captioning system often encounters the problem of data scarcity. Transferring knowledge from pre-trained audio models such as Pre-trained Audio Neural Networks (PANNs) have recently emerged as a useful method to mitigate this issue. However, there is less attention on exploiting pre-trained language models for the decoder, compared with the encoder. BERT is a pre-trained language model that has been extensively used in natural language processing tasks. Nevertheless, the potential of using BERT as the language decoder for audio captioning has not been investigated. In this study, we demonstrate the efficacy of the pre-trained BERT model for audio captioning. Specifically, we apply PANNs as the encoder and initialize the decoder from the publicly available pre-trained BERT models. We conduct an empirical study on the use of these BERT models for the decoder in the audio captioning model. Our models achieve competitive results with the existing audio captioning methods on the AudioCaps dataset.

—Acoustic scene classification (ASC) aims to classify an audio clip based on the characteristic of the recording environment. In this regard, deep learning based approaches have emerged as a useful tool for ASC problems. Conventional approaches to improving the classification accuracy include integrating auxiliary methods such as attention mechanism, pre-trained models and ensemble multiple sub-networks. However, due to the complexity of audio clips captured from different environments, it is difficult to distinguish their categories without using any auxiliary methods for existing deep learning models using only a single classifier. In this paper, we propose a novel approach for ASC using deep neural decision forest (DNDF). DNDF combines a fixed number of convolutional layers and a decision forest as the final classifier. The decision forest consists of a fixed number of decision tree classifiers, which have been shown to offer better classification performance than a single classifier in some datasets. In particular, the decision forest differs substantially from traditional random forests as it is stochastic, differentiable, and capable of using the back-propagation to update and learn feature representations in neural network. Experimental results on the DCASE2019 and ESC-50 datasets demonstrate that our proposed DNDF method improves the ASC performance in terms of classification accuracy and shows competitive performance as compared with state-of-the-art baselines.

Polyphonic sound event localization and detection (SELD), which jointly performs sound event detection (SED) and direction-of-arrival (DoA) estimation, detects the type and occurrence time of sound events as well as their corresponding DoA angles simultaneously. We study the SELD task from a multi-task learning perspective. Two open problems are addressed in this paper. Firstly, to detect overlapping sound events of the same type but with different DoAs, we propose to use a trackwise output format and solve the accompanying track permutation problem with permutation-invariant training. Multi-head self-attention is further used to separate tracks. Secondly, a previous finding is that, by using hard parameter-sharing, SELD suffers from a performance loss compared with learning the subtasks separately. This is solved by a soft parameter-sharing scheme. We term the proposed method as Event Independent Network V2 (EINV2), which is an improved version of our previously-proposed method and an end-to-end network for SELD. We show that our proposed EINV2 for joint SED and DoA estimation outperforms previous methods by a large margin, and has comparable performance to state-of-the-art ensemble models. Index Terms— Sound event localization and detection, direction of arrival, event-independent, permutation-invariant training, multi-task learning.

Polyphonic sound event localization and detection is not only detecting what sound events are happening but localizing corresponding sound sources. This series of tasks was first introduced in DCASE 2019 Task 3. In 2020, the sound event localization and detection task introduces additional challenges in moving sound sources and overlapping-event cases, which include two events of the same type with two different direction-of-arrival (DoA) angles. In this paper, a novel event-independent network for polyphonic sound event lo-calization and detection is proposed. Unlike the two-stage method we proposed in DCASE 2019 Task 3, this new network is fully end-to-end. Inputs to the network are first-order Ambisonics (FOA) time-domain signals, which are then fed into a 1-D convolutional layer to extract acoustic features. The network is then split into two parallel branches. The first branch is for sound event detection (SED), and the second branch is for DoA estimation. There are three types of predictions from the network, SED predictions, DoA predictions , and event activity detection (EAD) predictions that are used to combine the SED and DoA features for onset and offset estimation. All of these predictions have the format of two tracks indicating that there are at most two overlapping events. Within each track, there could be at most one event happening. This architecture introduces a problem of track permutation. To address this problem, a frame-level permutation invariant training method is used. Experimental results show that the proposed method can detect polyphonic sound events and their corresponding DoAs. Its performance on the Task 3 dataset is greatly increased as compared with that of the baseline method. Index Terms— Sound event localization and detection, direction of arrival, event-independent, permutation invariant training.

Acoustic Scene Classification (ASC) is a task that classifies a scene according to environmental acoustic signals. Audios collected from different cities and devices often exhibit biases in feature distributions, which may negatively impact ASC performance. Taking the city and device of the audio collection as two types of data domain, this paper attempts to disentangle the audio features of each domain to remove the related feature biases. A dual-alignment framework is proposed to generalize the ASC system on new devices or cities, by aligning boundaries across domains and decision boundaries within each domain. During the alignment, the maximum classifier discrepancy and gradient reversed layer are used for the feature disentanglement of scene, city and device, while four candidate domain classifiers are proposed to explore the optimal solution of feature disentanglement. To evaluate the dual-alignment framework, three experiments of biased ASC tasks are designed: 1) cross-city ASC in new cities; 2) cross-device ASC in new devices; 3) cross-city-device ASC in new cities and new devices. Results demonstrate the superiority of the proposed framework, showcasing performance improvements of 0.9%, 19.8%, and 10.7% on classification accuracy, respectively. The effectiveness of the proposed feature disentanglement approach is further evaluated in both biased and unbiased ASC problems, and the results demonstrate that better-disentangled audio features can lead to a more robust ASC system across different devices and cities. This paper advocates for the integration of feature disentanglement in ASC systems to achieve more reliable performance.

Particle filters (PFs) have been widely used in speaker tracking due to their capability in modeling a non-linear process or a non-Gaussian environment. However, particle filters are limited by several issues. For example, pre-defined handcrafted measurements are often used which can limit the model performance. In addition, the transition and update models are often preset which make PF less flexible to be adapted to different scenarios. To address these issues, we propose an end-to-end differentiable particle filter framework by employing the multi-head attention to model the long-range dependencies. The proposed model employs the self-attention as the learned transition model and the cross-attention as the learned update model. To our knowledge, this is the first proposal of combining particle filter and transformer for speaker tracking, where the measurement extraction, transition and update steps are integrated into an end-to-end architecture. Experimental results show that the proposed model achieves superior performance over the recurrent baseline models.

Despite recent progress in text-to-audio (TTA) generation, we show that the state-of-the-art models, such as AudioLDM, trained on datasets with an imbalanced class distribution, such as AudioCaps, are biased in their generation performance. Specifically, they excel in generating common audio classes while underperforming in the rare ones, thus degrading the overall generation performance. We refer to this problem as long-tailed text-to-audio generation. To address this issue, we propose a simple retrieval-augmented approach for TTA models. Specifically, given an input text prompt, we first leverage a Contrastive Language Audio Pretraining (CLAP) model to retrieve relevant text-audio pairs. The features of the retrieved audio-text data are then used as additional conditions to guide the learning of TTA models. We enhance AudioLDM with our proposed approach and denote the resulting augmented system as Re-AudioLDM. On the AudioCaps dataset, Re-AudioLDM achieves a state-of-the-art Frechet Audio Distance (FAD) of 1.37, outperforming the existing approaches by a large margin. Furthermore, we show that Re-AudioLDM can generate realistic audio for complex scenes, rare audio classes, and even unseen audio types, indicating its potential in TTA tasks.

Foley sound generation aims to synthesise the background sound for multimedia content. Previous models usually employ a large development set with labels as input (e.g., single numbers or one-hot vector). In this work, we propose a diffusion model based system for Foley sound generation with text conditions. To alleviate the data scarcity issue, our model is initially pre-trained with large-scale datasets and fine-tuned to this task via transfer learning using the contrastive language-audio pertaining (CLAP) technique. We have observed that the feature embedding extracted by the text encoder can significantly affect the performance of the generation model. Hence, we introduce a trainable layer after the encoder to improve the text embedding produced by the encoder. In addition, we further refine the generated waveform by generating multiple candidate audio clips simultaneously and selecting the best one, which is determined in terms of the similarity score between the embedding of the candidate clips and the embedding of the target text label. Using the proposed method, our system ranks \({1}^{st}\) among the systems submitted to DCASE Challenge 2023 Task 7. The results of the ablation studies illustrate that the proposed techniques significantly improve sound generation performance. The codes for implementing the proposed system are available online.

Universal source separation (USS) is a fundamental research task for computational auditory scene analysis, which aims to separate mono recordings into individual source tracks. There are three potential challenges awaiting the solution to the audio source separation task. First, previous audio source separation systems mainly focus on separating one or a limited number of specific sources. There is a lack of research on building a unified system that can separate arbitrary sources via a single model. Second, most previous systems require clean source data to train a separator, while clean source data are scarce. Third, there is a lack of USS system that can automatically detect and separate active sound classes in a hierarchical level. To use large-scale weakly labeled/unlabeled audio data for audio source separation, we propose a universal audio source separation framework containing: 1) an audio tagging model trained on weakly labeled data as a query net; and 2) a conditional source separation model that takes query net outputs as conditions to separate arbitrary sound sources. We investigate various query nets, source separation models, and training strategies and propose a hierarchical USS strategy to automatically detect and separate sound classes from the AudioSet ontology. By solely leveraging the weakly labelled AudioSet, our USS system is successful in separating a wide variety of sound classes, including sound event separation, music source separation, and speech enhancement. The USS system achieves an average signal-to-distortion ratio improvement (SDRi) of 5.57 dB over 527 sound classes of AudioSet; 10.57 dB on the DCASE 2018 Task 2 dataset; 8.12 dB on the MUSDB18 dataset; an SDRi of 7.28 dB on the Slakh2100 dataset; and an SSNR of 9.00 dB on the voicebank-demand dataset. We release the source code at https://github.com/bytedance/uss

Large Language Models (LLMs) have shown great promise in integrating diverse expert models to tackle intricate language and vision tasks. Despite their significance in advancing the field of Artificial Intelligence Generated Content (AIGC), their potential in intelligent audio content creation remains unexplored. In this work, we tackle the problem of creating audio content with storylines encompassing speech, music, and sound effects, guided by text instructions. We present WavJourney, a system that leverages LLMs to connect various audio models for audio content generation. Given a text description of an auditory scene, WavJourney first prompts LLMs to generate a structured script dedicated to audio storytelling. The audio script incorporates diverse audio elements, organized based on their spatio-temporal relationships. As a conceptual representation of audio, the audio script provides an interactive and interpretable rationale for human engagement. Afterward, the audio script is fed into a script compiler, converting it into a computer program. Each line of the program calls a task-specific audio generation model or computational operation function (e.g., concatenate, mix). The computer program is then executed to obtain an explainable solution for audio generation. We demonstrate the practicality of WavJourney across diverse real-world scenarios, including science fiction, education, and radio play. The explainable and interactive design of WavJourney fosters human-machine co-creation in multi-round dialogues, enhancing creative control and adaptability in audio production. WavJourney audiolizes the human imagination, opening up new avenues for creativity in multimedia content creation.

Language-queried audio source separation (LASS) is a new paradigm for computational auditory scene analysis (CASA). LASS aims to separate a target sound from an audio mixture given a natural language query, which provides a natural and scalable interface for digital audio applications. Recent works on LASS, despite attaining promising separation performance on specific sources (e.g., musical instruments, limited classes of audio events), are unable to separate audio concepts in the open domain. In this work, we introduce AudioSep, a foundation model for open-domain audio source separation with natural language queries. We train AudioSep on large-scale multimodal datasets and extensively evaluate its capabilities on numerous tasks including audio event separation, musical instrument separation, and speech enhancement. AudioSep demonstrates strong separation performance and impressive zero-shot generalization ability using audio captions or text labels as queries, substantially outperforming previous audio-queried and language-queried sound separation models. For reproducibility of this work, we will release the source code, evaluation benchmark and pre-trained model at: https://github.com/Audio-AGI/AudioSep.

For learning-based sound event localization and detection (SELD) methods, different acoustic environments in the training and test sets may result in large performance differences in the validation and evaluation stages. Different environments, such as different sizes of rooms, different reverberation times, and different background noise, may be reasons for a learning-based system to fail. On the other hand, acquiring annotated spatial sound event samples, which include onset and offset time stamps, class types of sound events, and direction-of-arrival (DOA) of sound sources is very expensive. In addition, deploying a SELD system in a new environment often poses challenges due to time-consuming training and fine-tuning processes. To address these issues, we propose Meta-SELD, which applies meta-learning methods to achieve fast adaptation to new environments. More specifically, based on Model Agnostic Meta-Learning (MAML), the proposed Meta-SELD aims to find good meta-initialized parameters to adapt to new environments with only a small number of samples and parameter updating iterations. We can then quickly adapt the meta-trained SELD model to unseen environments. Our experiments compare fine-tuning methods from pre-trained SELD models with our Meta-SELD on the Sony-TAU Realistic Spatial Soundscapes 2023 (STARSSS23) dataset. The evaluation results demonstrate the effectiveness of Meta-SELD when adapting to new environments.

Foley sound presents the background sound for multimedia content and the generation of Foley sound involves computationally modelling sound effects with specialized techniques. In this work, we proposed a system for DCASE 2023 challenge task 7: Foley Sound Synthesis. The proposed system is based on AudioLDM, which is a diffusion-based text-to-audio generation model. To alleviate the data-hungry problem, the system first trained with large-scale datasets and then downstreamed into this DCASE task via transfer learning. Through experiments, we found out that the feature extracted by the encoder can significantly affect the performance of the generation model. Hence, we improve the results by leveraging the input label with related text embedding features obtained by a significant language model, i.e., contrastive language-audio pertaining (CLAP). In addition, we utilize a filtering strategy to further refine the output, i.e. by selecting the best results from the candidate clips generated in terms of the similarity score between the sound and target labels. The overall system achieves a Frechet audio distance (FAD) score of 4.765 on average among all seven different classes, substantially outperforming the baseline system which performs a FAD score of 9.7.

Automated audio captioning (AAC) aims to describe the content of an audio clip using simple sentences. Existing AAC methods are developed based on an encoder-decoder architecture that success is attributed to the use of a pre-trained CNN10 called PANNs as the encoder to learn rich audio representations. AAC is a highly challenging task due to its high-dimensional talent space involves audio of various scenarios. Existing methods only use the high-dimensional representation of the PANNs as the input of the decoder. However, the low-dimension representation may retain as much audio information as the high-dimensional representation may be neglected. In addition, although the high-dimensional approach may predict the audio captions by learning from existing audio captions, which lacks robustness and efficiency. To deal with these challenges, a fusion model which integrates low- and high-dimensional features AAC framework is proposed. In this paper, a new encoder-decoder framework is proposed called the Low- and High-Dimensional Feature Fusion (LHDFF) model for AAC. Moreover, in LHDFF, a new PANNs encoder is proposed called Residual PANNs (RPANNs) by fusing the low-dimensional feature from the intermediate convolution layer output and the high-dimensional feature from the final layer output of PANNs. To fully explore the information of the low- and high-dimensional fusion feature and high-dimensional feature respectively, we proposed dual transformer decoder structures to generate the captions in parallel. Especially, a probabilistic fusion approach is proposed that can ensure the overall performance of the system is improved by concentrating on the respective advantages of the two transformer decoders. Experimental results show that LHDFF achieves the best performance on the Clotho and AudioCaps datasets compared with other existing models

The advancement of audio-language (AL) multimodal learning tasks has been significant in recent years. However, researchers face challenges due to the costly and time-consuming collection process of existing audio-language datasets, which are limited in size. To address this data scarcity issue, we introduce WavCaps, the first large-scale weakly-labelled audio captioning dataset, comprising approximately 400k audio clips with paired captions. We sourced audio clips and their raw descriptions from web sources and a sound event detection dataset. However, the online-harvested raw descriptions are highly noisy and unsuitable for direct use in tasks such as automated audio captioning. To overcome this issue, we propose a three-stage processing pipeline for filtering noisy data and generating high-quality captions, where ChatGPT, a large language model, is leveraged to filter and transform raw descriptions automatically. We conduct a comprehensive analysis of the characteristics of WavCaps dataset and evaluate it on multiple downstream audio-language multimodal learning tasks. The systems trained on WavCaps outperform previous state-of-the-art (SOTA) models by a significant margin. Our aspiration is for the WavCaps dataset we have proposed to facilitate research in audio-language multimodal learning and demonstrate the potential of utilizing ChatGPT to enhance academic research. Our dataset and codes are available at https://github.com/XinhaoMei/WavCaps.