11am - 12 noon

Friday 30 January 2026

Democratising Sketch-based Vision Applications via Foundation Models

PhD Viva Open Presentation - Subhadeep Koley

Hybrid Meeting (21BA02 & Teams) - All Welcome!

Free

University of Surrey

Guildford

Surrey

GU2 7XH



Abstract:

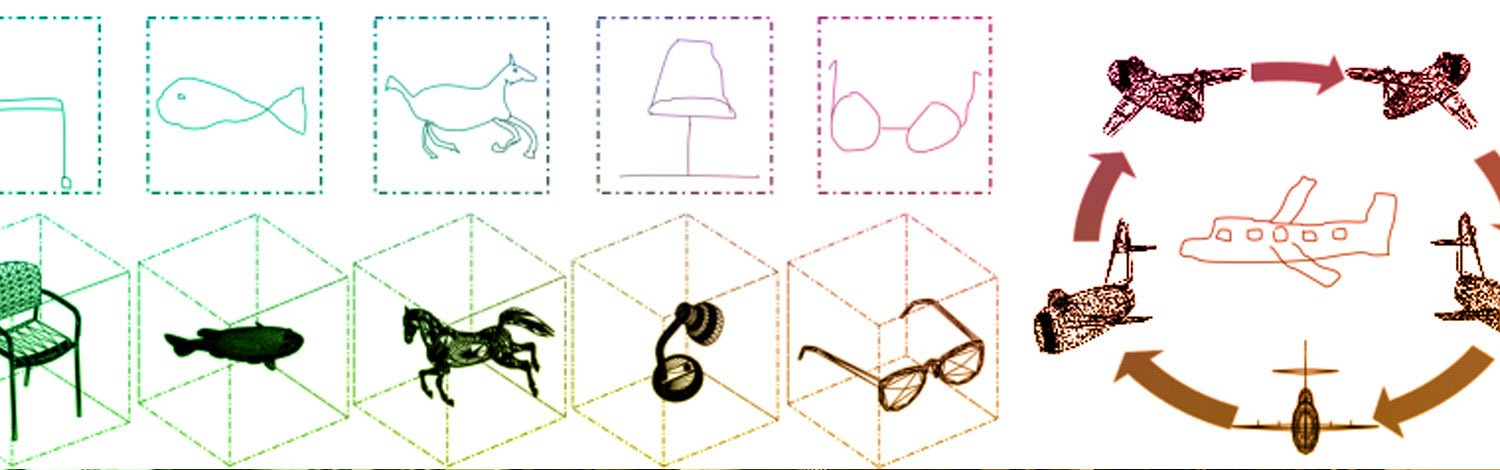

Since prehistoric times, sketches have served as a timeless medium for humans to express thoughts, communicate ideas, and capture fleeting inspirations. Their intuitive nature often conveys concepts more effectively than words (e.g., the moments when we instinctively reach for pen and paper to sketch out a complex idea). As the field of computer vision evolves, the recent advent of foundation models has significantly transformed the landscape. These models, trained on vast datasets, have demonstrated remarkable versatility across a wide range of visual tasks. However, their potential remains largely untapped in the realm of sketch-based understanding. Making powerful foundation models more accessible can open up new possibilities for sketch-based tasks such as generation, retrieval, and editing, and can place the expressiveness of human sketches at the centre of AI-driven visual understanding. Building on this vision, the thesis delivers four key contributions across two application domains, under the unifying theme of democratisation.

The first chapter democratises sketch-based image retrieval (SBIR) by bridging the abstraction gap between abstract freehand sketches and pixel-perfect photos. We embed abstraction understanding into our system by introducing feature-level and retrieval granularity-level innovations, leveraging the knowledge-rich latent space of a pre-trained StyleGAN.

The second chapter further advances the democratisation of SBIR by introducing a universal zero-shot framework that leverages the latent potential of a pre-trained diffusion model, combined with prompt learning, to effectively handle unseen object categories during retrieval.

The third chapter extends the scope of democratisation to sketch-based image generation by introducing freehand sketch conditioning into StyleGAN models. This lowers the usability barrier, enabling everyday users to transform their rough outlines into realistic images without needing the edgemap-like sketches typically drawn by skilled artists.

Finally, the fourth chapter further advances the democratisation of multi-class image generation by incorporating practical sketch control into diffusion models and removing the need for detailed textual prompts, thus enabling a sketch-only generation experience. Collectively, these contributions lay a foundation for accessible, sketch-centric workflows with foundation models, empowering users across skill levels to create visual content intuitively.