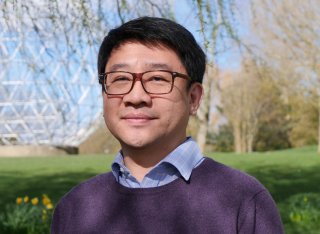

Professor Yi-Zhe Song

Academic and research departments

Centre for Vision, Speech and Signal Processing (CVSSP), School of Computer Science and Electronic Engineering.

About

Biography

Yi-Zhe Song is a Professor of Computer Vision and AI at the Centre for Vision, Speech and Signal Processing (CVSSP), University of Surrey, one of the UK's oldest and largest research centres on Artificial Intelligence.

He is Co-Director of the Surrey Institute for People-Centred AI, and leads the SketchX Lab within CVSSP. His vision for SketchX is understanding how seeing can be explained by drawing. In other words, how better understanding of human sketch data can be translated to insights of how human visual systems operate, and in turn how such insights can benefit computer vision and cognitive science at large.

He serves as an Associate Editor of the IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) and the International Journal of Computer Vision (IJCV) — the two top-ranked journals in the field. He is Co-General Chair for ACM Multimedia 2028 and the Alan Turing Institute Academic Lead at Surrey. He regularly serves as Area Chair for flagship conferences, including CVPR (2021–2025), ICCV (2021, 2023), and ECCV (2022), and was Programme Chair for BMVC 2021.

SketchX publishes consistently in top-tier conferences and journals, including a Best Paper Award at BMVC 2015 and a CVPR 2023 Best Paper Award Finalist (top 0.1%). As of 2025: 58× CVPR, 16× ICCV, 18× ECCV, 5× ICLR, 2× NeurIPS, 3× SIGGRAPH Asia.

He is Co-Director of the UKRI Centre for Doctoral Training on AI for Digital Media Inclusion, and Democratisation Lead on the £75.6M UKRI CoSTAR programme — one of the largest infrastructure grants in UKRI history. He founded and leads the MSc in AI programme at Surrey, having previously established an MSc in AI programme at Queen Mary University of London.

He is consistently ranked in Stanford University's World Top 2% Scientists list (2022–2025).

He obtained a PhD in 2008 from the University of Bath, an MSc (Best Dissertation Award) in 2004 from the University of Cambridge, and a Bachelor's Degree (First Class Honours) in 2003 from the University of Bath.

Publications

This paper, for the first time, marries large foundation models with human sketch understanding. We demonstrate what this brings – a paradigm shift in terms of generalised sketch representation learning (e.g., classification). This generalisation happens on two fronts: (i) generalisation across unknown categories (i.e., open-set), and (ii) generalisation traversing abstraction levels (i.e., good and bad sketches), both being timely challenges that remain unsolved in the sketch literature. Our design is intuitive and centred around transferring the already stellar generalisation ability of CLIP to benefit generalised learning for sketches. We first “condition” the vanilla CLIP model by learning sketch-specific prompts using a novel auxiliary head of raster-to-vector sketch conversion. This importantly makes CLIP “sketch-aware”. We then make CLIP acute to the inherently different sketch abstraction levels. This is achieved by learning a codebook of abstraction-specific prompt biases, a weighted combination of which facilitates the representation of sketches across abstraction levels – low abstract edge-maps, medium abstract sketches in TU-Berlin, and highly abstract doodles in QuickDraw. Our framework surpasses popular sketch representation learning algorithms in both zero-shot and few-shot setups and in novel settings across different abstraction boundaries.

In this paper, we democratise 3D content creation, enabling precise generation of 3D shapes from abstract sketches while overcoming limitations tied to drawing skills. We introduce a novel part-level modelling and alignment framework that facilitates abstraction modelling and cross-modal correspondence. Leveraging the same part-level decoder, our approach seamlessly extends to sketch modelling by establishing correspondence between CLIPasso edgemaps and projected 3D part regions, eliminating the need for a dataset pairing human sketches and 3D shapes. Additionally, our method introduces a seamless in-position editing process as a byproduct of cross-modal part-aligned modelling. Operating in a low-dimensional implicit space, our approach significantly reduces computational demands and processing time.

In this paper, we explore the unique modality of sketch for explainability, emphasising the profound impact of human strokes compared to conventional pixel-oriented studies. Beyond explanations of network behavior, we discern the genuine implications of explainability across diverse downstream sketch-related tasks. We propose a lightweight and portable explainability solution -- a seamless plugin that integrates effortlessly with any pre-trained model, eliminating the need for re-training. Demonstrating its adaptability, we present four applications: highly studied retrieval and generation, and completely novel assisted drawing and sketch adversarial attacks. The centrepiece to our solution is a stroke-level attribution map that takes different forms when linked with downstream tasks. By addressing the inherent non-differentiability of rasterisation, we enable explanations at both coarse stroke level (SLA) and partial stroke level (P-SLA), each with its advantages for specific downstream tasks.

We propose SketchINR, to advance the representation of vector sketches with implicit neural models. A variable length vector sketch is compressed into a latent space of fixed dimension that implicitly encodes the underlying shape as a function of time and strokes. The learned function predicts the $xy$ point coordinates in a sketch at each time and stroke. Despite its simplicity, SketchINR outperforms existing representations at multiple tasks: (i) Encoding an entire sketch dataset into a fixed size latent vector, SketchINR gives $60\times$ and $10\times$ data compression over raster and vector sketches, respectively. (ii) SketchINR's auto-decoder provides a much higher-fidelity representation than other learned vector sketch representations, and is uniquely able to scale to complex vector sketches such as FS-COCO. (iii) SketchINR supports parallelisation that can decode/render $\sim 100\times$ faster than other learned vector representations such as SketchRNN. (iv) SketchINR, for the first time, emulates the human ability to reproduce a sketch with varying abstraction in terms of number and complexity of strokes. As a first look at implicit sketches, SketchINR's compact high-fidelity representation will support future work in modelling long and complex sketches.

3D shape modeling is labor-intensive, time-consuming, and requires years of expertise. To facilitate 3D shape modeling, we propose a 3D shape generation network that takes a 3D VR sketch as a condition. We assume that sketches are created by novices without art training and aim to reconstruct geometrically realistic 3D shapes of a given category. To handle potential sketch ambiguity, our method creates multiple 3D shapes that align with the original sketch’s structure. We carefully design our method, training the model step-by-step and leveraging multi-modal 3D shape representation to support training with limited training data. To guarantee the realism of generated 3D shapes we leverage the normalizing flow that models the distribution of the latent space of 3D shapes. To encourage the fidelity of the generated 3D shapes to an input sketch, we propose a dedicated loss that we deploy at different stages of the training process. The code is available at https://github.com/Rowl1ng/3Dsketch2shape.

Despite significant progress, the shortage of labeled data and expert knowledge remains a challenge for Fine-grained Visual Classification (FGVC). Some multi-source approaches that incorporate additional modalities, such as sound or bounding boxes, show promise for data enrichment but introduce added complexity to data collection. In this paper, we pose the question: can multi-source capabilities be achieved solely with existing images? The answer, confirmed by a pilot study, is affirmative. By analyzing the probability distributions of model outputs for images of different resolutions, we find that complementary information beneficial to FGVC exists among images of different resolutions. Although classification accuracy on low-resolution images is lower than on high-resolution images, low-resolution images can provide additional information for high-resolution inputs. We designed a naive baseline that uses mixed training of multi-resolution images. Through the experimental results of the baseline, we find that i) not all low-resolution images are beneficial, and ii) adaptively selecting low-resolution images is what we need. Therefore, we proposed a meta-learning-based adaptive "resolution" pooling layer. Through the pooling operation, the features of low-resolution images are obtained from high-resolution images, and the most appropriate complementary features are selected for the features of high-resolution images through the gating mechanism, which enables the model to fully and autonomously exploit the complementary information. Experimental results on three FGVC datasets validate the effectiveness of our proposed method. Our code is available at https://github.com/PRIS-CV/Adaptive-Multi-Resolution-Feature-Fusion.

Analysis of human sketches in deep learning has advanced immensely through the use of waypoint-sequences rather than raster-graphic representations. We further aim to model sketches as a sequence of low-dimensional parametric curves. To this end, we propose an inverse graphics framework capable of approximating a raster or waypoint based stroke encoded as a point-cloud with a variable-degree Bézier curve. Building on this module, we present Cloud2Curve, a generative model for scalable high-resolution vector sketches that can be trained end-to-end using point-cloud data alone. As a consequence, our model is also capable of deterministic vectorization which can map novel raster or waypoint based sketches to their corresponding high-resolution scalable Bézier equivalent. We evaluate the generation and vectorization capabilities of our model on Quick, Draw! and K-MNIST datasets. The analysis of free-hand sketches using deep learning [40] has flourished over the past few years, with sketches now being well analysed from classification [43, 42] and retrieval [27, 12, 4] perspectives. Sketches for digital analysis have always been acquired in two primary modalities - raster (pixel grids) and vector (line segments). Raster sketches have mostly been the modality of choice for sketch recognition and retrieval [43, 27]. However, generative sketch models began to advance rapidly [16] after focusing on vector representations and generating sketches as sequences [7, 37] of waypoints/line segments, similarly to how humans sketch. As a happy byproduct, this paradigm leads to clean and blur-free image generation as opposed to direct raster-graphic generations [30]. Recent works have studied creativity in sketch generation [16], learning to sketch raster photo input images [36], learning efficient

We study the practical task of fine-grained 3D-VR-sketch-based 3D shape retrieval. This task is of particular interest as 2D sketches were shown to be effective queries for 2D images. However, due to the domain gap, it remains hard to achieve strong performance in 3D shape retrieval from 2D sketches. Recent work demonstrated the advantage of 3D VR sketching on this task. In our work, we focus on the challenge caused by inherent inaccuracies in 3D VR sketches. We observe that retrieval results obtained with a triplet loss with a fixed margin value, commonly used for retrieval tasks, contain many irrelevant shapes and often just one or few with a similar structure to the query. To mitigate this problem, we for the first time draw a connection between adaptive margin values and shape similarities. In particular, we propose to use a triplet loss with an adaptive margin value driven by a 'fitting gap', which is the similarity of two shapes under structure-preserving deformations. We also conduct a user study which confirms that this fitting gap is indeed a suitable criterion to evaluate the structural similarity of shapes. Furthermore, we introduce a dataset of 202 VR sketches for 202 3D shapes drawn from memory rather than from observation. The code and data are available at https://github.com/Rowl1ng/Structure-Aware-VR-Sketch-Shape-Retrieval.
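The adaptive-margin idea in the abstract above can be sketched in a few lines. This is only an illustration under stated assumptions, not the authors' implementation: the function names, the Euclidean distance, and the linear mapping from fitting gap to margin (`scale * fitting_gap`) are hypothetical, and the fitting gap is assumed to be a precomputed non-negative number that grows as two shapes become less structurally similar.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def adaptive_margin_triplet_loss(anchor, positive, negative, fitting_gap, scale=1.0):
    """Triplet loss whose margin depends on the positive/negative fitting gap.

    Assumption: a larger fitting gap means the negative shape is structurally
    further from the positive one, so it is pushed away by a larger margin.
    """
    margin = scale * fitting_gap  # hypothetical linear mapping
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


# Toy 2D embeddings: the sketch (anchor), its matching shape, and another shape.
sketch, match, other = [0.0, 0.0], [0.0, 1.0], [0.0, 3.0]
loss_similar = adaptive_margin_triplet_loss(sketch, match, other, fitting_gap=0.5)
loss_dissimilar = adaptive_margin_triplet_loss(sketch, match, other, fitting_gap=3.0)
```

With a fixed margin every negative is pushed away by the same amount; here a structurally similar negative (small gap) incurs no penalty at the same embedding distance at which a dissimilar one still does.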

We advance sketch research to scenes with the first dataset of freehand scene sketches, FS-COCO. With practical applications in mind, we collect sketches that convey scene content well but can be sketched within a few minutes by a person with any sketching skills. Our dataset comprises 10,000 freehand scene vector sketches with per point space-time information by 100 non-expert individuals, offering both object- and scene-level abstraction. Each sketch is augmented with its text description. Using our dataset, we study for the first time the problem of fine-grained image retrieval from freehand scene sketches and sketch captions. We draw insights on: (i) Scene salience encoded in sketches using the strokes' temporal order; (ii) Performance comparison of image retrieval from a scene sketch and an image caption; (iii) Complementarity of information in sketches and image captions, as well as the potential benefit of combining the two modalities. In addition, we extend a popular vector sketch LSTM-based encoder to handle sketches with larger complexity than was supported by previous work. Namely, we propose a hierarchical sketch decoder, which we leverage at a sketch-specific "pretext" task. Our dataset enables for the first time research on freehand scene sketch understanding and its practical applications. We release the dataset under CC BY-NC 4.0 license: https://fscoco.github.io

Whether what you see in Figure 1 is a "flamingo" or a "bird", is the question we ask in this paper. While fine-grained visual classification (FGVC) strives to arrive at the former, for the majority of us non-experts just "bird" would probably suffice. The real question is therefore - how can we tailor for different fine-grained definitions under divergent levels of expertise. For that, we re-envisage the traditional setting of FGVC, from single-label classification, to that of top-down traversal of a pre-defined coarse-to-fine label hierarchy - so that our answer becomes "bird" ⇒ "Phoenicopteriformes" ⇒ "Phoenicopteridae" ⇒ "flamingo". To approach this new problem, we first conduct a comprehensive human study where we confirm that most participants prefer multi-granularity labels, regardless of whether they consider themselves experts. We then discover the key intuition that: coarse-level label prediction exacerbates fine-grained feature learning, yet fine-level feature betters the learning of coarse-level classifier. This discovery enables us to design a very simple albeit surprisingly effective solution to our new problem, where we (i) leverage level-specific classification heads to disentangle coarse-level features with fine-grained ones, and (ii) allow finer-grained features to participate in coarser-grained label predictions, which in turn helps with better disentanglement. Experiments show that our method achieves superior performance in the new FGVC setting, and performs better than state-of-the-art on the traditional single-label FGVC problem as well. Thanks to its simplicity, our method can be easily implemented on top of any existing FGVC frameworks and is parameter-free.
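To make the coarse-to-fine label hierarchy concrete, the following minimal sketch recovers the top-down label path for the flamingo example. The `PARENT` dictionary and `label_path` helper are hypothetical illustrations of the data structure only; they say nothing about how the paper's classifier heads are trained.

```python
# Child -> parent links for the example hierarchy quoted in the abstract.
PARENT = {
    "flamingo": "Phoenicopteridae",
    "Phoenicopteridae": "Phoenicopteriformes",
    "Phoenicopteriformes": "bird",
}


def label_path(fine_label, parent=PARENT):
    """Return the labels from the coarsest level down to `fine_label`."""
    path = [fine_label]
    while path[-1] in parent:  # climb until a root label is reached
        path.append(parent[path[-1]])
    return path[::-1]  # reverse so the coarsest label comes first


path = label_path("flamingo")
```

A non-expert could stop reading the path at "bird", while an expert could follow it all the way to "flamingo".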

Sketch-based image retrieval (SBIR) is a cross-modal matching problem which is typically solved by learning a joint embedding space where the semantic content shared between photo and sketch modalities are preserved. However, a fundamental challenge in SBIR has been largely ignored so far, that is, sketches are drawn by humans and considerable style variations exist amongst different users. An effective SBIR model needs to explicitly account for this style diversity, crucially, to generalise to unseen user styles. To this end, a novel style-agnostic SBIR model is proposed. Different from existing models, a cross-modal variational autoencoder (VAE) is employed to explicitly disentangle each sketch into a semantic content part shared with the corresponding photo, and a style part unique to the sketcher. Importantly, to make our model dynamically adaptable to any unseen user styles, we propose to metatrain our cross-modal VAE by adding two style-adaptive components: a set of feature transformation layers to its encoder and a regulariser to the disentangled semantic content latent code. With this meta-learning framework, our model can not only disentangle the cross-modal shared semantic content for SBIR, but can adapt the disentanglement to any unseen user style as well, making the SBIR model truly style-agnostic. Extensive experiments show that our style-agnostic model yields state-of-the-art performance for both category-level and instance-level SBIR.

Perceptual organization remains one of the very few established theories on the human visual system. It underpinned many pre-deep seminal works on segmentation and detection, yet research has seen a rapid decline since the preferential shift to learning deep models. Of the limited attempts, most aimed at interpreting complex visual scenes using perceptual organizational rules. This has however been proven to be sub-optimal, since models were unable to effectively capture the visual complexity in real-world imagery. In this paper, we rejuvenate the study of perceptual organization, by advocating two positional changes: (i) we examine purposefully generated synthetic data, instead of complex real imagery, and (ii) we ask machines to synthesize novel perceptually-valid patterns, instead of explaining existing data. Our overall answer lies with the introduction of a novel visual challenge - the challenge of perceptual question answering (PQA). Upon observing example perceptual question-answer pairs, the goal for PQA is to solve similar questions by generating answers entirely from scratch (see Figure 1). Our first contribution is therefore the first dataset of perceptual question-answer pairs, each generated specifically for a particular Gestalt principle. We then borrow insights from human psychology to design an agent that casts perceptual organization as a self-attention problem, where a proposed grid-to-grid mapping network directly generates answer patterns from scratch. Experiments show our agent to outperform a selection of naive and strong baselines. A human study however indicates that ours uses astronomically more data to learn when compared to an average human, necessitating future research (with or without our dataset).

Handwritten Text Recognition (HTR) remains a challenging problem to date, largely due to the varying writing styles that exist amongst us. Prior works however generally operate with the assumption that there is a limited number of styles, most of which have already been captured by existing datasets. In this paper, we take a completely different perspective - we work on the assumption that there is always a new style that is drastically different, and that we will only have very limited data during testing to perform adaptation. This creates a commercially viable solution - being exposed to the new style, the model has the best shot at adaptation, and the few-sample nature makes it practical to implement. We achieve this via a novel meta-learning framework which exploits additional new-writer data via a support set, and outputs a writer-adapted model via single gradient step update, all during inference (see Figure 1). We discover and leverage the important insight that there exist a few key characters per writer that exhibit relatively larger style discrepancies. For that, we additionally propose to meta-learn instance specific weights for a character-wise cross-entropy loss, which is specifically designed to work with the sequential nature of text data. Our writer-adaptive MetaHTR framework can be easily implemented on top of most state-of-the-art HTR models. Experiments show an average performance gain of 5-7% can be obtained by observing very few new style data (≤ 16).

A layout to image (L2I) generation model aims to generate a complicated image containing multiple objects (things) against natural background (stuff), conditioned on a given layout. Built upon the recent advances in generative adversarial networks (GANs), existing L2I models have made great progress. However, a close inspection of their generated images reveals two major limitations: (1) the object-to-object as well as object-to-stuff relations are often broken and (2) each object's appearance is typically distorted lacking the key defining characteristics associated with the object class. We argue that these are caused by the lack of context-aware object and stuff feature encoding in their generators, and location-sensitive appearance representation in their discriminators. To address these limitations, two new modules are proposed in this work. First, a context-aware feature transformation module is introduced in the generator to ensure that the generated feature encoding of either object or stuff is aware of other coexisting objects/stuff in the scene. Second, instead of feeding location-insensitive image features to the discriminator, we use the Gram matrix computed from the feature maps of the generated object images to preserve location-sensitive information, resulting in much enhanced object appearance. Extensive experiments show that the proposed method achieves state-of-the-art performance on the COCO-Thing-Stuff and Visual Genome benchmarks. Code available at: https://github.com/wtliao/layout2img.
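For readers unfamiliar with the Gram matrix mentioned in the abstract above, here is a minimal sketch of the generic channel-wise Gram matrix of a feature map. It is not taken from the linked repository: the nested-list layout (channels, then rows, then columns) and the normalisation by the number of spatial positions are assumptions made for illustration.

```python
def gram_matrix(features):
    """Channel-by-channel Gram matrix of a C x H x W feature map.

    `features` is a list of C channels, each a list of H rows of W floats.
    Entry (i, j) is the mean over spatial positions of channel_i * channel_j.
    The division by the number of positions is an assumed normalisation.
    """
    flat = [[value for row in channel for value in row] for channel in features]
    n = len(flat[0])  # number of spatial positions, H * W
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n for fj in flat]
        for fi in flat
    ]


# Two 2x2 channels: a diagonal pattern and a constant map.
feature_map = [
    [[1, 0], [0, 1]],
    [[1, 1], [1, 1]],
]
gram = gram_matrix(feature_map)
```

The result is a symmetric C x C matrix of channel co-activations, which is the quantity a discriminator would consume in place of the raw feature map.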

Open-Set Domain Adaptation (OSDA) aims at adapting a model trained on a labelled source domain, to an unlabeled target domain that is corrupted with unknown classes. The key challenge inherent to this open-set setting is therefore how best to avoid the negative transfer incurred by unknown classes during model adaptation. Most existing works tackle this challenge by simply pushing the entire unknown classes away. In this paper, we take a different stance - instead of addressing these unknown classes as a single entity, we "reserve" in-between spaces for their subsets in the learned embedding. Our key finding is that the inter-class relations learned off the source domain, can help to enforce class separations in the target domain - thereby reserving spaces for unknown classes. More specifically, we first prep the "reservation" by tightening the known-class representations while enlarging their inter-class margin. We then learn soft-label prototypes in the source domain to facilitate the discrimination of known and unknown samples in the target domain. It follows that these two steps are iterated at each epoch in a mutually beneficial manner - better discrimination of unknown samples helps with space reservation, and vice versa. We show state-of-the-art results on four standard OSDA datasets, Office-31, Office-Home, VisDA and ImageCLEF, and conduct further analysis to help understand our method. Codes are available at: https://github.com/PRIS-CV/Reserve_to_Adapt

A fundamental challenge faced by existing Fine-Grained Sketch-Based Image Retrieval (FG-SBIR) models is the data scarcity – model performances are largely bottlenecked by the lack of sketch-photo pairs. Whilst the number of photos can be easily scaled, each corresponding sketch still needs to be individually produced. In this paper, we aim to mitigate such an upper-bound on sketch data, and study whether unlabelled photos alone (of which there are many) can be cultivated for performance gain. In particular, we introduce a novel semi-supervised framework for cross-modal retrieval that can additionally leverage large-scale unlabelled photos to account for data scarcity. At the center of our semi-supervision design is a sequential photo-to-sketch generation model that aims to generate paired sketches for unlabelled photos. Importantly, we further introduce a discriminator-guided mechanism to guide against unfaithful generation, together with a distillation loss-based regularizer to provide tolerance against noisy training samples. Last but not least, we treat generation and retrieval as two conjugate problems, where a joint learning procedure is devised for each module to mutually benefit from each other. Extensive experiments show that our semi-supervised model yields a significant performance boost over the state-of-the-art supervised alternatives, as well as existing methods that can exploit unlabelled photos for FG-SBIR.

Self-supervised learning has gained prominence due to its efficacy at learning powerful representations from unlabelled data that achieve excellent performance on many challenging downstream tasks. However, supervision-free pretext tasks are challenging to design and usually modality specific. Although there is a rich literature of self-supervised methods for either spatial (such as images) or temporal data (sound or text) modalities, a common pretext task that benefits both modalities is largely missing. In this paper, we are interested in defining a self-supervised pretext task for sketches and handwriting data. This data is uniquely characterised by its existence in dual modalities of rasterized images and vector coordinate sequences. We address and exploit this dual representation by proposing two novel cross-modal translation pretext tasks for self-supervised feature learning: Vectorization and Rasterization. Vectorization learns to map image space to vector coordinates and rasterization maps vector coordinates to image space. We show that our learned encoder modules benefit both raster-based and vector-based downstream approaches to analysing hand-drawn data. Empirical evidence shows that our novel pretext tasks surpass existing single and multi-modal self-supervision methods.

Growing free online 3D shapes collections dictated research on 3D retrieval. Active debate has however been had on (i) what the best input modality is to trigger retrieval, and (ii) the ultimate usage scenario for such retrieval. In this paper, we offer a different perspective towards answering these questions -- we study the use of 3D sketches as an input modality and advocate a VR-scenario where retrieval is conducted. Thus, the ultimate vision is that users can freely retrieve a 3D model by air-doodling in a VR environment. As a first stab at this new 3D VR-sketch to 3D shape retrieval problem, we make four contributions. First, we code a VR utility to collect 3D VR-sketches and conduct retrieval. Second, we collect the first set of 167 3D VR-sketches on two shape categories from ModelNet. Third, we propose a novel approach to generate a synthetic dataset of human-like 3D sketches of different abstract levels to train deep networks. Finally, we compare the common multi-view and volumetric approaches: We show that, in contrast to 3D shape to 3D shape retrieval, volumetric point-based approaches exhibit superior performance on 3D sketch to 3D shape retrieval due to the sparse and abstract nature of 3D VR-sketches. We believe these contributions will collectively serve as enablers for future attempts at this problem. The VR interface, code and datasets are available at https://tinyurl.com/3DSketch3DV.

Achieving generalization for deep learning models has usually suffered from the bottleneck of annotated sample scarcity. As a common way of tackling this issue, few-shot learning focuses on "episodes", i.e. sampled tasks that help the model acquire generalizable knowledge onto unseen categories: the better the episodes, the higher a model's generalisability. Despite extensive research, the characteristics of episodes and their potential effects are relatively less explored. A recent paper discussed that different episodes exhibit different prediction difficulties, and coined a new metric "hardness" to quantify episodes, which however is too wide-range for an arbitrary dataset and thus remains impractical for realistic applications. In this paper therefore, we for the first time conduct an algebraic analysis of the critical factors influencing episode hardness supported by experimental demonstrations, that reveal episode hardness to largely depend on classes within an episode, and importantly propose an efficient pre-sampling hardness assessment technique named Inverse-Fisher Discriminant Ratio (IFDR). This enables sampling hard episodes at the class level via class-level (cl) sampling scheme that drastically decreases quantification cost. Delving deeper, we also develop a variant called class-pair-level (cpl) sampling, which further reduces the sampling cost while guaranteeing the sampled distribution. Finally, comprehensive experiments conducted on benchmark datasets verify the efficacy of our proposed method. Codes are available at: https://github.com/PRIS-CV/class-level-sampling

Reconstructing a 3D shape based on a single sketch image is challenging due to the inherent sparsity and ambiguity present in sketches. Existing methods lose fine details when extracting features to predict 3D objects from sketches. Upon analyzing the 3D-to-2D projection process, we observe that the density map, characterizing the distribution of 2D point clouds, can serve as a proxy to facilitate the reconstruction process. In this work, we propose a novel sketch-based 3D reconstruction model named SketchSampler. It initiates the process by translating a sketch through an image translation network into a more informative 2D representation, which is then used to generate a density map. Subsequently, a two-stage probabilistic sampling process is employed to reconstruct a 3D point cloud: firstly, recovering the 2D points (i.e., the x and y coordinates) by sampling the density map; and secondly, predicting the depth (i.e., the z coordinate) by sampling the depth values along the ray determined by each 2D point. Additionally, we convert the reconstructed point cloud into a 3D mesh for wider applications. To reduce ambiguity, we incorporate hidden lines in sketches. Experimental results demonstrate that our proposed approach significantly outperforms other baseline methods.
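The first sampling stage described above, recovering 2D points from a density map, can be illustrated as follows. This is a rough sketch under assumptions rather than the SketchSampler code: the density map is taken to be a small grid of non-negative weights, `sample_2d_points` is a hypothetical helper, and the second stage (sampling depth along each ray) is omitted.

```python
import random


def sample_2d_points(density, n_points, seed=0):
    """Draw (x, y) grid cells with probability proportional to their density.

    `density` is a list of rows of non-negative weights with a positive sum.
    Sampling is with replacement, so dense cells can be drawn several times.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    cells = [(x, y) for y, row in enumerate(density) for x in range(len(row))]
    weights = [value for row in density for value in row]
    return rng.choices(cells, weights=weights, k=n_points)


# All of the mass sits in the bottom-right cell, so every sample lands there.
density_map = [
    [0.0, 0.0],
    [0.0, 1.0],
]
points = sample_2d_points(density_map, n_points=5)
```

In a full pipeline each sampled (x, y) would then be paired with a depth value drawn along the corresponding ray to form a 3D point.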

In this paper, we delve into the intricate dynamics of Fine-Grained Sketch-Based Image Retrieval (FG-SBIR) by addressing a critical yet overlooked aspect – the choice of viewpoint during sketch creation. Unlike photo systems that seamlessly handle diverse views through extensive datasets, sketch systems, with limited data collected from fixed perspectives, face challenges. Our pilot study, employing a pre-trained FG-SBIR model, highlights the system’s struggle when query-sketches differ in viewpoint from target instances. Interestingly, a questionnaire however shows users desire autonomy, with a significant percentage favouring view-specific retrieval. To reconcile this, we advocate for a view-aware system, seamlessly accommodating both view-agnostic and view-specific tasks. Overcoming dataset limitations, our first contribution leverages multi-view 2D projections of 3D objects, instilling cross-modal view awareness. The second contribution introduces a customisable cross-modal feature through disentanglement, allowing effortless mode switching. Extensive experiments on standard datasets validate the effectiveness of our method.

Large-scale Vision-and-Language (V+L) pre-training for representation learning has proven to be effective in boosting various downstream V+L tasks. However, when it comes to the fashion domain, existing V+L methods are inadequate as they overlook the unique characteristics of both fashion V+L data and downstream tasks. In this work, we propose a novel fashion-focused V+L representation learning framework, dubbed as FashionViL. It contains two novel fashion-specific pre-training tasks designed particularly to exploit two intrinsic attributes with fashion V+L data. First, in contrast to other domains where a V+L datum contains only a single image-text pair, there could be multiple images in the fashion domain. We thus propose a Multi-View Contrastive Learning task for pulling closer the visual representation of one image to the compositional multimodal representation of another image+text. Second, fashion text (e.g., product description) often contains rich fine-grained concepts (attributes/noun phrases). To capitalize this, a Pseudo-Attributes Classification task is introduced to encourage the learned unimodal (visual/textual) representations of the same concept to be adjacent. Further, fashion V+L tasks uniquely include ones that do not conform to the common one-stream or two-stream architectures (e.g., text-guided image retrieval). We thus propose a flexible, versatile V+L model architecture consisting of a modality-agnostic Transformer so that it can be flexibly adapted to any downstream tasks. Extensive experiments show that our FashionViL achieves new state of the art across five downstream tasks. Code is available at https://github.com/BrandonHanx/mmf.

Image-based virtual try-on aims to fit an in-shop garment into a clothed person image. To achieve this, a key step is garment warping which spatially aligns the target garment with the corresponding body parts in the person image. Prior methods typically adopt a local appearance flow estimation model. They are thus intrinsically susceptible to difficult body poses/occlusions and large mis-alignments between person and garment images (see Fig. 1). To overcome this limitation, a novel global appearance flow estimation model is proposed in this work. For the first time, a StyleGAN based architecture is adopted for appearance flow estimation. This enables us to take advantage of a global style vector to encode a whole-image context to cope with the aforementioned challenges. To guide the StyleGAN flow generator to pay more attention to local garment deformation, a flow refinement module is introduced to add local context. Experimental results on a popular virtual try-on benchmark show that our method achieves new state-of-the-art performance. It is particularly effective in an 'in-the-wild' application scenario where the reference image is full-body, resulting in a large mis-alignment with the garment image (Fig. 1 Top). Code is available at: https://github.com/SenGHe/Flow-Stylr-VTON.

Fine-grained visual classification (FGVC) is much more challenging than traditional classification tasks due to the inherently subtle intra-class object variations. Recent works are mainly part-driven (either explicitly or implicitly), with the assumption that fine-grained information naturally rests within the parts. In this paper, we take a different stance, and show that part operations are not strictly necessary – the key lies with encouraging the network to learn at different granularities and progressively fusing multi-granularity features together. In particular, we propose: (i) a progressive training strategy that effectively fuses features from different granularities, and (ii) a random jigsaw patch generator that encourages the network to learn features at specific granularities. We evaluate on several standard FGVC benchmark datasets, and show the proposed method consistently outperforms existing alternatives or delivers competitive results. The code is available at https://github.com/PRIS-CV/PMG-Progressive-Multi-Granularity-Training.
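The random jigsaw patch generator in (ii) can be illustrated with a short, dependency-free sketch. The function name, the list-of-rows image format, and the choice of a uniform random permutation are assumptions for illustration; the released repository above holds the authors' actual implementation.

```python
import random


def jigsaw_shuffle(image, n, rng=None):
    """Split a square image (list of rows) into n x n patches and permute them.

    A larger n gives smaller patches, which limits the spatial extent of the
    cues the network can rely on.
    """
    rng = rng or random.Random()
    size = len(image)
    if size % n != 0 or any(len(row) != size for row in image):
        raise ValueError("image must be square with side divisible by n")
    patch = size // n  # side length of one patch in pixels

    # Patch grid positions in row-major order, plus a random permutation of them.
    destinations = [(r, c) for r in range(n) for c in range(n)]
    sources = destinations[:]
    rng.shuffle(sources)

    out = [[None] * size for _ in range(size)]
    for (dr, dc), (sr, sc) in zip(destinations, sources):
        for i in range(patch):
            for j in range(patch):
                out[dr * patch + i][dc * patch + j] = image[sr * patch + i][sc * patch + j]
    return out


# 4x4 toy "image" whose pixel values are 0..15, shuffled as a 2x2 jigsaw.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
shuffled = jigsaw_shuffle(image, 2, random.Random(0))
```

Pixels inside each patch keep their relative layout; only the patch positions change, so local detail at the chosen granularity survives while the global arrangement is scrambled.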

As lovely as bunnies are, your sketched version would probably not do it justice (Fig. 1). This paper recognises this very problem and studies sketch quality measurement for the first time - letting you find these badly drawn ones. Our key discovery lies in exploiting the magnitude ($L_2$ norm) of a sketch feature as a quantitative quality metric. We propose Geometry-Aware Classification Layer (GACL), a generic method that makes feature-magnitude-as-quality-metric possible and importantly does it without the need for specific quality annotations from humans. GACL sees feature magnitude and recognisability learning as a dual task, which can be simultaneously optimised under a neat cross-entropy classification loss. GACL is lightweight with theoretical guarantees and enjoys a nice geometric interpretation to reason its success. We confirm consistent quality agreements between our GACL-induced metric and human perception through a carefully designed human study. Notably, we demonstrate three practical sketch applications enabled for the first time using our quantitative quality metric.
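The scoring step itself is simple once features exist. The sketch below ranks sketches by the $L_2$ norm of their feature vectors; the feature values and sketch names are made up, and the assumption that a larger magnitude means higher quality is ours. The code does not reproduce GACL training, which is what makes the magnitude meaningful in the first place.

```python
import math


def l2_norm(feature):
    """Euclidean (L2) norm of a feature vector."""
    return math.sqrt(sum(x * x for x in feature))


def rank_by_quality(features):
    """Return sketch ids ordered from largest to smallest feature magnitude."""
    return sorted(features, key=lambda name: l2_norm(features[name]), reverse=True)


# Hypothetical 2-D features for three bunny sketches.
features = {
    "bunny_a": [3.0, 4.0],   # norm 5.0
    "bunny_b": [0.3, 0.4],   # norm 0.5
    "bunny_c": [1.0, 1.0],   # norm ~1.41
}
ranking = rank_by_quality(features)
```

Under the stated assumption, the last entries of `ranking` would be the candidates for "badly drawn" sketches.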

In this paper, we investigate the problem of zero-shot sketch-based image retrieval (ZS-SBIR), where human sketches are used as queries to conduct retrieval of photos from unseen categories. We importantly advance prior art by proposing a novel ZS-SBIR scenario that represents a firm step forward in its practical application. The new setting uniquely recognizes two important yet often neglected challenges of practical ZS-SBIR, (i) the large domain gap between amateur sketch and photo, and (ii) the necessity for moving towards large-scale retrieval. We first contribute to the community a novel ZS-SBIR dataset, QuickDraw-Extended, that consists of 330,000 sketches and 204,000 photos spanning across 110 categories. Highly abstract amateur human sketches are purposefully sourced to maximize the domain gap, instead of ones included in existing datasets that can often be semi-photorealistic. We then formulate a ZS-SBIR framework to jointly model sketches and photos into a common embedding space. A novel strategy to mine the mutual information among domains is specifically engineered to alleviate the domain gap. External semantic knowledge is further embedded to aid semantic transfer. We show that, rather surprisingly, a reduced version of our model already significantly outperforms the state of the art on existing datasets. We further demonstrate the superior performance of our full model by comparing with a number of alternatives on the newly proposed dataset. The new dataset, plus all training and testing code of our model, will be publicly released to facilitate future research.

Sketches are distinctly different to photos. They are highly abstract and exhibit a severe lack of visual cues. Prior works have therefore explored additional traits unique to sketches to help recognition such as stroke ordering. In this paper, we pioneer in studying the role of structure in sketches, for the task of sketch recognition. In particular, we propose a novel graph representation specifically designed for sketches, which follows the inherent hierarchical relationship ("segment-stroke-sketch") of sketching elements. By conforming to this hierarchy, we also introduce a joint network that encapsulates both the structural and temporal traits of sketches for sketch recognition, termed S3Net. S3Net employs a recurrent neural network (RNN) to extract segment-level features, followed by a graph convolutional network (GCN) to aggregate them into sketch-level features. The RNN first encodes temporal cues in sketches while its outputs are used as node embeddings to construct a hierarchical sketch-graph. The GCN module then takes in this sketch-graph to produce a structure-aware embedding for sketches. Extensive experiments on the QuickDraw dataset exhibit superior performance over state-of-the-art methods, surpassing them by over 4%. Ablative studies further demonstrate the effectiveness of the proposed structural graph for both inter-class and intra-class feature discrimination. Code is available at: https://github.com/yanglan0225/s3net.

In this paper we propose a sequential learning framework for Domain Generalization (DG), the problem of training a model that is robust to domain shift by design. Various DG approaches have been proposed with different motivating intuitions, but they typically optimize for a single step of domain generalization – training on one set of domains and generalizing to one other. Our sequential learning is inspired by the idea of lifelong learning, where accumulated experience means that learning the $n^{th}$ thing becomes easier than the $1^{st}$ thing. In DG this means encountering a sequence of domains and at each step training to maximise performance on the next domain. The performance at domain $n$ then depends on the previous $n-1$ learning problems. Thus backpropagating through the sequence means optimizing performance not just for the next domain, but all following domains. Training on all such sequences of domains provides dramatically more ‘practice’ for a base DG learner compared to existing approaches, thus improving performance on a true testing domain. This strategy can be instantiated for different base DG algorithms, but we focus on its application to the recently proposed Meta-Learning Domain Generalization (MLDG). We show that for MLDG it leads to a simple-to-implement and fast algorithm that provides consistent performance improvement on a variety of DG benchmarks.

Most existing studies on unsupervised domain adaptation (UDA) assume that each domain's training samples come with domain labels (e.g., painting, photo). Samples from each domain are assumed to follow the same distribution and the domain labels are exploited to learn domain-invariant features via feature alignment. However, such an assumption often does not hold true: there often exist numerous finer-grained domains (e.g., dozens of modern painting styles have been developed, each differing dramatically from those of the classic styles). Therefore, forcing feature distribution alignment across each artificially-defined and coarse-grained domain can be ineffective. In this paper, we address both single-source and multi-source UDA from a completely different perspective, which is to view each instance as a fine domain. Feature alignment across domains is thus redundant. Instead, we propose to perform dynamic instance domain adaptation (DIDA). Concretely, a dynamic neural network with adaptive convolutional kernels is developed to generate instance-adaptive residuals to adapt domain-agnostic deep features to each individual instance. This enables a shared classifier to be applied to both source and target domain data without relying on any domain annotation. Further, instead of imposing intricate feature alignment losses, we adopt a simple semi-supervised learning paradigm using only a cross-entropy loss for both labeled source and pseudo labeled target data. Our model, dubbed DIDA-Net, achieves state-of-the-art performance on several commonly used single-source and multi-source UDA datasets including Digits, Office-Home, DomainNet, Digit-Five, and PACS.

Deep image-based modeling has received a lot of attention in recent years, yet the parallel problem of sketch-based modeling has only been briefly studied, often as a potential application. In this work, for the first time, we identify the main differences between sketch and image inputs: (i) style variance, (ii) imprecise perspective, and (iii) sparsity. We discuss why each of these differences can pose a challenge, and even make a certain class of image-based methods inapplicable. We study alternative solutions to address each of the differences. By doing so, we draw out a few important insights: (i) sparsity commonly results in an incorrect prediction of foreground versus background, (ii) diversity of human styles, if not taken into account, can lead to very poor generalization properties, and finally (iii) unless a dedicated sketching interface is used, one cannot expect sketches to match a perspective of a fixed viewpoint. Finally, we compare a set of representative deep single-image modeling solutions and show how their performance can be improved to tackle sketch input by taking into consideration the identified critical differences.

Designing real and virtual garments is becoming extremely demanding with rapidly changing fashion trends and the increasing need for synthesizing realistically dressed digital humans for various applications. However, traditionally designing real and virtual garments has been time-consuming. Sketch-based modeling aims to bring the ease and immediacy of drawing to the 3D world thereby motivating faster iterations. We propose a novel sketch-based garment modeling framework specifically targeted to synchronize with the iterative process of garment ideation, e.g., adding or removing details from different views in each iteration. At the core of our learning-based approach is a view-aware feature aggregation module that fuses the features from the latest sketch with the thus far aggregated features to effectively refine the generated 3D shape. We evaluate our approach on a wide variety of garment types and iterative refinement scenarios. We also provide comparisons to alternative feature aggregation methods and demonstrate favorable results. The code is available at https://github.com/pinakinathc/multiviewsketch-garment.

Existing Temporal Action Detection (TAD) methods typically take a pre-processing step in converting an input varying-length video into a fixed-length snippet representation sequence, before temporal boundary estimation and action classification. This pre-processing step would temporally downsample the video, reducing the inference resolution and hampering the detection performance in the original temporal resolution. In essence, this is due to a temporal quantization error introduced during the resolution downsampling and recovery. This could negatively impact the TAD performance, but is largely ignored by existing methods. To address this problem, in this work we introduce a novel model-agnostic post-processing method without model redesign and retraining. Specifically, we model the start and end points of action instances with a Gaussian distribution for enabling temporal boundary inference at a sub-snippet level. We further introduce an efficient Taylor-expansion based approximation, dubbed Gaussian Approximated Post-processing (GAP). Extensive experiments demonstrate that our GAP can consistently improve a wide variety of pre-trained off-the-shelf TAD models on the challenging ActivityNet (+0.2% to 0.7% in average mAP) and THUMOS (+0.2% to 0.5% in average mAP) benchmarks. Such performance gains are already significant and highly comparable to those achieved by novel model designs. Also, GAP can be integrated with model training for further performance gain. Importantly, GAP enables lower temporal resolutions for more efficient inference, facilitating low-resource applications. The code will be available at https://github.com/sauradip/GAP
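A small sketch can show why a Gaussian assumption plus a Taylor expansion gives sub-snippet precision. The code below is a generic second-order refinement of a discrete peak using finite differences of log-scores; it is an assumed illustration of the idea, not the authors' GAP code, and `refine_peak` and the toy score curve are hypothetical.

```python
import math


def refine_peak(scores):
    """Refine the argmax of positive per-snippet boundary scores to sub-snippet precision.

    If the scores follow a Gaussian around the true boundary, their logarithm is
    quadratic, so a second-order Taylor expansion around the discrete peak gives
    the offset -f'/f'' to the continuous maximum.
    """
    i = max(range(len(scores)), key=scores.__getitem__)
    if i == 0 or i == len(scores) - 1:
        return float(i)  # no neighbours on both sides, keep the discrete peak
    left, centre, right = (math.log(scores[j]) for j in (i - 1, i, i + 1))
    first = (right - left) / 2.0             # central first derivative
    second = right - 2.0 * centre + left     # central second derivative
    if second >= 0:
        return float(i)  # not a proper local maximum in log space
    return i - first / second


# Toy boundary scores sampled from a Gaussian centred at snippet position 3.3.
true_boundary, sigma = 3.3, 1.5
scores = [math.exp(-((t - true_boundary) ** 2) / (2 * sigma ** 2)) for t in range(8)]
refined = refine_peak(scores)
```

The discrete argmax here is snippet 3, while `refined` recovers the underlying position between snippets 3 and 4, which is the quantization error the abstract refers to.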

This paper, for the very first time, introduces human sketches to the landscape of XAI (Explainable Artificial Intelligence). We argue that sketch as a "human-centred" data form, represents a natural interface to study explainability. We focus on cultivating sketch-specific explainability designs. This starts by identifying strokes as a unique building block that offers a degree of flexibility in object construction and manipulation impossible in photos. Following this, we design a simple explainability-friendly sketch encoder that accommodates the intrinsic properties of strokes: shape, location, and order. We then move on to define the first ever XAI task for sketch, that of stroke location inversion (SLI). Just as we have heat maps for photos, and correlation matrices for text, SLI offers an explainability angle to sketch in terms of asking a network how well it can recover stroke locations of an unseen sketch. We offer qualitative results for readers to interpret as snapshots of the SLI process in the paper, and as GIFs on the project page. A minor but interesting note is that thanks to its sketch-specific design, our sketch encoder also yields the best sketch recognition accuracy to date while having the smallest number of parameters. The code is available at https://sketchxai.github.io.

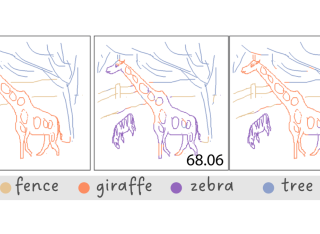

We present the first one-shot personalized sketch segmentation method. We aim to segment all sketches belonging to the same category provisioned with a single sketch with a given part annotation while (i) preserving the parts semantics embedded in the exemplar, and (ii) being robust to input style and abstraction. We refer to this scenario as personalized. With that, we importantly enable a much-desired personalization capability for downstream fine-grained sketch analysis tasks. To train a robust segmentation module, we deform the exemplar sketch to each of the available sketches of the same category. Our method generalizes to sketches not observed during training. Our central contribution is a sketch-specific hierarchical deformation network. Given a multi-level sketch-strokes encoding obtained via a graph convolutional network, our method estimates rigid-body transformation from the target to the exemplar, on the upper level. Finer deformation from the exemplar to the globally warped target sketch is further obtained through stroke-wise deformations, on the lower level. Both levels of deformation are guided by mean squared distances between the keypoints learned without supervision, ensuring that the stroke semantics are preserved. We evaluate our method against the state-of-the-art segmentation and perceptual grouping baselines re-purposed for the one-shot setting and against two few-shot 3D shape segmentation methods. We show that our method outperforms all the alternatives by more than 10% on average. Ablation studies further demonstrate that our method is robust to personalization: changes in input part semantics and style differences.

3D shape modeling is labor-intensive and time-consuming and requires years of expertise. Recently, 2D sketches and text inputs were considered as conditional modalities to 3D shape generation networks to facilitate 3D shape modeling. However, text does not contain enough fine-grained information and is more suitable to describe a category or appearance rather than geometry, while 2D sketches are ambiguous, and depicting complex 3D shapes in 2D again requires extensive practice. Instead, we explore virtual reality sketches that are drawn directly in 3D. We assume that the sketches are created by novices, without any art training, and aim to reconstruct physically-plausible 3D shapes. Since such sketches are potentially ambiguous, we tackle the problem of the generation of multiple 3D shapes that follow the input sketch structure. Limited in the size of the training data, we carefully design our method, training the model step-by-step and leveraging multi-modal 3D shape representation. To guarantee the plausibility of generated 3D shapes we leverage the normalizing flow that models the distribution of the latent space of 3D shapes. To encourage the fidelity of the generated 3D models to an input sketch, we propose a dedicated loss that we deploy at different stages of the training process. We plan to make our code publicly available.

Existing temporal action detection (TAD) methods rely on large training data including segment-level annotations, limited to recognizing previously seen classes alone during inference. Collecting and annotating a large training set for each class of interest is costly and hence unscalable. Zero-shot TAD (ZS-TAD) resolves this obstacle by enabling a pre-trained model to recognize any unseen action classes. Meanwhile, ZS-TAD is also much more challenging with significantly less investigation. Inspired by the success of zero-shot image classification aided by vision-language (ViL) models such as CLIP, we aim to tackle the more complex TAD task. An intuitive method is to integrate an off-the-shelf proposal detector with CLIP style classification. However, due to the sequential localization (e.g., proposal generation) and classification design, it is prone to localization error propagation. To overcome this problem, in this paper we propose a novel zero-Shot Temporal Action detection model via Vision-LanguagE prompting (STALE). Such a novel design effectively eliminates the dependence between localization and classification by breaking the route for error propagation in-between. We further introduce an interaction mechanism between classification and localization for improved optimization. Extensive experiments on standard ZS-TAD video benchmarks show that our STALE significantly outperforms state-of-the-art alternatives. Besides, our model also yields superior results on supervised TAD over recent strong competitors. The PyTorch implementation of STALE is available on https://github.com/sauradip/STALE.

Existing temporal action detection (TAD) methods rely on generating an overwhelmingly large number of proposals per video. This leads to complex model designs due to proposal generation and/or per-proposal action instance evaluation and the resultant high computational cost. In this work, for the first time, we propose a proposal-free Temporal Action detection model via Global Segmentation mask (TAGS). Our core idea is to learn a global segmentation mask of each action instance jointly at the full video length. The TAGS model differs significantly from the conventional proposal-based methods by focusing on global temporal representation learning to directly detect local start and end points of action instances without proposals. Further, by modeling TAD holistically rather than locally at the individual proposal level, TAGS needs a much simpler model architecture with lower computational cost. Extensive experiments show that despite its simpler design, TAGS outperforms existing TAD methods, achieving new state-of-the-art performance on two benchmarks. Importantly, it is ~20x faster to train and ~1.6x more efficient for inference. Our PyTorch implementation of TAGS is available at https://github.com/sauradip/TAGS.
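To illustrate how start and end points can be read off a temporal mask without proposals, here is a minimal decoding sketch. It assumes a one-dimensional mask of per-snippet foreground probabilities and a fixed threshold of 0.5; both the function name and the thresholding rule are illustrative assumptions rather than the decoding used in the TAGS repository.

```python
def mask_to_segments(mask, threshold=0.5):
    """Turn a 1-D foreground mask into (start, end) snippet index pairs.

    Each maximal run of values >= threshold becomes one detected segment,
    with inclusive start and end indices.
    """
    segments = []
    start = None
    for t, value in enumerate(mask):
        if value >= threshold and start is None:
            start = t                      # a run begins
        elif value < threshold and start is not None:
            segments.append((start, t - 1))  # the run ended at the previous snippet
            start = None
    if start is not None:
        segments.append((start, len(mask) - 1))  # run reaches the end of the video
    return segments


# Hypothetical mask over 9 snippets containing two action instances.
mask = [0.1, 0.2, 0.8, 0.9, 0.7, 0.3, 0.1, 0.6, 0.9]
segments = mask_to_segments(mask)
```

Because the boundaries fall out of a single pass over the mask, no candidate proposals need to be generated or scored individually.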

In this paper we study, for the first time, the problem of fine-grained sketch-based 3D shape retrieval. We advocate the use of sketches as a fine-grained input modality to retrieve 3D shapes at instance-level - e.g., given a sketch of a chair, we set out to retrieve a specific chair from a gallery of all chairs. Fine-grained sketch-based 3D shape retrieval (FG-SBSR) has not been possible till now due to a lack of datasets that exhibit one-to-one sketch-3D correspondences. The first key contribution of this paper is two new datasets, consisting of a total of 4,680 sketch-3D pairings from two object categories. Even with the datasets, FG-SBSR is still highly challenging because (i) the inherent domain gap between 2D sketch and 3D shape is large, and (ii) retrieval needs to be conducted at the instance level instead of the coarse category level matching as in traditional SBSR. Thus, the second contribution of the paper is the first cross-modal deep embedding model for FG-SBSR, which specifically tackles the unique challenges presented by this new problem. Core to the deep embedding model is a novel cross-modal view attention module which automatically computes the optimal combination of 2D projections of a 3D shape given a query sketch.
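The idea of combining 2D projections under the guidance of a query sketch can be sketched as attention over views. The version below uses plain dot-product scores and a softmax, which is an assumption on our part: the paper's module is learned, and the feature vectors and function name here are hypothetical.

```python
import math


def view_attention(query, views):
    """Weight each view feature by its similarity to the query sketch feature.

    Returns the softmax weights and the weighted combination of the views.
    """
    scores = [sum(q * v for q, v in zip(query, view)) for view in views]
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]  # shift for numerical stability
    total = sum(exps)
    weights = [e / total for e in exps]
    fused = [
        sum(w * view[d] for w, view in zip(weights, views))
        for d in range(len(query))
    ]
    return weights, fused


# Hypothetical 2-D features: one query sketch and three rendered views of a shape.
query = [1.0, 0.0]
views = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
weights, fused = view_attention(query, views)
```

The view that agrees most with the sketch dominates the fused shape descriptor, so a sketch drawn from the side mostly compares against side-like projections.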

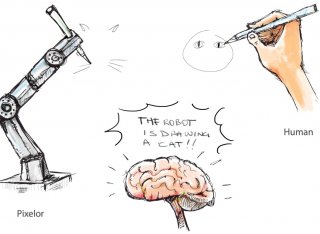

We present the first competitive drawing agent Pixelor that exhibits human-level performance at a Pictionary-like sketching game, where the participant whose sketch is recognized first is a winner. Our AI agent can autonomously sketch a given visual concept, and achieve a recognizable rendition as quickly or faster than a human competitor. The key to victory for the agent’s goal is to learn the optimal stroke sequencing strategies that generate the most recognizable and distinguishable strokes first. Training Pixelor is done in two steps. First, we infer the stroke order that maximizes early recognizability of human training sketches. Second, this order is used to supervise the training of a sequence-to-sequence stroke generator. Our key technical contributions are a tractable search of the exponential space of orderings using neural sorting; and an improved Seq2Seq Wasserstein (S2S-WAE) generator that uses an optimal-transport loss to accommodate the multi-modal nature of the optimal stroke distribution. Our analysis shows that Pixelor is better than the human players of the Quick, Draw! game, under both AI and human judging of early recognition. To analyze the impact of human competitors’ strategies, we conducted a further human study with participants being given unlimited thinking time and training in early recognizability by feedback from an AI judge. The study shows that humans do gradually improve their strategies with training, but overall Pixelor still matches human performance. The code and the dataset are available at http://sketchx.ai/pixelor.

Existing temporal action detection (TAD) methods rely on a large amount of training data with segment-level annotations. Collecting and annotating such a training set is thus highly expensive and unscalable. Semi-supervised TAD (SS-TAD) alleviates this problem by leveraging unlabeled videos freely available at scale. However, SS-TAD is also a much more challenging problem than supervised TAD, and consequently much under-studied. Prior SS-TAD methods directly combine an existing proposal-based TAD method and an SSL method. Due to their sequential localization (e.g., proposal generation) and classification design, they are prone to proposal error propagation. To overcome this limitation, in this work we propose a novel Semi-supervised Temporal action detection model based on PropOsal-free Temporal mask (SPOT) with a parallel localization (mask generation) and classification architecture. Such a novel design effectively eliminates the dependence between localization and classification by cutting off the route for error propagation in-between. We further introduce an interaction mechanism between classification and localization for prediction refinement, and a new pretext task for self-supervised model pre-training. Extensive experiments on two standard benchmarks show that our SPOT outperforms state-of-the-art alternatives, often by a large margin. The PyTorch implementation of SPOT is available at https://github.com/sauradip/SPOT

In this paper, for the first time, we investigate the problem of generating 3D shapes from professional 2D sketches via deep learning. We target sketches done by professional artists, as these sketches are likely to contain more details than the ones produced by novices, and thus the reconstruction from such sketches poses a higher demand on the level of detail in the reconstructed models. This is importantly different to previous work, where the training and testing were conducted on either synthetic sketches or sketches done by novices. Novice sketches often depict shapes that are physically unrealistic, while models trained with synthetic sketches could not cope with the level of abstraction and style found in real sketches. To address this problem, we collected the first large-scale dataset of professional sketches, where each sketch is paired with a reference 3D shape, with a total of 1,500 professional sketches collected across 500 3D shapes. The dataset is available at http://sketchx.ai/downloads/. We introduce two bespoke designs within a deep adversarial network to tackle the imprecision of human sketches and the unique figure/ground ambiguity problem inherent to sketch-based reconstruction. We show that existing 3D shape generation methods designed for images cannot be naively applied to our problem, and demonstrate the effectiveness of our method both qualitatively and quantitatively.

We present the first one-shot personalized sketch segmentation method. We aim to segment all sketches belonging to the same category, provided with a single exemplar sketch with a given part annotation, while (i) preserving the part semantics embedded in the exemplar, and (ii) being robust to input style and abstraction. We refer to this scenario as personalized. With that, we importantly enable a much-desired personalization capability for downstream fine-grained sketch analysis tasks. To train a robust segmentation module, we deform the exemplar sketch to each of the available sketches of the same category. Our method generalizes to sketches not observed during training. Our central contribution is a sketch-specific hierarchical deformation network. Given a multi-level sketch-strokes encoding obtained via a graph convolutional network, our method estimates rigid-body transformation from the target to the exemplar, on the upper level. Finer deformation from the exemplar to the globally warped target sketch is further obtained through stroke-wise deformations, on the lower level. Both levels of deformation are guided by mean squared distances between the keypoints learned without supervision, ensuring that the stroke semantics are preserved. We evaluate our method against the state-of-the-art segmentation and perceptual grouping baselines re-purposed for the one-shot setting and against two few-shot 3D shape segmentation methods. We show that our method outperforms all the alternatives by more than 10% on average. Ablation studies further demonstrate that our method is robust to personalization: changes in input part semantics and style differences.
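The guidance signal mentioned above, a mean squared distance between keypoints, can be written down in a few lines. This is a minimal sketch assuming that keypoints are 2D points already put in one-to-one correspondence between the deformed exemplar and the target; the function name and the sample coordinates are hypothetical, and the abstract does not specify the exact form of the loss used in the paper.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def mean_squared_keypoint_distance(
    exemplar_kp: Sequence[Point],
    target_kp: Sequence[Point],
) -> float:
    """Average squared Euclidean distance between matched 2D keypoints.

    Assumes the i-th exemplar keypoint corresponds to the i-th target keypoint.
    """
    if len(exemplar_kp) != len(target_kp) or not exemplar_kp:
        raise ValueError("keypoint lists must be non-empty and of equal length")
    total = sum(
        (ex - tx) ** 2 + (ey - ty) ** 2
        for (ex, ey), (tx, ty) in zip(exemplar_kp, target_kp)
    )
    return total / len(exemplar_kp)


# Hypothetical keypoints: squared distances are 1, 4 and 4, so the mean is 3.0.
exemplar = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]
target = [(0.0, 1.0), (1.0, 2.0), (3.0, 1.0)]
loss = mean_squared_keypoint_distance(exemplar, target)
print(loss)
```

A deformation that moves the exemplar keypoints closer to their counterparts on the target lowers this value, which is the sense in which such a distance can guide both the rigid and the stroke-wise deformation levels.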

Deep image-based modeling has received a lot of attention in recent years. Sketch-based modeling in particular has gained popularity given the ubiquitous nature of touchscreen devices. In this paper, we (i) study and compare diverse single-image reconstruction methods on sketch input, comparing the different 3D shape representations: multi-view, voxel- and point-cloud-based, mesh-based and implicit ones; and (ii) analyze the main challenges and requirements of sketch-based modeling systems. We introduce the regression loss and provide two variants of its formulation for the two most promising 3D shape representations: point clouds and signed distance functions. We show that this loss can increase general reconstruction accuracy, and the view- and style-robustness of the reconstruction methods. Moreover, we demonstrate that this loss can benefit the disentanglement of the latent space into view-invariant and view-specific information, resulting in further improved performance. To address the figure-ground ambiguity typical of sparse freehand sketches, we propose a two-branch architecture that exploits sparse user labeling. We hope that our work will inform future research on sketch-based modeling.

The growth of free online 3D shape collections has driven research on 3D retrieval. There has, however, been active debate on (i) what the best input modality is to trigger retrieval, and (ii) the ultimate usage scenario for such retrieval. In this paper, we offer a different perspective towards answering these questions: we study the use of 3D sketches as an input modality and advocate a VR scenario where retrieval is conducted. Thus, the ultimate vision is that users can freely retrieve a 3D model by air-doodling in a VR environment. As a first stab at this new 3D VR-sketch to 3D shape retrieval problem, we make four contributions. First, we code a VR utility to collect 3D VR-sketches and conduct retrieval. Second, we collect the first set of 167 3D VR-sketches on two shape categories from ModelNet. Third, we propose a novel approach to generate a synthetic dataset of human-like 3D sketches of different abstraction levels to train deep networks. Finally, we compare the common multi-view and volumetric approaches: we show that, in contrast to 3D shape to 3D shape retrieval, volumetric point-based approaches exhibit superior performance on 3D sketch to 3D shape retrieval due to the sparse and abstract nature of 3D VR-sketches. We believe these contributions will collectively serve as enablers for future attempts at this problem. The VR interface, code and datasets are available at https://tinyurl.com/3DSketch3DV.

We present the first fine-grained dataset of 1,497 3D VR sketch and 3D shape pairs for the chair category, with large shape diversity. Our dataset supports the recent trend in the sketch community on fine-grained data analysis, and extends it to an actively developing 3D domain. We argue for the most convenient sketching scenario where the sketch consists of sparse lines and does not require any sketching skills, prior training or time-consuming accurate drawing. We then, for the first time, study the scenario of fine-grained 3D VR sketch to 3D shape retrieval, as a novel VR sketching application and a proving ground to draw out generic insights to inform future research. By experimenting with carefully selected combinations of design factors on this new problem, we draw important conclusions to help follow-on work. We hope our dataset will enable other novel applications, especially those that require a fine-grained angle such as fine-grained 3D shape reconstruction. The dataset is available at tinyurl.com/VRSketch3DV21.

Recent text-to-image (T2I) generative models allow for high-quality synthesis following either text instructions or visual examples. Despite their capabilities, these models face limitations in creating new, detailed creatures within specific categories (e.g., virtual dog or bird species), which are valuable in digital asset creation and biodiversity analysis. To bridge this gap, we introduce a novel task, Virtual Creatures Generation: Given a set of unlabeled images of the target concepts (e.g., 200 bird species), we aim to train a T2I model capable of creating new, hybrid concepts within diverse backgrounds and contexts. We propose a new method called DreamCreature, which identifies and extracts the underlying sub-concepts (e.g., body parts of a specific species) in an unsupervised manner. The T2I thus adapts to generate novel concepts (e.g., new bird species) with faithful structures and photorealistic appearance by seamlessly and flexibly composing learned sub-concepts. To enhance sub-concept fidelity and disentanglement, we extend the textual inversion technique by incorporating an additional projector and tailored attention loss regularization. Extensive experiments on two fine-grained image benchmarks demonstrate the superiority of DreamCreature over prior methods in both qualitative and quantitative evaluation. Ultimately, the learned sub-concepts facilitate diverse creative applications, including innovative consumer product designs and nuanced property modifications.

Rising concerns about privacy and anonymity preservation of deep learning models have facilitated research in data-free learning (DFL). For the first time, we identify that for data-scarce tasks like Sketch-Based Image Retrieval (SBIR), where the difficulty in acquiring paired photos and hand-drawn sketches limits data-dependent cross-modal learning algorithms, DFL can prove to be a much more practical paradigm. We thus propose Data-Free (DF)-SBIR, where, unlike existing DFL problems, pre-trained, single-modality classification models have to be leveraged to learn a cross-modal metric-space for retrieval without access to any training data. The widespread availability of pre-trained classification models, along with the difficulty in acquiring paired photo-sketch datasets for SBIR justify the practicality of this setting. We present a methodology for DF-SBIR, which can leverage knowledge from models independently trained to perform classification on photos and sketches. We evaluate our model on the Sketchy, TU-Berlin, and QuickDraw benchmarks, designing a variety of baselines based on state-of-the-art DFL literature, and observe that our method surpasses all of them by significant margins. Our method also achieves mAPs competitive with data-dependent approaches, all the while requiring no training data. Implementation is available at https://github.com/abhrac/data-free-sbir.

The problem of sketch semantic segmentation is far from being solved. Although existing methods exhibit near-saturating performance on simple sketches with high recognisability, they suffer serious setbacks when the target sketches are products of an imaginative process with a high degree of creativity. We hypothesise that human creativity, being highly individualistic, induces a significant shift in the distribution of sketches, leading to poor model generalisation. This hypothesis, backed by empirical evidence, opens the door for a solution that explicitly disentangles creativity while learning sketch representations. We materialise this by crafting a learnable creativity estimator that assigns a scalar score of creativity to each sketch. Building on this, we introduce CreativeSeg, a learning-to-learn framework that leverages the estimator in order to learn a creativity-agnostic representation, and eventually the downstream semantic segmentation task. We empirically verify the superiority of CreativeSeg on the recent "Creative Birds" and "Creative Creatures" creative sketch datasets. Through a human study, we further strengthen the case that the learned creativity score does indeed have a positive correlation with creativity as subjectively judged by humans. Code is available at https://github.com/PRIS-CV/Sketch-CS.

In the fashion domain, there exists a variety of vision-and-language (V+L) tasks, including cross-modal retrieval, text-guided image retrieval, multi-modal classification, and image captioning. They differ drastically in each individual input/output format and dataset size. It has been common to design a task-specific model and fine-tune it independently from a pre-trained V+L model (e.g., CLIP). This results in parameter inefficiency and inability to exploit inter-task relatedness. To address such issues, we propose a novel FAshion-focused Multi-task Efficient learning method for Vision-and-Language tasks (FAME-ViL) in this work. Compared with existing approaches, FAME-ViL applies a single model for multiple heterogeneous fashion tasks, therefore being much more parameter-efficient. It is enabled by two novel components: (1) a task-versatile architecture with cross-attention adapters and task-specific adapters integrated into a unified V+L model, and (2) a stable and effective multi-task training strategy that supports learning from heterogeneous data and prevents negative transfer. Extensive experiments on four fashion tasks show that our FAME-ViL can save 61.5% of parameters over alternatives, while significantly outperforming the conventional independently trained single-task models. Code is available at https://github.com/BrandonHanx/FAME-ViL.

This paper is about fitting shapes to the regions that segment an image, and about some applications that rely on the abstraction this offers. The novelty lies in three areas: (1) we fit a shape drawn from a selection of shape families, not just one class of shape, using a supervised classifier; (2) we use results from the classifier to match photographs and artwork of particular objects using a few qualitative shapes, which overcomes the significant differences between photographs and paintings; (3) we further use the shape classifier to process photographs into abstract synthetic art which, so far as we know, is novel too. Thus we use our shape classifier in both discriminative (matching) and generative (image synthesis) tasks. We conclude that the level of abstraction offered by our shape classifier is novel and useful.