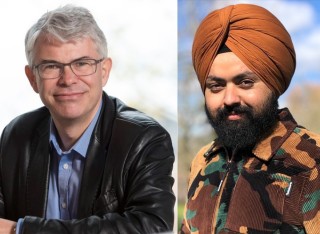

Dr Arshdeep Singh

Academic and research departments

Centre for Vision, Speech and Signal Processing (CVSSP), School of Computer Science and Electronic Engineering, Institute for Sustainability.

Biography

Arshdeep Singh is employed as a Research Fellow in the AI Hub in Generative Models, King's College London (KCL). He is a visiting researcher at the Centre for Vision Speech and Signal Processing (CVSSP), and a Sustainability Fellow at the Institute for Sustainability, University of Surrey.

Previously, he was employed as a Research Fellow on the project “AI for Sound”, funded through an Established Career Fellowship awarded by the Engineering and Physical Sciences Research Council (EPSRC) to Prof Mark Plumbley (Principal Investigator). In May 2023, Arshdeep was selected as an Early Career Acoustic Champion for AI within the EPSRC-funded UK Acoustics Network, UKAN+.

Arshdeep completed his PhD at IIT Mandi, India. His research focuses on designing machine learning frameworks for audio scene classification and on compressing neural networks. During his PhD, he worked on sound-based health monitoring of industrial machines as part of an internship at Intel, Bangalore. Earlier, he completed his M.E. at Panjab University, India, where he was awarded a gold medal. He has also worked as a Project Fellow at CSIR-CSIO, Chandigarh.

Areas of specialism

University roles and responsibilities

- Fire warden

Supervision

Postgraduate research supervision

MSc students:

(Co-supervisor, Primary Supervisor: Prof Mark D Plumbley)

Soham Bhattacharya, Dissertation title: Efficient Convolutional Neural Networks for Audio Classification.

Bars Szegedi, Dissertation title: Classification of Sounds Heard at Home using Convolutional Neural Networks.

Undergraduate (Y3) Students:

(Co-supervisor, Primary Supervisor: Prof Mark D Plumbley)

Kristaps Redmers, Dissertation title: Visualising Soundscapes using Machine Learning, website: www.soundseek.pro/

Yan-Ping Liao, Dissertation title: Recognizing Sound Events in the Home or Workplace.

Teaching

Teaching assistantship (tutorials), 2023: EEE3008 Fundamentals of Digital Signal Processing (DSP).

Sustainable development goals

My research interests are related to the following:

Publications

Highlights

For a full list of publications, please visit https://sites.google.com/view/arshdeep-singh/home/publications?authuser=0

Convolutional neural networks (CNNs) are commonplace in high-performing solutions to many real-world problems, such as audio classification. CNNs have many parameters and filters, some of which have a larger impact on performance than others. Networks may therefore contain many unnecessary filters that increase computation and memory requirements while providing limited performance benefits. To make CNNs more efficient, we propose a pruning framework that eliminates filters with the highest "commonality". We measure this commonality using the graph-theoretic concept of "centrality". We hypothesise that a filter with high centrality should be eliminated, as it represents commonality and can be replaced by other filters without greatly affecting the network's performance. The proposed framework is evaluated experimentally on acoustic scene classification and audio tagging. On the DCASE 2021 Task 1A baseline network, our proposed method reduces computations per inference by 71% with 50% fewer parameters, at a cost of less than two percentage points in accuracy compared to the original network. For large-scale CNNs such as PANNs designed for audio tagging, our method reduces computations per inference by 24% with 41% fewer parameters, while slightly improving performance.
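The centrality idea can be illustrated with a small sketch. The abstract does not say how the filter graph is built or which centrality measure is used, so this example assumes a complete graph whose nodes are a layer's flattened filters, with edges weighted by absolute cosine similarity, and it scores each filter by weighted degree centrality. The function names (`cosine_similarity`, `degree_centrality`, `filters_to_keep`) are hypothetical and do not represent the published implementation.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two flattened filters (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def degree_centrality(filters):
    """Weighted degree centrality on a complete graph of filters.

    Assumption: edge weight = |cosine similarity| between two filters, so a
    filter that resembles many others receives a high score.
    """
    n = len(filters)
    scores = [0.0] * n
    for i in range(n):
        for j in range(i + 1, n):
            w = abs(cosine_similarity(filters[i], filters[j]))
            scores[i] += w
            scores[j] += w
    return scores


def filters_to_keep(filters, prune_ratio):
    """Return the indices of filters kept after removing the most central ones."""
    scores = degree_centrality(filters)
    n_prune = int(len(filters) * prune_ratio)
    # Highest centrality first: these filters are treated as the most "common".
    order = sorted(range(len(filters)), key=lambda i: scores[i], reverse=True)
    pruned = set(order[:n_prune])
    return [i for i in range(len(filters)) if i not in pruned]
```

As an example, with the filters `[[1.0, 0.0], [1.0, 0.1], [0.9, 0.0], [0.0, 1.0]]` and a ratio of 0.25, the filter most similar to the others (index 1) is dropped. The roughly orthogonal filter (index 3) survives because it has little in common with the rest. A different centrality measure, such as eigenvector centrality, could be substituted in `degree_centrality` without changing the pruning loop.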

Environmental sound classification (ESC) aims to automatically recognize the environment in which an audio recording was made, such as "urban park" or "city centre". Most existing methods for ESC use hand-crafted time-frequency features, such as the log-mel spectrogram, to represent audio recordings. However, hand-crafted features rely on transformations that are defined beforehand and do not account for variability in the environment caused by differences in recording conditions or recording devices. To overcome this, we present an alternative representation framework that leverages SoundNet, a convolutional neural network pre-trained on a large-scale audio dataset, to represent raw audio recordings. We observe that the representations obtained from the intermediate layers of SoundNet lie in a low-dimensional subspace whose dimensionality is not known in advance. To address this, we use an automatic compact dictionary learning framework that yields the dimensionality of the underlying subspace. The low-dimensional embeddings are then aggregated by late fusion in an ensemble framework to incorporate the hierarchical information learned at the various intermediate layers of SoundNet. We perform an experimental evaluation on the publicly available DCASE 2017 and 2018 ASC datasets. The proposed ensemble framework improves performance by between 1 and 4 percentage points compared to existing time-frequency representations.
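Late fusion combines decisions rather than features. One common form, sketched below, averages the class-probability outputs of separate classifiers, one per intermediate SoundNet layer, and then picks the highest-scoring class. The abstract does not specify the fusion rule, so the weighted-average rule (equal weights by default) and the function name `late_fusion` are assumptions made for illustration.

```python
def late_fusion(layer_probs, weights=None):
    """Fuse per-layer class-probability vectors by a weighted average.

    layer_probs: one probability vector per layer-specific classifier,
                 all with the same number of classes.
    weights:     optional per-layer weights (assumed to sum to 1);
                 equal weights are used if omitted.
    Returns (fused_probabilities, predicted_class_index).
    """
    n_layers = len(layer_probs)
    n_classes = len(layer_probs[0])
    if weights is None:
        weights = [1.0 / n_layers] * n_layers
    fused = [0.0] * n_classes
    for w, probs in zip(weights, layer_probs):
        for c, p in enumerate(probs):
            fused[c] += w * p
    # The predicted scene is the class with the largest fused probability.
    label = max(range(n_classes), key=lambda c: fused[c])
    return fused, label
```

With three layer-wise outputs `[0.6, 0.3, 0.1]`, `[0.2, 0.5, 0.3]` and `[0.3, 0.5, 0.2]`, the fused prediction is class 1, even though the first layer on its own would choose class 0. This is how hierarchical information from several layers can override a single layer's decision.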

This paper presents a novel approach to making convolutional neural networks (CNNs) efficient by reducing their computational cost and memory footprint. Although large-scale CNNs show state-of-the-art performance in many tasks, their high computational cost and large memory footprint make them resource-hungry, so deploying them on resource-constrained devices poses significant challenges. To address this challenge, we propose using quaternion CNNs, where quaternion algebra enables the memory footprint to be reduced. Furthermore, we investigate reducing the memory footprint and computational cost further by pruning the quaternion CNNs. An experimental evaluation on the audio tagging task, which involves classifying 527 audio events from AudioSet, shows that quaternion algebra and pruning together reduce the memory footprint by 90% and the computational cost by 70% compared to the original CNN model, while maintaining similar performance.
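Quaternion CNNs save memory because weights are shared through the Hamilton product. In a common quaternion convolution design, channels are grouped in fours, and a single four-component quaternion weight replaces a 4 × 4 block of independent real weights. The sketch below shows the Hamilton product and compares weight counts under that assumption. It does not reproduce the paper's architecture, and the helper names are hypothetical. Biases are ignored, and the pruning step that produces the reported 90% reduction is not modelled.

```python
def hamilton_product(p, q):
    """Multiply quaternions p = (a1, b1, c1, d1) and q = (a2, b2, c2, d2)."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    )


def conv_weight_count(c_in, c_out, k):
    """Weights in a real-valued k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def quaternion_conv_weight_count(c_in, c_out, k):
    """Weights in a quaternion k x k convolution with the same real channel counts.

    Assumption: channels are grouped into quaternions of 4, and each
    quaternion weight stores 4 real numbers shared via the Hamilton product.
    """
    assert c_in % 4 == 0 and c_out % 4 == 0, "channel counts must be multiples of 4"
    return 4 * (c_in // 4) * (c_out // 4) * k * k
```

For a 3 × 3 layer with 64 input and 128 output channels, `conv_weight_count` gives 73,728 weights and `quaternion_conv_weight_count` gives 18,432. The quaternion form alone therefore cuts convolution weights by a factor of four under these assumptions. The non-commutative product (i·j = k but j·i = −k) is what lets the shared weights still mix the four channel components differently.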

Convolutional neural networks (CNNs) have shown state-of-the-art performance in various applications. However, CNNs are resource-hungry due to their high computational complexity and memory storage requirements. Recent efforts toward computational and memory efficiency in CNNs involve filter pruning methods, which eliminate some of the filters in a CNN based on the “importance” of the filters. Most existing filter pruning methods are either “active”, using a dataset to generate feature maps that quantify filter importance, or “passive”, computing filter importance from the entry-wise norm of the filters or from the similarity among filters without involving data. However, existing passive filter pruning methods eliminate filters with relatively small norms, or similar filters, without considering each filter's significance in producing the node output, which degrades performance. To address this, we present a passive filter pruning method that removes the least significant filters, i.e. those with a relatively small contribution to the output, by incorporating the operator norm of the filters. The proposed pruning method performs better across various CNNs than existing passive filter pruning methods. Compared to existing active filter pruning methods, the proposed method is more efficient while achieving similar performance. The efficacy of the proposed pruning method is evaluated on audio scene classification and audio tagging tasks using various CNN architectures, such as VGGish, DCASE21_Net and PANNs. The proposed pruning method reduces the number of computations and parameters of the unpruned CNNs by at least 40% and 50%, respectively, reducing inference latency while maintaining performance similar to that of the unpruned CNNs.
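Operator-norm scoring can be sketched without any data, which is what makes the method "passive". This example assumes that each filter is reshaped into a 2-D matrix (for instance, input channels × kernel elements) and scored by its spectral norm, the ℓ2 operator norm, which equals the largest singular value. It estimates that value by power iteration on AᵀA. The matrix layout, the choice of the ℓ2 operator norm, and the function names are assumptions, not details taken from the paper.

```python
import math


def spectral_norm(matrix, n_iter=100):
    """Largest singular value of a matrix (list of rows) via power iteration on A^T A."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    # Deterministic start vector; power iteration fails only if it is
    # exactly orthogonal to the top right-singular vector.
    v = [1.0] * n_cols
    for _ in range(n_iter):
        av = [sum(row[j] * v[j] for j in range(n_cols)) for row in matrix]
        atav = [sum(matrix[i][j] * av[i] for i in range(n_rows)) for j in range(n_cols)]
        norm = math.sqrt(sum(x * x for x in atav))
        if norm == 0:
            return 0.0  # zero matrix (or start vector in its null space)
        v = [x / norm for x in atav]
    av = [sum(row[j] * v[j] for j in range(n_cols)) for row in matrix]
    return math.sqrt(sum(x * x for x in av))


def prune_by_operator_norm(filters, prune_ratio):
    """Drop the fraction of filters with the smallest operator norm.

    filters: list of 2-D filters (lists of rows), e.g. shaped
             (in_channels, kernel_elements), which is an assumed layout.
    Returns the indices of the filters that are kept.
    """
    scores = [spectral_norm(f) for f in filters]
    n_prune = int(len(filters) * prune_ratio)
    order = sorted(range(len(filters)), key=lambda i: scores[i])  # weakest first
    pruned = set(order[:n_prune])
    return [i for i in range(len(filters)) if i not in pruned]
```

The operator norm bounds how much a filter can amplify any input, so it reflects a filter's potential contribution to the node output rather than only the size of its entries. For example, `[[1, 1], [1, 1]]` has an entry-wise ℓ1 norm of 4 but an operator norm of 2.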

Although automated audio captioning (AAC) has achieved remarkable performance improvements in recent years, the complexity of AAC models has drawn little attention from the research community. To reduce the number of model parameters, passive filter pruning has been successfully applied to convolutional neural networks (CNNs) in audio classification tasks. However, due to the discrepancy between audio classification and AAC, these pruning methods are not necessarily suitable for captioning. In this work, we investigate the effectiveness of several passive filter pruning approaches on an efficient CNN-Transformer-based AAC architecture. Through extensive experiments, we find that, under the same pruning ratio, pruning from the later convolution blocks gives significantly better performance. Using the norm-based pruning method, our pruned model has 15% fewer parameters than the original model while maintaining similar performance.
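The finding about later blocks can be made concrete with a sketch that applies the same norm-based pruning ratio only to the last few convolution blocks and leaves earlier blocks intact. This example assumes ℓ1-norm ranking of flattened filters. The function `prune_later_blocks` and its parameter `n_later` are hypothetical and are not taken from the paper's code.

```python
def l1_norm(flat_filter):
    """Entry-wise l1 norm of a flattened filter."""
    return sum(abs(w) for w in flat_filter)


def prune_later_blocks(blocks, prune_ratio, n_later):
    """Norm-based pruning restricted to the last `n_later` convolution blocks.

    blocks: list of convolution blocks, each a list of flattened filters.
    Returns, for every block, the indices of the filters that are kept.
    """
    kept = []
    first_pruned = len(blocks) - n_later
    for b, filters in enumerate(blocks):
        idx = list(range(len(filters)))
        if b < first_pruned:
            kept.append(idx)  # early blocks are left untouched
            continue
        n_prune = int(len(filters) * prune_ratio)
        # Smallest-norm filters are treated as least important and removed.
        order = sorted(idx, key=lambda i: l1_norm(filters[i]))
        pruned = set(order[:n_prune])
        kept.append([i for i in idx if i not in pruned])
    return kept
```

Setting `n_later` to the total number of blocks recovers uniform pruning across the network. Comparing different `n_later` values at a fixed `prune_ratio` is one way to reproduce the kind of early-versus-late comparison the abstract describes.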

This paper presents a residential audio dataset to support sound event detection research for smart home applications aimed at promoting wellbeing for older adults. The dataset is constructed by deploying audio recording systems in the homes of 8 participants aged 55-80 years for a 7-day period. Acoustic characteristics are documented through detailed floor plans and construction material information to enable replication of the recording environments for AI model deployment. A novel automated speech removal pipeline is developed, using pretrained audio neural networks to detect and remove segments containing spoken voice while preserving segments containing other sound events. The resulting dataset consists of privacy-compliant audio recordings that accurately capture the soundscapes and activities of daily living within residential spaces. The paper details the dataset creation methodology, the speech removal pipeline utilizing cascaded model architectures, and an analysis of the vocal label distribution to validate the speech removal process. This dataset enables the development and benchmarking of sound event detection models tailored specifically for in-home applications.
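The control flow of a cascaded speech-removal pipeline can be sketched as a sequence of detector stages. Each stage removes the segments it flags as speech, and later stages only see the segments that survive. The detectors here are placeholder callables, for example thresholded speech probabilities from a pretrained audio tagger. The function name `cascade_speech_removal`, the thresholds and the scores in the usage note are hypothetical and do not come from the paper.

```python
def cascade_speech_removal(segments, stages):
    """Run audio segments through speech-detector stages in order.

    segments: segment identifiers (or audio chunks) in recording order.
    stages:   callables returning True when a segment is judged to contain
              speech; each stage only sees segments kept by earlier stages.
    Returns the segments that no stage flagged, in their original order.
    """
    remaining = list(segments)
    for detect_speech in stages:
        remaining = [seg for seg in remaining if not detect_speech(seg)]
    return remaining
```

For example, with made-up speech probabilities `{"s0": 0.9, "s1": 0.1, "s2": 0.2, "s3": 0.05}` from a first model and `{"s0": 0.8, "s1": 0.6, "s2": 0.1, "s3": 0.02}` from a second, and a threshold of 0.5 for each stage, segments `s0` and `s1` are removed and `s2` and `s3` are kept. Running a cheaper detector first would reduce how many segments reach a more expensive one.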