Professor Richard Bowden

Academic and research departments

Centre for Vision, Speech and Signal Processing (CVSSP), School of Computer Science and Electronic Engineering.

About

Biography

Richard Bowden is Professor of Computer Vision and Machine Learning at the University of Surrey, where he leads the Cognitive Vision Group within CVSSP and is Associate Dean for Postgraduate Research within his faculty. His research centres on the use of computer vision to locate, track, understand and learn from humans. He has held over 40 research grants from UK and EU funding bodies, as well as industrially funded projects. These projects cover areas such as cognitive robotics and vision, sign and gesture recognition, lip-reading and nonverbal communication, as well as many topics fundamental to computer vision, such as tracking and detection. His research has been recognised by prizes, plenary talks and media/press coverage, including the Sullivan thesis prize in 2000 and many best paper awards.

To date, he has published over 200 peer-reviewed publications and has served as a program committee member or area chair for ICCV, CVPR, ECCV, BMVC, FG and ICPR, in addition to numerous international workshops and conferences. He is an Associate Editor for the journals Image and Vision Computing and IEEE Transactions on Pattern Analysis and Machine Intelligence (the top journal in his field). He was awarded a Royal Society Leverhulme Trust Senior Research Fellowship in 2013 and is a member of the Royal Society International Exchanges Committee. He was a member of the British Machine Vision Association (BMVA) executive committee and a company director for seven years. He is a member of the BMVA, a senior member of the IEEE and a Fellow of the Higher Education Academy. He was awarded a prestigious Fellowship of the International Association for Pattern Recognition in 2016.

University roles and responsibilities

- Professor of Computer Vision and Machine Learning

Research

Research interests

Richard's research focuses on the use of computer vision to locate, track and understand humans, with specific examples in sign and gesture recognition, activity and action recognition, lip-reading and facial feature tracking, as well as in HCI, cognitive robotics and machine perception, where machines learn to understand and predict humans.

His research into tracking and artificial life received worldwide media coverage, appearing at the British Science Museum and the Minnesota Science Museum.

He is a co-founder of the AI start-up Signapse, which provides real-time photorealistic sign language translation using AI.

Research projects

SignGPT is a UKRI EPSRC Programme Grant focused on the development of an AI-powered translation system capable of unconstrained, bidirectional translation between British Sign Language (BSL) and English. The project will build the first generative predictive transformer for sign language, combining computer vision, sign linguistics, and machine learning. It is led by an interdisciplinary team from the University of Surrey, University of Oxford, and University College London, with direct involvement from Deaf organisations and community partners.

This project received grant funding and additional support from Google's philanthropic arm, Google.org, to develop artificial intelligence (AI) research that paves the way for instant Sign Language translation. Working with Signapse as a delivery partner, the project will translate key websites into Sign Language, boosting digital inclusion for the 600,000 deaf people in the US and UK for whom Sign Language is their first language.

The goal of the IICT flagship is to develop information and communication technologies (ICT) for persons with disabilities. To this end, the flagship targets five applications in the context of accessibility: text simplification, sign language translation, sign language assessment, audio description, and spoken subtitles. Within the flagship, each application constitutes its own subproject, with tight connections between the subprojects through shared technologies such as artificial intelligence techniques.

The project is a Swiss-funded national project, but Surrey is included due to our extensive expertise in AI for sign languages.

The goal of the proposed project SMILE is to pioneer an assessment system for Swiss German Sign Language (Deutschschweizerische Gebärdensprache, DSGS) using automatic sign language recognition technology.

The project tackled the problem of automatically learning to recognise dynamic activity in broadcast footage, with demonstrations on both sign language and more general actions and activities.

Improving investigative capability through the application of science and technology

Dicta-Sign aimed to enable communication between Deaf individuals by promoting the development of natural human-computer interfaces (HCI) for Deaf users. It researched and developed recognition and synthesis systems for sign languages (SLs) at the level of detail necessary for recognising and generating authentic signing. Research outcomes were integrated into three prototypes (a Search-by-Example tool, an SL-to-SL translator and a sign-Wiki).

LiLiR looked at Language Independent Lip Reading from video.

The DIPLECS project aimed to design an Artificial Cognitive System capable of learning and adapting to respond to everyday situations that humans take for granted. The primary demonstration of its capability was providing assistance and advice to the driver of a car. The system learnt by watching how humans act and react while driving, building models of their behaviour and predicting what a driver would do when presented with a specific driving scenario.

In the COSPAL architecture, we combined techniques from artificial intelligence (AI) for symbolic reasoning with learning in artificial neural networks (ANNs) for the association of percepts and states. We established feedback loops through the continuous and the symbolic parts of the system, allowing perception-action feedback at several levels. After an initial bootstrapping phase, incremental learning techniques were used to train the system, allowing adaptation and exploration.

The central goal is to build a vision system that can be used in a wider variety of fields and is reusable, by introducing self-adaptation at the level of perception, by providing categorisation capabilities, and by making the knowledge base explicit at the level of reasoning, thereby enabling the knowledge base to be changed. To make these ideas concrete, CogViSys aims to develop a virtual commentator that is able to translate visual information into a textual description.

Indicators of esteem

Spinout Company of the Year: Surrey Business Awards (Signapse AI Ltd) 2022

Outstanding Paper Award: International Conference on Robotics and Automation 2022

Challenge Winner: ChaLearn Sign Spotting Competition 2022

Distinguished Fellowship: British Machine Vision Association 2021

Fellowship: Inaugural Fellow of Asia-Pacific AI Association, FAAIA 2021

Surrey AI Fellowship: core member of Surrey Institute for People-Centred AI 2021

Advisory Board Member: EPSRC CAMERA 2.0 2020-2025

Business Fellowship: Transport Systems Catapult (now Connected Places Catapult) 2018-2019

Awarded Fellow of the International Association for Pattern Recognition in 2016

Member of Royal Society’s International Exchanges Committee 2016-2021

Executive Committee member and theme leader for EPSRC ViiHM Network 2015

TIGA Games award for Makaton Learning Environment with Gamelab UK 2013

Appointed Associate Editor for IEEE Transactions on Pattern Analysis and Machine Intelligence 2013-2019

Royal Society Leverhulme Trust Senior Research Fellowship 2012

Best Paper Award at VISAPP2012

Advisory Board for Springer Advances in Computer Vision and Pattern Recognition 2012-present

General Chair BMVC2012

Outstanding Reviewer Award ICCV 2011

Best Paper Award at IbPRIA2011

Main Track Chair (Computer & Robot Vision) ICPR2012, Japan

Appointed Associate Editor for Image and Vision Computing

Sullivan thesis prize in 2000

Supervision

Postgraduate research supervision

Current:

Oline Ranum, Karahan Sahin, Low Jian He (Leo), Sobhan Asasi, Marshall Thomas, Olliver Cory, Alexandre Symeonidis-Herzig, Georgina Alcolado Nuthall, Rogier Fransen, Anton Pelykh, Ben Canini, Maksym Ivashechkin

Graduated:

Harry Walsh (Graduated 2025), Ryan Wong (Graduated 2025), James Ross (Graduated 2025), Avishkar Saha (Graduated 2024), Ben Saunders (Graduated 2024), Nimet Kaygusuz (Graduated 2023), Salar Arbabi (Graduated 2023), Guillaume Rochette (Graduated 2023), Matthew Vowels (Graduated 2022), Sampo Kuutti (Graduated 2022), Jaime Spencer Martin (Graduated 2022), Celyn Walters (Graduated 2021), Rebecca Allday (Graduated 2021), Cihan Camgoz (Graduated 2020), Oscar Koller (Graduated 2020), Matthew Marter (Graduated 2018), James Elder (Graduated 2017), Oscar Mendez Maldonado (Graduated 2017), Karel Lebada (Graduated 2016), Phil Krejov (Graduated 2016), Brian Holt (Graduated 2014), Simon Hadfield (Graduated 2013), Ashish Gupta (Graduated 2013), Timothy Sheerman-Chase (Graduated 2013), Stephen Moore (Graduated 2012), Olusegun Oshin (Graduated 2011), Dumebi Okwechime (Graduated 2011), Liam Ellis (Graduated 2010), Helen Cooper (Graduated 2010), Andrew Gilbert (Graduated 2008), Nickolas Dowson (Graduated 2006), Antonio Micilotta (Graduated 2005), Pakorn KaewTraKulPong (Graduated 2002).

Teaching

I normally teach C/C++ programming and robotics, but due to a heavy research portfolio I am currently on sabbatical from teaching.

Publications

Recent progress in Sign Language Translation (SLT) has focussed primarily on improving the representational capacity of large language models to incorporate Sign Language features. This work explores an alternative direction: enhancing the geometric properties of skeletal representations themselves. We propose Geo-Sign, a method that leverages the properties of hyperbolic geometry to model the hierarchical structure inherent in sign language kinematics. By projecting skeletal features derived from Spatio-Temporal Graph Convolutional Networks (ST-GCNs) into the Poincaré ball model, we aim to create more discriminative embeddings, particularly for fine-grained motions like finger articulations. We introduce a hyperbolic projection layer, a weighted Fréchet mean aggregation scheme, and a geometric contrastive loss operating directly in hyperbolic space. These components are integrated into an end-to-end translation framework as a regularisation function, to enhance the representations within the language model. This work demonstrates the potential of hyperbolic geometry to improve skeletal representations for Sign Language Translation, improving on SOTA RGB methods while preserving privacy and improving computational efficiency.
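The basic geometric step of projecting Euclidean features into the Poincaré ball can be illustrated with a short sketch. It assumes the standard exponential map at the origin and the standard Poincaré distance for a ball of curvature -c; the function names and the default c = 1 are hypothetical, and this is not the Geo-Sign code (the weighted Fréchet mean and contrastive loss are omitted).

```python
import math


def exp_map_origin(v, c=1.0):
    """Map a tangent vector at the origin into the Poincare ball of curvature -c."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        return [0.0 for _ in v]
    # tanh keeps the result strictly inside the ball of radius 1/sqrt(c).
    scale = math.tanh(math.sqrt(c) * norm) / (math.sqrt(c) * norm)
    return [scale * x for x in v]


def poincare_distance(x, y, c=1.0):
    """Geodesic distance between two points inside the Poincare ball."""
    def sq(u):
        return sum(a * a for a in u)

    diff = sq([a - b for a, b in zip(x, y)])
    denom = (1.0 - c * sq(x)) * (1.0 - c * sq(y))
    return math.acosh(1.0 + 2.0 * c * diff / denom) / math.sqrt(c)
```

A useful sanity check: with c = 1, a tangent vector v lands at geodesic distance 2‖v‖ from the origin, while its Euclidean radius tanh(‖v‖) saturates towards 1. Distances therefore expand rapidly near the boundary, which is what makes hyperbolic space attractive for hierarchical structure.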

Sign Language Translation (SLT) aims to automatically convert visual sign language videos into spoken language text and vice versa. While recent years have seen rapid progress, the true sources of performance improvements often remain unclear. Do reported performance gains come from methodological novelty, or from the choice of a different backbone, training optimizations, hyperparameter tuning, or even differences in the calculation of evaluation metrics? This paper presents a comprehensive study of recent gloss-free SLT models by re-implementing key contributions in a unified codebase. We ensure fair comparison by standardizing preprocessing, video encoders, and training setups across all methods. Our analysis shows that many of the performance gains reported in the literature diminish when models are evaluated under consistent conditions, suggesting that implementation details and evaluation setups play a significant role in determining results. We make the codebase publicly available here to support transparency and reproducibility in SLT research.

There has been significant progress in human image generation in recent years, particularly with the introduction of diffusion models. However, existing methods struggle to produce consistent hand anatomy, and the generated images often lack precise control over hand pose. To address this limitation, we introduce a novel two-stage approach to pose-conditioned human image generation. Firstly, we generate detailed hands and then outpaint the body around those hands. We propose training the hand generator in a multi-task setting to produce both hand images and their corresponding segmentation masks, and employ the trained model in the first stage of generation. An adapted ControlNet model is then used in the second stage to outpaint the body. We introduce a novel blending technique that combines the results of both stages in a coherent way and preserves the hand details. It involves sequential expansion of the outpainted region while fusing the latent representations, to ensure a seamless and cohesive synthesis of the final image. Experimental evaluations demonstrate the superiority of our proposed method over state-of-the-art techniques in both pose accuracy and image quality, as validated on the HaGRID and YouTube-ASL datasets. Our approach not only enhances the quality of the generated hands, but also offers improved control over hand pose, advancing the capabilities of pose-conditioned human image generation. We make the code available.

Photorealistic and controllable human avatars have gained popularity in the research community thanks to rapid advances in neural rendering, providing fast and realistic synthesis tools. However, a limitation of current solutions is the presence of noticeable blurring. To solve this problem, we propose GaussianGAN, an animatable avatar approach developed for photorealistic rendering of people in real-time. We introduce a novel Gaussian splatting densification strategy to build Gaussian points from the surface of cylindrical structures around estimated skeletal limbs. Given the camera calibration, we render an accurate semantic segmentation with our novel view segmentation module. Finally, a UNet generator uses the rendered Gaussian splatting features and the segmentation maps to create photorealistic digital avatars. Our method runs in real-time with a rendering speed of 79 FPS. It outperforms previous methods in terms of visual perception and quality, achieving state-of-the-art pixel fidelity of 32.94 dB on the ZJU Mocap dataset and 33.39 dB on the Thuman4 dataset.
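Pixel fidelity reported in decibels is conventionally peak signal-to-noise ratio (PSNR). The abstract does not name the metric, so the sketch below assumes PSNR on images with values in [0, 1]; the `psnr` helper is illustrative only and operates on flat lists of pixel values.

```python
import math


def psnr(pred, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized flat pixel lists."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0.0:
        return math.inf  # identical images have unbounded PSNR
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Because PSNR is logarithmic in the mean squared error, each additional 1 dB corresponds to roughly a 21% reduction in MSE, so small dB differences between methods are more meaningful than they may appear.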

This work tackles the challenge of continuous sign language segmentation, a key task with huge implications for sign language translation and data annotation. We propose a transformer-based architecture that models the temporal dynamics of signing and frames segmentation as a sequence labeling problem using the Begin-In-Out (BIO) tagging scheme. Our method leverages the HaMeR hand features, and is complemented with 3D angles. Extensive experiments show that our model achieves state-of-the-art results on the DGS Corpus, while our features surpass prior benchmarks on the BSL Corpus.
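Framing segmentation as BIO sequence labelling means a per-frame tag sequence must be turned back into sign boundaries. A minimal decoding sketch, assuming the tags are the strings "B", "I" and "O" and that an "I" with no preceding "B" opens a new segment (a hypothetical tolerance rule, not necessarily the paper's):

```python
def bio_to_segments(tags):
    """Convert a per-frame BIO tag sequence into (start, end) frame spans, end exclusive."""
    segments = []
    start = None
    for i, tag in enumerate(tags):
        if tag == "B":
            # A new sign begins; close any sign still open.
            if start is not None:
                segments.append((start, i))
            start = i
        elif tag == "I":
            if start is None:  # tolerate an 'I' with no preceding 'B'
                start = i
        elif tag == "O":
            if start is not None:
                segments.append((start, i))
                start = None
        else:
            raise ValueError(f"unknown tag {tag!r}")
    if start is not None:
        segments.append((start, len(tags)))
    return segments
```

For example, `["O", "B", "I", "I", "O", "B", "B", "I"]` decodes to `[(1, 4), (5, 6), (6, 8)]`: the explicit "B" tag is what lets two adjacent signs be separated without an intervening "O" frame.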

Sign Language Translation (SLT) aims to convert sign language videos into spoken or written text. While early systems relied on gloss annotations as an intermediate supervision, such annotations are costly to obtain and often fail to capture the full complexity of continuous signing. In this work, we propose a two-phase, dual visual encoder framework for gloss-free SLT, leveraging contrastive visual-language pretraining. During pretraining, our approach employs two complementary visual backbones whose outputs are jointly aligned with each other and with sentence-level text embeddings via a contrastive objective. During the downstream SLT task, we fuse the visual features and input them into an encoder-decoder model. On the Phoenix-2014T benchmark, our dual encoder architecture consistently outperforms its single stream variants and achieves the highest BLEU-4 score among existing gloss-free SLT approaches.
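Contrastive visual-language pretraining typically uses a symmetric InfoNCE loss, in which matching video/text pairs in a batch are pulled together and all other pairings act as negatives. The sketch below shows that generic loss for a single video-text pairing; the temperature of 0.07 and the function names are assumptions, and the paper's exact objective, which also aligns the two visual backbones with each other, is not specified in the abstract.

```python
import math


def _normalise(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def info_nce(video_emb, text_emb, temperature=0.07):
    """Symmetric InfoNCE loss: row i of each list is a matching video/text pair."""
    v = [_normalise(e) for e in video_emb]
    t = [_normalise(e) for e in text_emb]
    n = len(v)
    # Cosine-similarity logits between every video and every sentence.
    logits = [[sum(a * b for a, b in zip(v[i], t[j])) / temperature
               for j in range(n)] for i in range(n)]

    def cross_entropy_diag(mat):
        # Mean cross-entropy with the diagonal entry as the correct class.
        total = 0.0
        for i, row in enumerate(mat):
            m = max(row)
            log_z = m + math.log(sum(math.exp(x - m) for x in row))
            total += log_z - row[i]
        return total / len(mat)

    transposed = [list(col) for col in zip(*logits)]
    # Average the video-to-text and text-to-video directions.
    return 0.5 * (cross_entropy_diag(logits) + cross_entropy_diag(transposed))
```

Correctly aligned pairs give a loss near zero, while swapping the text embeddings gives a large loss, which is the signal that drives the two modalities into a shared space.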

Sign Language Translation (SLT) is a challenging task that aims to generate spoken language sentences from sign language videos. In this paper, we introduce a lightweight, modular SLT framework, Spotter+GPT, that leverages the power of Large Language Models (LLMs) and avoids heavy end-to-end training. Spotter+GPT breaks down the SLT task into two distinct stages. First, a sign spotter identifies individual signs within the input video. The spotted signs are then passed to an LLM, which transforms them into meaningful spoken language sentences. Spotter+GPT eliminates the requirement for SLT-specific training. This significantly reduces computational costs and time requirements. The source code and pretrained weights of the Spotter are available at https://gitlab.surrey.ac.uk/cogvispublic/sign-spotter.

Sign Language Translation (SLT) attempts to convert sign language videos into spoken sentences. However, many existing methods struggle with the disparity between visual and textual representations during end-to-end learning. Gloss-based approaches help to bridge this gap by leveraging structured linguistic information. While gloss-free methods offer greater flexibility and remove the burden of annotation, they require effective alignment strategies. Recent advances in Large Language Models (LLMs) have enabled gloss-free SLT by generating text-like representations from sign videos. In this work, we introduce a novel hierarchical pre-training strategy inspired by the structure of sign language, incorporating pseudo-glosses and contrastive video-language alignment. Our method hierarchically extracts features at frame, segment, and video levels, aligning them with pseudo-glosses and the spoken sentence to enhance translation quality. Experiments demonstrate that our approach improves BLEU-4 and ROUGE scores while maintaining efficiency.
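The three feature granularities mentioned above can be pictured with a toy pooling sketch. It assumes fixed-length, non-overlapping segments and simple mean pooling, both hypothetical choices made for illustration; the abstract does not state how the paper forms segments or aggregates features.

```python
def mean_pool(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def hierarchical_features(frames, segment_len=4):
    """Return (frame, segment, video) level features from per-frame vectors.

    Segments are fixed-length, non-overlapping windows (a hypothetical choice);
    the last window may be shorter.
    """
    segments = [mean_pool(frames[i:i + segment_len])
                for i in range(0, len(frames), segment_len)]
    video = mean_pool(frames)  # pool all frames, so a short last segment is not over-weighted
    return frames, segments, video
```

Each level could then be aligned with a different target: frame or segment features with pseudo-glosses, and the video-level vector with the spoken sentence.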

In natural language processing (NLP) of spoken languages, word embeddings have been shown to be a useful method to encode the meaning of words. Sign languages are visual languages, which require sign embeddings to capture the visual and linguistic semantics of signs. Unlike many common approaches to sign recognition, we focus on explicitly creating sign embeddings that bridge the gap between sign language and spoken language. We propose a learning framework to derive LCC (Learnt Contrastive Concept) embeddings for sign language, a weakly supervised contrastive approach to learning sign embeddings. We train a vocabulary of embeddings that are based on the linguistic labels for sign video. Additionally, we develop a conceptual similarity loss which is able to utilise word embeddings from NLP methods to create sign embeddings that have better sign language to spoken language correspondence. These learnt representations allow the model to automatically localise the sign in time. Our approach achieves state-of-the-art keypoint-based sign recognition performance on the WLASL and BOBSL datasets.

Sign Language Translation (SLT) is a challenging task that aims to generate spoken language sentences from sign language videos, both of which have different grammar and word/gloss order. From a Neural Machine Translation (NMT) perspective, the straightforward way of training translation models is to use sign language phrase-spoken language sentence pairs. However, human interpreters heavily rely on the context to understand the conveyed information, especially for sign language interpretation, where the vocabulary size may be significantly smaller than their spoken language equivalent. Taking direct inspiration from how humans translate, we propose a novel multi-modal transformer architecture that tackles the translation task in a context-aware manner, as a human would. We use the context from previous sequences and confident predictions to disambiguate weaker visual cues. To achieve this we use complementary transformer encoders, namely: (1) A Video Encoder, that captures the low-level video features at the frame-level, (2) A Spotting Encoder, that models the recognized sign glosses in the video, and (3) A Context Encoder, which captures the context of the preceding sign sequences. We combine the information coming from these encoders in a final transformer decoder to generate spoken language translations. We evaluate our approach on the recently published large-scale BOBSL dataset, which contains ∼1.2M sequences, and on the SRF dataset, which was part of the WMT-SLT 2022 challenge. We report significant improvements on state-of-the-art translation performance using contextual information, nearly doubling the reported BLEU-4 scores of baseline approaches.

Automatic Sign Language Translation requires the integration of both computer vision and natural language processing to effectively bridge the communication gap between sign and spoken languages. However, the lack of large-scale training data to support sign language translation means we need to leverage resources from spoken language. We introduce Sign2GPT, a novel framework for sign language translation that utilizes large-scale pretrained vision and language models via lightweight adapters for gloss-free sign language translation. The lightweight adapters are crucial for sign language translation, due to the constraints imposed by limited dataset sizes and the computational requirements when training with long sign videos. We also propose a novel pretraining strategy that directs our encoder to learn sign representations from automatically extracted pseudo-glosses without requiring gloss order information or annotations. We evaluate our approach on two public benchmark sign language translation datasets, namely RWTH-PHOENIX-Weather 2014T and CSL-Daily, and improve on state-of-the-art gloss-free translation performance by a significant margin.

Graph convolutional networks (GCNs) enable end-to-end learning on graph structured data. However, many works assume a given graph structure. When the input graph is noisy or unavailable, one approach is to construct or learn a latent graph structure. These methods typically fix the choice of node degree for the entire graph, which is suboptimal. Instead, we propose a novel end-to-end differentiable graph generator which builds graph topologies where each node selects both its neighborhood and its size. Our module can be readily integrated into existing pipelines involving graph convolution operations, replacing the predetermined or existing adjacency matrix with one that is learned, and optimized, as part of the general objective. As such it is applicable to any GCN. We integrate our module into trajectory prediction, point cloud classification and node classification pipelines resulting in improved accuracy over other structure-learning methods across a wide range of datasets and GCN backbones.

Graph convolutional networks (GCNs) enable end-to-end learning on graph structured data. However, many works assume a given graph structure. When the input graph is noisy or unavailable, one approach is to construct or learn a latent graph structure. These methods typically fix the choice of node degree for the entire graph, which is suboptimal. Instead, we propose a novel end-to-end differentiable graph generator which builds graph topologies where each node selects both its neighborhood and its size. Our module can be readily integrated into existing pipelines involving graph convolution operations, replacing the predetermined or existing adjacency matrix with one that is learned, and optimized, as part of the general objective. As such it is applicable to any GCN. We integrate our module into trajectory prediction, point cloud classification and node classification pipelines resulting in improved accuracy over other structure-learning methods across a wide range of datasets and GCN backbones. We will release the code.
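The graph-generator abstract above describes each node choosing both which neighbours to connect to and how many. The sketch below is a deliberately simplified, non-differentiable illustration of that idea: the per-node neighbourhood sizes `ks` are supplied by hand rather than learned, and `adaptive_knn_adjacency` and `propagate` are hypothetical names, not the paper's module. It shows how such an adjacency would replace a fixed one inside a basic mean-aggregation graph convolution step.

```python
import math


def adaptive_knn_adjacency(points, ks):
    """Directed adjacency where node i links to its ks[i] nearest neighbours.

    Hypothetical illustration: in the paper both the neighbours and their
    number are learned end to end; here k is given per node.
    """
    n = len(points)
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        dists = sorted((math.dist(points[i], points[j]), j)
                       for j in range(n) if j != i)
        for _, j in dists[: ks[i]]:
            adj[i][j] = 1.0
    return adj


def propagate(adj, features):
    """One mean-aggregation step (row-normalised A @ H), as in a simple GCN layer."""
    out = []
    for row in adj:
        deg = sum(row)
        agg = [0.0] * len(features[0])
        for j, w in enumerate(row):
            if w:
                for d, x in enumerate(features[j]):
                    agg[d] += w * x
        out.append([x / deg for x in agg] if deg else agg)
    return out
```

Letting `ks` vary per node means sparse regions can draw on more neighbours while dense regions stay local, which is the motivation the abstract gives for not fixing node degree globally.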

Sign Language Assessment (SLA) tools are useful aids to language learning but remain underdeveloped. Previous work has focused on isolated signs or comparison against a single reference video to assess Sign Languages (SL). This paper introduces a novel SLA tool designed to evaluate the comprehensibility of SL by modelling the natural distribution of human motion. We train our pipeline on data from native signers and evaluate it using SL learners. We compare our results to ratings from a human rater study and find strong correlation between human ratings and our tool. We visually demonstrate our tool's ability to detect anomalous results spatio-temporally, providing actionable feedback to aid in SL learning and assessment.

As robots increasingly coexist with humans, they must navigate complex, dynamic environments rich in visual information and implicit social dynamics, like when to yield or move through crowds. Addressing these challenges requires significant advances in vision-based sensing and a deeper understanding of socio-dynamic factors, particularly in tasks like navigation. To facilitate this, robotics researchers need advanced simulation platforms offering dynamic, photorealistic environments with realistic actors. Unfortunately, most existing simulators fall short, prioritizing geometric accuracy over visual fidelity, and employing unrealistic agents with fixed trajectories and low-quality visuals. To overcome these limitations, we developed a simulator that incorporates three essential elements: (1) photorealistic neural rendering of environments, (2) neurally animated human entities with behaviour management, and (3) an ego-centric robotic agent providing multi-sensor output. By utilizing advanced neural rendering techniques in a dual-NeRF simulator, our system produces high-fidelity, photorealistic renderings of both environments and human entities. Additionally, it integrates a state-of-the-art Social Force Model (SoFM) to model dynamic human-human and human-robot interactions, creating the first photorealistic and accessible human-robot simulation system powered by neural rendering.
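To give a feel for what a Social Force Model computes, here is a minimal 2D sketch in the classic Helbing style: a driving term that relaxes an agent's velocity towards a desired speed in the direction of its goal, plus an exponential repulsion from each nearby agent. Unit mass is assumed, and all parameter values (desired speed, relaxation time `tau`, repulsion strength `A`, range `B`, agent `radius`) are hypothetical placeholders, not the simulator's settings.

```python
import math


def social_force(pos, vel, goal, others, desired_speed=1.3, tau=0.5,
                 A=2.0, B=0.3, radius=0.3):
    """Acceleration on one agent (unit mass) under a basic social force model."""
    # Driving term: relax current velocity towards the desired goal-directed velocity.
    gx, gy = goal[0] - pos[0], goal[1] - pos[1]
    g_norm = math.hypot(gx, gy) or 1.0
    fx = (desired_speed * gx / g_norm - vel[0]) / tau
    fy = (desired_speed * gy / g_norm - vel[1]) / tau
    # Repulsive term: A * exp((r_ij - d_ij) / B) along the unit vector from j to i,
    # where r_ij = 2 * radius is the sum of the two agents' radii.
    for ox, oy in others:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if d == 0.0:
            continue
        magnitude = A * math.exp((2 * radius - d) / B)
        fx += magnitude * dx / d
        fy += magnitude * dy / d
    return fx, fy


def step(pos, vel, force, dt=0.1):
    """Semi-implicit Euler update: velocity first, then position."""
    vx, vy = vel[0] + force[0] * dt, vel[1] + force[1] * dt
    return (pos[0] + vx * dt, pos[1] + vy * dt), (vx, vy)
```

Calling `social_force` for every agent (treating the robot as just another agent) and then `step` yields the kind of yielding and crowd-flow behaviour the abstract refers to.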

Lip Reading, or Visual Automatic Speech Recognition (V-ASR), is a complex task requiring the interpretation of spoken language exclusively from visual cues, primarily lip movements and facial expressions. This task is especially challenging due to the absence of auditory information and the inherent ambiguity in visually distinguishing phonemes with overlapping visemes, i.e. different phonemes that appear identical on the lips. Current methods typically attempt to predict words or characters directly from these visual cues, but this approach frequently suffers from high error rates due to coarticulation effects and viseme ambiguity. We propose a novel two-stage, phoneme-centric framework for V-ASR that addresses these longstanding challenges. First, our model predicts a compact sequence of phonemes from visual inputs using a Video Transformer with a CTC head, reducing task complexity and achieving robust speaker invariance. This phoneme output then serves as the input to a fine-tuned Large Language Model (LLM), which reconstructs coherent words and sentences by leveraging broader linguistic context. Unlike existing methods that either predict words directly or rely on large-scale multimodal pre-training, our approach explicitly encodes intermediate linguistic structure while remaining highly data-efficient. We demonstrate state-of-the-art performance on two challenging datasets, LRS2 and LRS3, where our method significantly reduces Word Error Rate (WER), achieving a state-of-the-art WER of 18.7 on LRS3 despite using 99.4% less labelled video data than the next best approach.
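
To illustrate how a CTC head turns per-frame predictions into a compact phoneme sequence, here is a minimal best-path (greedy) decoding sketch. The abstract does not say which decoding strategy is used (beam search is also common), and the blank token name and phoneme inventory below are hypothetical.

```python
from itertools import groupby

BLANK = "<blank>"  # hypothetical blank token name


def ctc_greedy_decode(frame_probs, symbols, blank=BLANK):
    """Best-path CTC decoding.

    frame_probs: one probability list per video frame, aligned with `symbols`.
    Returns the phoneme sequence after merging repeats and dropping blanks.
    """
    # 1. Pick the most likely symbol at every frame.
    best_path = [symbols[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]
    # 2. Merge consecutive duplicates (a blank between two identical phonemes keeps both).
    merged = [s for s, _ in groupby(best_path)]
    # 3. Remove blank tokens.
    return [s for s in merged if s != blank]


if __name__ == "__main__":
    symbols = [BLANK, "HH", "AH", "L", "OW"]
    path = ["HH", "HH", BLANK, "AH", "L", "L", BLANK, "L", "OW"]
    one_hot = [[1.0 if s == p else 0.0 for s in symbols] for p in path]
    print(ctc_greedy_decode(one_hot, symbols))
```

The resulting phoneme list is the kind of intermediate representation that the second stage would hand to the language model.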

3D human generation is an important problem with a wide range of applications in computer vision and graphics. Despite recent progress in generative AI, such as diffusion models, and in rendering methods such as Neural Radiance Fields or Gaussian Splatting, controlling the generation of accurate 3D humans from text prompts remains an open challenge. Current methods struggle with fine detail, accurate rendering of hands and faces, human realism, and controllability over appearance. The lack of diversity, realism, and annotation in human image data also remains a challenge, hindering the development of a foundational 3D human model. We present a weakly supervised pipeline that attempts to address these challenges. In the first step, we generate a photorealistic human image dataset with controllable attributes such as appearance, race, and gender, using a state-of-the-art image diffusion model. Next, we propose an efficient mapping approach from image features to 3D point clouds using a transformer-based architecture. Finally, we close the loop by training a point-cloud diffusion model that is conditioned on the same text prompts used to generate the original samples. We demonstrate orders-of-magnitude speed-ups in 3D human generation compared to state-of-the-art approaches, along with significantly improved text-prompt alignment, realism, and rendering quality. The code and data are available at https://github.com/ivashmak/hugediff.git.

The applications of causal inference may be life-critical, including the evaluation of vaccinations, medicine, and social policy. However, when undertaking estimation for causal inference, practitioners rarely have access to what might be called ‘ground-truth’ in a supervised learning setting, meaning the chosen estimation methods cannot be evaluated and must be assumed to be reliable. It is therefore crucial that we have a good understanding of the performance consistency of typical methods available to practitioners. In this work we provide a comprehensive evaluation of recent semiparametric methods (including neural network approaches) for average treatment effect estimation. Such methods have been proposed as a means to derive unbiased causal effect estimates and statistically valid confidence intervals, even when using otherwise non-parametric, data-adaptive machine learning techniques. We also propose a new estimator ‘MultiNet’, and a variation on the semiparametric update step ‘MultiStep’, which we evaluate alongside existing approaches. The performance of both semiparametric and ‘regular’ methods is found to be dataset dependent, indicating an interaction between the methods used, the sample size, and the nature of the data generating process. Our experiments highlight the need for practitioners to check the consistency of their findings, potentially by undertaking multiple analyses with different combinations of estimators.
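
As a concrete example of the semiparametric estimators discussed above, the sketch below implements the standard augmented inverse-propensity-weighted (AIPW, doubly robust) ATE estimator with a normal-approximation confidence interval. It is not MultiNet or MultiStep; the outcome predictions `mu0`, `mu1` and propensity scores `e` are assumed to come from any fitted machine learning models.

```python
import math


def aipw_ate(y, t, mu0, mu1, e, z=1.96):
    """Augmented inverse-propensity-weighted (AIPW) estimate of the average treatment effect.

    y:   observed outcomes
    t:   binary treatment indicators (0 or 1)
    mu0: outcome-model predictions under control, E[Y | X, T=0]
    mu1: outcome-model predictions under treatment, E[Y | X, T=1]
    e:   propensity-score predictions, P(T=1 | X), strictly between 0 and 1
    Returns the point estimate and a normal-approximation confidence interval.
    """
    # Per-sample pseudo-outcomes: outcome-model contrast plus a weighted residual correction.
    psi = [
        m1 - m0 + ti * (yi - m1) / ei - (1 - ti) * (yi - m0) / (1 - ei)
        for yi, ti, m0, m1, ei in zip(y, t, mu0, mu1, e)
    ]
    n = len(psi)
    ate = sum(psi) / n
    variance = sum((p - ate) ** 2 for p in psi) / (n - 1)
    se = math.sqrt(variance / n)
    return ate, (ate - z * se, ate + z * se)
```

The residual correction is what makes the estimate "doubly robust": if the outcome models are poor but the propensities are right, it falls back towards inverse-propensity weighting, and vice versa.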

This paper presents an open and comprehensive framework to systematically evaluate state-of-the-art contributions to self-supervised monocular depth estimation. This includes pretraining, backbone, architectural design choices and loss functions. Many papers in this field claim novelty in either architecture design or loss formulation. However, simply updating the backbone of historical systems results in relative improvements of 25%, allowing them to outperform the majority of existing systems. A systematic evaluation of papers in this field was not straightforward. The need to compare like-with-like in previous papers means that longstanding errors in the evaluation protocol are ubiquitous in the field. It is likely that many papers were not only optimized for particular datasets, but also for errors in the data and evaluation criteria. To aid future research in this area, we release a modular codebase (https://github.com/jspenmar/monodepth_benchmark), allowing for easy evaluation of alternate design decisions against corrected data and evaluation criteria. We re-implement, validate and re-evaluate 16 state-of-the-art contributions and introduce a new dataset (SYNS-Patches) containing dense outdoor depth maps in a variety of both natural and urban scenes. This allows for the computation of informative metrics in complex regions such as depth boundaries.

We explore symbolic policy optimization for various legged locomotion challenges, specifically walker environments ranging from bipedal systems to highly redundant systems with 128 legs. These represent a broad range of action space dimensionalities. We find that state-of-the-art symbolic policy optimization approaches struggle to scale to these higher-dimensional problems, due to the need to iterate over action dimensions and their reliance on a neural network anchor policy. We thus propose Fast Symbolic Policy (FSP) to accelerate the training of symbolic locomotion policies. This approach avoids the need to iterate over the action dimensions and does not require a pre-trained neural network anchor. We also propose Dim-X, a method for effectively reducing the action space dimensionality using the inductive priors of legged locomotion. We demonstrate that FSP with Dim-X can learn symbolic policies that scale better than the baselines, to a degree vastly exceeding what was possible with previous symbolic techniques. We further show that Dim-X on its own can also be integrated into neural network policies to shorten their training time and improve scaling performance.

One of the largest challenges in deploying legged robots in the real world is deriving effective general gaits. In this paper, we present BeeTLe, a framework that enables terrain-aware locomotion without the need for dedicated terrain sensors. BeeTLe is realised as a multi-expert policy Reinforcement Learning (RL) algorithm, which enables multiple gaits, suited to different surface types, to be stored and shared in a single policy. Sensor-free terrain awareness is incorporated using a Recurrent Neural Network (RNN) that infers surface type purely from actuator positions over time. The RNN identifies the terrain with 94% accuracy among 8 possible surface types. We demonstrate that BeeTLe outperforms the baselines across a series of challenges, including traversal of a flat plane, a tilted plane, a sequence of tilted planes, and geometry modelling natural hilly terrain. This holds despite the sequence of tilted planes and the natural hilly terrain not being seen during training.

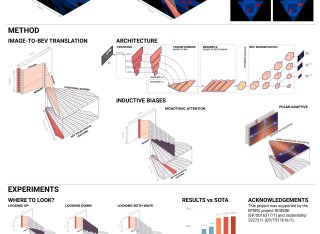

We approach instantaneous mapping, converting images to a top-down view of the world, as a translation problem. We show how a novel form of transformer network can be used to map from images and video directly to an overhead map or bird's-eye-view (BEV) of the world, in a single end-to-end network. We assume a 1-1 correspondence between a vertical scanline in the image and rays passing through the camera location in an overhead map. This lets us formulate map generation from an image as a set of sequence-to-sequence translations. This constrained formulation, based upon a strong physical grounding of the problem, leads to a restricted transformer network that is convolutional in the horizontal direction only. The structure allows us to make efficient use of data when training, and obtains state-of-the-art results for instantaneous mapping on three large-scale datasets, including relative gains of 15% and 30% over the existing best-performing methods on the nuScenes and Argoverse datasets, respectively.
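
The scanline-to-ray correspondence can be made concrete with pinhole geometry. The sketch below assumes an upright, undistorted pinhole camera (no roll or pitch) with horizontal focal length `fx` and principal point `cx` in pixels; it shows how an image column fixes a ray bearing and how a cell along that polar ray maps to Cartesian BEV coordinates. The network's actual resampling from polar to Cartesian maps may differ.

```python
import math


def column_to_ray_angle(u, fx, cx):
    """Horizontal bearing (radians) of the ray through pixel column u.

    Assumes an upright, undistorted pinhole camera with focal length fx and principal
    point cx (in pixels), so every pixel in column u lies in the same vertical plane.
    """
    return math.atan2(u - cx, fx)


def polar_to_bev(u, r, fx, cx):
    """Map (image column u, range r along the ray) to BEV (lateral x, forward z) in metres."""
    theta = column_to_ray_angle(u, fx, cx)
    return r * math.sin(theta), r * math.cos(theta)
```

Because each column only ever maps to its own ray, a model can translate columns independently, which is consistent with a network that is convolutional in the horizontal direction only.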

Hand pose estimation from a single image has many applications. However, approaches to full 3D body pose estimation are typically trained on day-to-day activities or actions. As such, detailed hand-to-hand interactions are poorly represented, especially during motion. We see this in the failure cases of techniques such as OpenPose [6] or MediaPipe [30]. However, accurate hand pose estimation is crucial for many applications where global body motion is less important than accurate hand pose. This paper addresses the problem of 3D hand pose estimation from monocular images or sequences. We present a novel end-to-end framework for 3D hand regression that employs diffusion models, which have shown an excellent ability to capture data distributions for generative purposes. Moreover, we enforce kinematic constraints to ensure that realistic poses are generated by incorporating an explicit forward kinematic layer as part of the network. The proposed model provides state-of-the-art performance when lifting a 2D single-hand image to 3D. When sequence data is available, we additionally apply a Transformer module over a temporal window of consecutive frames to refine the results, overcoming jitter and further increasing accuracy. The method is evaluated quantitatively and qualitatively, showing state-of-the-art robustness, generalization, and accuracy on several different datasets.
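
A forward kinematic layer constrains outputs by predicting joint angles instead of free joint positions, so bone lengths are preserved by construction. The sketch below is a deliberately simplified planar version for a single finger; the real layer would operate in 3D with per-joint rotations over the whole hand, and the function name and arguments are hypothetical.

```python
import math


def finger_forward_kinematics(base, bone_lengths, flexion_angles):
    """Planar forward kinematics for one finger.

    base: (x, y) position of the knuckle joint.
    bone_lengths: fixed length of each phalanx.
    flexion_angles: joint rotations in radians, each relative to the parent bone.
    Returns the positions of the base and every joint down to the fingertip.
    """
    x, y = base
    heading = 0.0
    joints = [(x, y)]
    for length, angle in zip(bone_lengths, flexion_angles):
        heading += angle  # rotations accumulate along the kinematic chain
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        joints.append((x, y))
    return joints
```

Whatever angles the network outputs, consecutive joints stay exactly one bone length apart, which rules out anatomically impossible stretched or shrunken fingers.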

Machine learning models fundamentally rely on large quantities of high-quality data. Collecting the necessary data for these models can be challenging due to cost, scarcity, and privacy restrictions. Signed languages are visual languages used by the deaf community and are considered low-resource languages. Sign language datasets are often orders of magnitude smaller than their spoken language counterparts. Sign Language Production is the task of generating sign language videos from spoken language sentences, while Sign Language Translation is the reverse translation task. Here, we propose leveraging recent advancements in Sign Language Production to augment existing sign language datasets and enhance the performance of Sign Language Translation models. For this, we utilize three techniques: a skeleton-based approach to production, sign stitching, and two photo-realistic generative models, SignGAN and SignSplat. We evaluate the effectiveness of these techniques in enhancing the performance of Sign Language Translation models by generating variation in the signer's appearance and the motion of the skeletal data. Our results demonstrate that the proposed methods can effectively augment existing datasets and enhance the performance of Sign Language Translation models by up to 19%, paving the way for more robust and accurate Sign Language Translation systems, even in resource-constrained environments.

Recent years have seen significant progress in human image generation, particularly with the advancements in diffusion models. However, existing diffusion methods struggle to produce consistent hand anatomy, and the generated images often lack precise control over the hand pose. To address this limitation, we introduce a novel approach to pose-conditioned human image generation, dividing the process into two stages: hand generation and subsequent body outpainting around the hands. We propose training the hand generator in a multi-task setting to produce both hand images and their corresponding segmentation masks, and employ the trained model in the first stage of generation. An adapted ControlNet model is then used in the second stage to outpaint the body around the generated hands, producing the final result. To preserve hand details during the second stage, we introduce a novel blending technique that combines the results of both stages coherently. This involves sequentially expanding the outpainted region while fusing the latent representations, to ensure a seamless and cohesive synthesis of the final image. Experimental evaluations on the HaGRID dataset demonstrate the superiority of our proposed method over state-of-the-art techniques in both pose accuracy and image quality. Our approach not only enhances the quality of the generated hands but also offers improved control over hand pose, advancing the capabilities of pose-conditioned human image generation. The source code is available at https://github.com/apelykh/hand-to-diffusion.

This paper addresses the problem of diversity-aware sign language production, where, given an image (or sequence) of a signer, we want to produce another image with the same pose but different attributes (e.g. gender, skin color). To this end, we extend the variational inference paradigm to include information about the pose and the conditioning of the attributes. This formulation improves the quality of the synthesised images. The generator framework is presented as a UNet architecture to ensure spatial preservation of the input pose, and we include the visual features from the variational inference to maintain control over appearance and style. We generate each body part with a separate decoder, which allows the generator to deliver better overall results. Experiments on the SMILE II dataset show that the proposed model performs quantitatively better than state-of-the-art baselines regarding diversity, per-pixel image quality, and pose estimation. Quantitatively, it faithfully reproduces non-manual features for signers.

Significant advances in robotics and machine learning have resulted in many datasets designed to support research into autonomous vehicle technology. However, these datasets are rarely suitable for a wide variety of navigation tasks. For example, datasets that include multiple cameras often have short trajectories without loops that are unsuitable for the evaluation of longer-range SLAM or odometry systems, and datasets with a single camera often lack other sensors, making them unsuitable for sensor fusion approaches. Furthermore, alternative environmental representations such as semantic Bird's Eye View (BEV) maps are growing in popularity, but datasets often lack accurate ground truth and are not flexible enough to adapt to new research trends. To address this gap, we introduce Campus Map, a novel large-scale multi-camera dataset with 2M images from 6 mounted cameras that includes GPS data and 64-beam, 125k-point LiDAR scans totalling 8M points (raw packets also provided). The dataset consists of 16 sequences in a large car park and 6 long-term trajectories around a university campus that provide data to support research into a variety of autonomous driving and parking tasks. Long trajectories (average 10 min) and many loops make the dataset ideal for the evaluation of SLAM, odometry and loop closure algorithms, and we provide several state-of-the-art baselines. We also include 40k semantic BEV maps rendered from a digital twin. This novel approach to ground truth generation allows us to produce more accurate and crisp semantic maps than are currently available. We make the simulation environment available to allow researchers to adapt the dataset to their specific needs. Dataset available at: cvssp.org/data/twizy_data

Sign spotting, the task of identifying and localizing individual signs within continuous sign language video, plays a pivotal role in scaling dataset annotations and addressing the severe data scarcity issue in sign language translation. While automatic sign spotting holds great promise for enabling frame-level supervision at scale, it grapples with challenges such as vocabulary inflexibility and ambiguity inherent in continuous sign streams. Hence, we introduce a novel, training-free framework that integrates Large Language Models (LLMs) to significantly enhance sign spotting quality. Our approach extracts global spatio-temporal and hand shape features, which are then matched against a large-scale sign dictionary using dynamic time warping and cosine similarity. This dictionary-based matching inherently offers superior vocabulary flexibility without requiring model retraining. To mitigate noise and ambiguity from the matching process, an LLM performs context-aware gloss disambiguation via beam search, notably without fine-tuning. Extensive experiments on both synthetic and real-world sign language datasets demonstrate our method's superior accuracy and sentence fluency compared to traditional approaches, highlighting the potential of LLMs in advancing sign spotting.
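
The dictionary matching step can be sketched as dynamic time warping (DTW) with cosine distance as the local frame-to-frame cost, so that a signing segment performed faster or slower than the dictionary entry can still match. How exactly the method combines DTW and cosine similarity is not specified in the abstract; this is one plausible combination, and the gloss names and two-dimensional features are toy assumptions.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def dtw_distance(seq_a, seq_b, dist=cosine_distance):
    """Classic O(len(a) * len(b)) dynamic time warping with a pluggable local cost."""
    n, m = len(seq_a), len(seq_b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = dist(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def rank_glosses(query, dictionary):
    """Return dictionary glosses ordered from best to worst DTW match to the query."""
    return sorted(dictionary, key=lambda gloss: dtw_distance(query, dictionary[gloss]))
```

Adding a new sign only means adding a new dictionary entry, which is the vocabulary flexibility the abstract refers to; the ranked candidates would then be passed to the LLM for context-aware disambiguation.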

Sign language representation learning presents unique challenges due to the complex spatio-temporal nature of signs and the scarcity of labelled datasets. Existing methods often rely either on models pre-trained on general visual tasks, which lack sign-specific features, or on complex multimodal and multi-branch architectures. To bridge this gap, we introduce a scalable, self-supervised framework for sign representation learning. We incorporate important inductive (sign) priors during the training of our RGB model. To do this, we use simple but important skeleton-based cues while pretraining a masked autoencoder. These sign-specific priors, alongside feature regularization and an adversarial style-agnostic loss, provide a powerful backbone. Notably, our model does not require skeletal keypoints during inference, avoiding the limitations of keypoint-based models during downstream tasks. When fine-tuned, we achieve state-of-the-art performance for sign recognition on the WLASL, ASL-Citizen and NMFs-CSL datasets, using a simpler architecture and only a single modality. Beyond recognition, our frozen model excels in sign dictionary retrieval and sign translation, surpassing standard MAE pretraining and skeletal-based representations in retrieval. It also reduces computational costs for training existing sign translation models while maintaining strong performance on Phoenix2014T, CSL-Daily and How2Sign.

Realistic, high-fidelity 3D facial animations are crucial for expressive avatar systems in human-computer interaction and accessibility. Although prior methods show promising quality, their reliance on the mesh domain limits their ability to fully leverage the rapid visual innovations seen in 2D computer vision and graphics. We propose VisualSpeaker, a novel method that bridges this gap using photorealistic differentiable rendering, supervised by visual speech recognition, for improved 3D facial animation. Our contribution is a perceptual lip-reading loss, derived by passing photorealistic 3D Gaussian Splatting avatar renders through a pre-trained Visual Automatic Speech Recognition model during training. Evaluation on the MEAD dataset demonstrates that VisualSpeaker improves both the standard Lip Vertex Error metric by 56.1% and the perceptual quality of the generated animations, while retaining the controllability of mesh-driven animation. This perceptual focus naturally supports accurate mouthings, essential cues that disambiguate similar manual signs in sign language avatars.

Gloss-free Sign Language Translation (SLT) has advanced rapidly, achieving strong performance without relying on gloss annotations. However, these gains have often come with increased model complexity and high computational demands, raising concerns about scalability, especially as large-scale sign language datasets become more common. We propose a segment-aware visual tokenization framework that leverages sign segmentation to convert continuous video into discrete, sign-informed visual tokens. This reduces input sequence length by up to 50% compared to prior methods, resulting in up to 2.67× lower memory usage and better scalability on larger datasets. To bridge the visual and linguistic modalities, we introduce a token-to-token contrastive alignment objective, along with dual-level supervision that aligns both language embeddings and intermediate hidden states. This improves fine-grained cross-modal alignment without relying on gloss-level supervision. Our approach notably exceeds the performance of state-of-the-art methods on the PHOENIX14T benchmark, while significantly reducing sequence length. Further experiments also demonstrate improved performance over prior work under comparable sequence lengths, validating the potential of our tokenization and alignment strategies.
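
To show why segment-aware tokens shorten the input, the sketch below collapses per-frame features into one token per sign segment by mean pooling. This is a hypothetical simplification: the framework's tokenizer is likely learned rather than a plain average, and the segment boundaries are assumed to come from a separate sign segmentation model.

```python
def segment_tokens(frame_features, boundaries):
    """Mean-pool per-frame feature vectors inside each (start, end) segment.

    frame_features: list of equal-length feature vectors, one per video frame.
    boundaries: half-open frame ranges [start, end) from a sign segmentation model.
    Returns one token per segment, so sequence length drops from frames to segments.
    """
    tokens = []
    for start, end in boundaries:
        segment = frame_features[start:end]
        if not segment:
            raise ValueError(f"empty segment [{start}, {end})")
        dim = len(segment[0])
        tokens.append([sum(f[d] for f in segment) / len(segment) for d in range(dim)])
    return tokens
```

Since attention cost grows quadratically with sequence length, replacing many frame tokens with a few segment tokens is where the memory savings come from.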

This paper presents the results of the fourth edition of the Monocular Depth Estimation Challenge (MDEC), which focuses on zero-shot generalization to the SYNS-Patches benchmark, a dataset featuring challenging environments in both natural and indoor settings. In this edition, we revised the evaluation protocol to use least-squares alignment with two degrees of freedom to support disparity and affine invariant predictions. We also revised the baselines and included popular off-the-shelf methods: Depth Anything v2 and Marigold. The challenge received a total of 24 submissions that outperformed the baselines on the test set; 10 of these included a report describing their approach, with most leading methods relying on affine-invariant predictions. The challenge winners improved the 3D F-Score over the previous edition’s best result, raising it from 22.58% to 23.05%.
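
Least-squares alignment with two degrees of freedom means fitting a scale `s` and shift `t` so that `s * prediction + t` best matches the ground truth before computing metrics. The closed-form solution is sketched below; which space the alignment is done in (depth or disparity), and how invalid pixels are masked, are challenge details not given here and are left as assumptions.

```python
def align_scale_shift(pred, target):
    """Least-squares scale s and shift t minimising sum((s * p + t - g) ** 2).

    pred and target are flattened lists of valid pixels (same length, pred not constant).
    """
    n = len(pred)
    mean_p = sum(pred) / n
    mean_g = sum(target) / n
    cov = sum((p - mean_p) * (g - mean_g) for p, g in zip(pred, target))
    var = sum((p - mean_p) ** 2 for p in pred)
    s = cov / var
    t = mean_g - s * mean_p
    return s, t


def apply_alignment(pred, s, t):
    """Map predictions into the ground-truth frame using the fitted scale and shift."""
    return [s * p + t for p in pred]
```

Allowing both a scale and a shift is what lets affine-invariant predictors, which only get depth or disparity right up to an unknown scale and offset, be evaluated fairly alongside metric methods.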

Sign Language Production (SLP) is the task of generating sign language video from spoken language inputs. The field has seen a range of innovations over the last few years, with the introduction of deep learning-based approaches providing significant improvements in the realism and naturalness of generated outputs. However, the lack of standardized evaluation metrics for SLP approaches hampers meaningful comparisons across different systems. To address this, we introduce the first Sign Language Production Challenge, held as part of the third SLRTP Workshop at CVPR 2025. The competition aims to evaluate architectures that translate from spoken language sentences to a sequence of skeleton poses, known as Text-to-Pose (T2P) translation, over a range of metrics. For our evaluation data, we use the RWTH-PHOENIX-Weather-2014T dataset, a German Sign Language (Deutsche Gebärdensprache, DGS) weather broadcast dataset. In addition, we curate a custom hidden test set from a similar domain of discourse. This paper presents the challenge design and the winning methodologies. The challenge attracted 33 participants who submitted 231 solutions, with the top-performing team achieving a BLEU-1 score of 31.40 and a DTW-MJE of 0.0574. The winning approach utilized a retrieval-based framework and a pre-trained language model. As part of the workshop, we release a standardized evaluation network, including high-quality skeleton extraction-based keypoints, establishing a consistent baseline for the SLP field that will enable future researchers to compare their work against a broader range of methods.

This paper summarizes the results of the first Monocular Depth Estimation Challenge (MDEC) organized at WACV 2023. This challenge evaluated the progress of self-supervised monocular depth estimation on the challenging SYNS-Patches dataset. The challenge was organized on CodaLab and received submissions from 4 valid teams. Participants were provided with a devkit containing updated reference implementations for 16 state-of-the-art algorithms and 4 novel techniques. The threshold for acceptance for novel techniques was to outperform every one of the 16 SotA baselines. All participants outperformed the baseline in traditional metrics such as MAE or AbsRel. However, pointcloud reconstruction metrics were challenging to improve upon. We found that predictions were characterized by interpolation artefacts at object boundaries and errors in relative object positioning. We hope this challenge is a valuable contribution to the community and encourage authors to participate in future editions.

This paper discusses the results for the second edition of the Monocular Depth Estimation Challenge (MDEC). This edition was open to methods using any form of supervision, including fully-supervised, self-supervised, multi-task or proxy depth. The challenge was based around the SYNS-Patches dataset, which features a wide diversity of environments with high-quality dense ground-truth. This includes complex natural environments, e.g. forests or fields, which are greatly underrepresented in current benchmarks. The challenge received eight unique submissions that outperformed the provided SotA baseline on any of the pointcloud- or image-based metrics. The top supervised submission improved relative F-Score by 27.62%, while the top self-supervised submission improved it by 16.61%. Supervised submissions generally leveraged large collections of datasets to improve data diversity. Self-supervised submissions instead updated the network architecture and pretrained backbones. These results represent significant progress in the field, while highlighting avenues for future research, such as reducing interpolation artifacts at depth boundaries, improving self-supervised indoor performance and improving overall natural image accuracy.

The visual anonymisation of sign language data is an essential task to address privacy concerns raised by large-scale dataset collection. Previous anonymisation techniques have either significantly affected sign comprehension or required manual, labour-intensive work. In this paper, we formally introduce the task of Sign Language Video Anonymisation (SLVA) as an automatic method to anonymise the visual appearance of a sign language video whilst retaining the meaning of the original sign language sequence. To tackle SLVA, we propose AnonySign, a novel automatic approach for visual anonymisation of sign language data. We first extract pose information from the source video to remove the original signer appearance. We next generate a photo-realistic sign language video of a novel appearance from the pose sequence, using image-to-image translation methods in a conditional variational autoencoder framework. An approximate posterior style distribution is learnt, from which novel human appearances can be sampled. In addition, we propose a novel style loss that ensures style consistency in the anonymised sign language videos. We evaluate AnonySign for the SLVA task with extensive quantitative and qualitative experiments highlighting both realism and anonymity of our novel human appearance synthesis. In addition, we formalise an anonymity perceptual study as an evaluation criterion for the SLVA task and showcase that video anonymisation using AnonySign retains the original sign language content.

Most of the vision-based sign language research to date has focused on Isolated Sign Language Recognition (ISLR), where the objective is to predict a single sign class given a short video clip. Although there has been significant progress in ISLR, its real-life applications are limited. In this paper, we instead focus on the challenging task of Sign Spotting, where the goal is to simultaneously identify and localise signs in continuous co-articulated sign videos. To address the limitations of current ISLR-based models, we propose a hierarchical sign spotting approach which learns coarse-to-fine spatio-temporal sign features to take advantage of representations at various temporal levels and provide more precise sign localisation. Specifically, we develop the Hierarchical Sign I3D model (HS-I3D), which consists of a hierarchical network head attached to the existing spatio-temporal I3D model to exploit features at different layers of the network. We evaluate HS-I3D on the ChaLearn 2022 Sign Spotting Challenge (MSSL track) and achieve a state-of-the-art F1 score of 0.607, the top-ranked winning solution of the competition.

The ability to produce large-scale maps for navigation, path planning and other tasks is a crucial step for autonomous agents, but it remains challenging. In this work, we introduce BEV-SLAM, a novel type of graph-based SLAM that aligns semantically-segmented Bird's Eye View (BEV) predictions from monocular cameras. We introduce a novel form of occlusion reasoning into BEV estimation and demonstrate its importance in aiding the spatial aggregation of BEV predictions. The result is a versatile SLAM system that can operate across arbitrary multi-camera configurations and can be seamlessly integrated with other sensors. We show that using multiple cameras significantly increases performance and achieves lower relative error than high-performance GPS. The resulting system is able to create large, dense, globally-consistent world maps from monocular cameras mounted around an ego vehicle. The maps are metric and correctly scaled, making them suitable for downstream navigation tasks.

Recent approaches to Sign Language Production (SLP) have adopted spoken language Neural Machine Translation (NMT) architectures, applied without sign-specific modifications. In addition, these works represent sign language as a sequence of skeleton pose vectors, projected to an abstract representation with no inherent skeletal structure. In this paper, we represent sign language sequences as a skeletal graph structure, with joints as nodes and both spatial and temporal connections as edges. To operate on this graphical structure, we propose Skeletal Graph Self-Attention (SGSA), a novel graphical attention layer that embeds a skeleton inductive bias into the SLP model. Retaining the skeletal feature representation throughout, we directly apply a spatio-temporal adjacency matrix within the self-attention formulation. This provides structure and context to each skeletal joint that is not possible with a non-graphical abstract representation, enabling fluid and expressive sign language production. We evaluate our Skeletal Graph Self-Attention architecture on the challenging RWTH-PHOENIX-Weather-2014T (PHOENIX14T) dataset, achieving state-of-the-art back translation performance with improvements of 8% and 7% over competing methods on the dev and test sets, respectively.
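The abstract describes applying a spatio-temporal adjacency matrix directly inside self-attention. One simple reading, sketched below with NumPy, masks the attention scores so that each joint attends only to itself, its skeletal neighbours in the same frame, and the same joint in adjacent frames. This is an illustrative assumption rather than the published SGSA layer: the helper names, the frame-major node ordering and the random projection weights are all hypothetical.

```python
import numpy as np

def spatio_temporal_adjacency(bones, num_joints, num_frames):
    """Binary adjacency over nodes indexed t * num_joints + j (hypothetical layout)."""
    n = num_joints * num_frames
    a = np.eye(n)  # self-loops guarantee every row has at least one edge
    for t in range(num_frames):
        base = t * num_joints
        for i, j in bones:  # spatial edges (bones) within frame t
            a[base + i, base + j] = a[base + j, base + i] = 1
        if t + 1 < num_frames:  # temporal edges: same joint in frames t and t+1
            for j in range(num_joints):
                a[base + j, base + num_joints + j] = 1
                a[base + num_joints + j, base + j] = 1
    return a

def skeletal_graph_self_attention(x, adjacency, w_q, w_k, w_v):
    """Single-head self-attention restricted to edges of the skeletal graph.

    x: (N, d) node features; w_q, w_k, w_v: (d, d_k) projections.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Unconnected joints get -inf, so they receive exactly zero attention.
    scores = np.where(adjacency > 0, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

# Usage: a toy 3-joint chain observed over 2 frames.
rng = np.random.default_rng(0)
adj = spatio_temporal_adjacency([(0, 1), (1, 2)], num_joints=3, num_frames=2)
x = rng.normal(size=(6, 8))
w_q, w_k, w_v = (rng.normal(size=(8, 4)) for _ in range(3))
out, attn = skeletal_graph_self_attention(x, adj, w_q, w_k, w_v)
```

Masking keeps the standard attention computation intact while restricting each joint's context to its graph neighbourhood, which is the kind of skeletal inductive bias the abstract describes.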

We propose HandOcc, a novel framework for hand rendering based upon occupancy. Popular rendering methods such as NeRF are often combined with parametric meshes to provide deformable hand models. However, such approaches present a trade-off between the fidelity of the mesh and the complexity and dimensionality of the parametric model. The simplicity of parametric mesh structures is appealing, but it binds methods to mesh initialization, preventing them from generalizing to objects for which no parametric model exists. It also means that estimation is tied to mesh resolution and the accuracy of mesh fitting. This paper presents a pipeline for meshless 3D rendering, which we apply to the hands. Given only a 3D skeleton, the desired appearance is extracted via a convolutional model. We do this by exploiting a NeRF renderer conditioned upon an occupancy-based representation. The approach uses hand occupancy to resolve hand-to-hand interactions, further improving results, and enables fast rendering and excellent hand appearance transfer. We achieve state-of-the-art results on the InterHand2.6M benchmark dataset.

Recent approaches to multi-task learning (MTL) have focused on modelling connections between tasks at the decoder level. This leads to a tight coupling between tasks, which then need retraining whenever a task is inserted or removed. We argue that MTL is a stepping stone towards universal feature learning (UFL): the ability to learn generic features that can be applied to new tasks without retraining. We propose Medusa to realize this goal, designing task heads with dual attention mechanisms. The shared feature attention masks relevant backbone features for each task, allowing the network to learn a generic representation. Meanwhile, a novel Multi-Scale Attention head allows the network to better combine per-task features from different scales when making the final prediction. We show the effectiveness of Medusa in UFL (a 13.18% improvement), while maintaining MTL performance and being 25% more efficient than previous approaches.
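To make "masking relevant backbone features for each task" concrete, the sketch below shows one generic form of per-task feature masking: each task owns a learnt query vector that scores every spatial location of the shared feature map, and a sigmoid turns those scores into a soft mask. The query-vector formulation and the function names are assumptions for illustration only, not Medusa's published attention module.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def shared_feature_attention(features, task_queries):
    """Soft per-task spatial masks over a shared backbone feature map.

    features:     (C, H, W) shared backbone features
    task_queries: (T, C) one hypothetical learnt query vector per task
    returns:      (T, C, H, W) masked features, one copy per task
    """
    # Relevance of every location to every task via a channel-wise dot product.
    logits = np.einsum("tc,chw->thw", task_queries, features)
    masks = sigmoid(logits)[:, None]  # (T, 1, H, W), values in (0, 1)
    return masks * features[None]     # broadcast each mask across channels

# Usage: 2 tasks sharing a 4-channel, 3x3 feature map.
rng = np.random.default_rng(2)
feats = rng.normal(size=(4, 3, 3))
queries = rng.normal(size=(2, 4))
out = shared_feature_attention(feats, queries)
```

In this form, adding a task only adds a new query vector and head; the backbone and the other tasks' parameters are untouched, which matches the decoupling the abstract argues for.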

We approach instantaneous mapping, converting images to a top-down view of the world, as a translation problem. We show how a novel form of transformer network can be used to map from images and video directly to an overhead map or bird's-eye-view (BEV) of the world, in a single end-to-end network. We assume a one-to-one correspondence between a vertical scanline in the image and rays passing through the camera location in an overhead map. This lets us formulate map generation from an image as a set of sequence-to-sequence translations. Posing the problem as translation allows the network to use the context of the image when interpreting the role of each pixel. This constrained formulation, based upon a strong physical grounding of the problem, leads to a restricted transformer network that is convolutional in the horizontal direction only. The structure allows us to make efficient use of data when training, and obtains state-of-the-art results for instantaneous mapping on three large-scale datasets, including relative gains of 15% and 30% over the existing best-performing methods on the nuScenes and Argoverse datasets, respectively.
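The geometry behind the scanline-to-ray assumption can be illustrated with a pinhole camera: image column u corresponds to a ray at horizontal angle atan((u - cx) / fx), so each column of image features becomes one input sequence, and each polar BEV ray becomes one output sequence over depth. The sketch below assumes a simple pinhole model with focal length `fx` and principal point `cx`; the function names are hypothetical and the learnt translation step itself is omitted.

```python
import numpy as np

def column_ray_angles(width, fx, cx):
    """Horizontal angle of the ray through each image column (pinhole model)."""
    u = np.arange(width, dtype=float)
    return np.arctan2(u - cx, fx)

def column_sequences(features):
    """Split an (H, W, C) feature map into W vertical sequences of shape (H, C)."""
    return np.transpose(features, (1, 0, 2))

def polar_to_cartesian(theta, depths):
    """Ground-plane (x, z) position of every (depth, ray) cell of a polar BEV grid."""
    r, th = np.meshgrid(depths, theta, indexing="ij")  # both (D, W)
    return r * np.sin(th), r * np.cos(th)

# Usage: a 5-column image whose principal point lies on column 2.
theta = column_ray_angles(5, fx=2.0, cx=2.0)
x, z = polar_to_cartesian(theta, np.array([1.0, 2.0]))
seqs = column_sequences(np.zeros((4, 5, 3)))
```

Because every column maps to exactly one ray, each column-to-ray translation can be processed independently, which is why the restricted network only needs to be convolutional in the horizontal direction.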

It is common practice to represent spoken languages at their phonetic level. However, for sign languages, this implies breaking motion into its constituent motion primitives. Avatar-based Sign Language Production (SLP) has traditionally done just this, building up animation from sequences of hand motions, shapes and facial expressions. However, more recent deep learning based solutions to SLP have tackled the problem using a single network that estimates the full skeletal structure. We propose splitting the SLP task into two distinct, jointly-trained sub-tasks. The first, translation sub-task translates from spoken language to a latent sign language representation, with gloss supervision. Subsequently, the animation sub-task aims to produce expressive sign language sequences that closely resemble the learnt spatio-temporal representation. Using a progressive transformer for the translation sub-task, we propose a novel Mixture of Motion Primitives (MoMP) architecture for sign language animation. A set of distinct motion primitives is learnt during training, which can be temporally combined at inference to animate continuous sign language sequences. We evaluate on the challenging RWTH-PHOENIX-Weather-2014T (PHOENIX14T) dataset, presenting extensive ablation studies and showing that MoMP outperforms baselines in user evaluations. We achieve state-of-the-art back translation performance with an 11% improvement over competing results. Importantly, and for the first time, we show stronger performance for a full translation pipeline going from spoken language to sign than from gloss to sign.
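The idea of temporally combining learnt motion primitives can be pictured as a mixture of experts: at every frame, a gating network produces weights over K primitives, and the output pose is the weighted sum of the primitives' predictions. The sketch below uses linear experts and a linear gate purely as stand-ins; the expert form, the shapes and the function names are assumptions, not the MoMP architecture itself.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def mixture_of_primitives(latent, expert_weights, gate_weights):
    """Frame-wise gated combination of K linear 'motion primitive' experts.

    latent:         (T, d) latent sign representation, one row per frame
    expert_weights: (K, d, p) hypothetical linear expert per primitive
    gate_weights:   (d, K) hypothetical gating projection
    returns:        (T, p) pose frames and (T, K) gating weights
    """
    gates = softmax(latent @ gate_weights)                          # (T, K)
    expert_out = np.einsum("td,kdp->tkp", latent, expert_weights)   # (T, K, p)
    poses = np.einsum("tk,tkp->tp", gates, expert_out)              # weighted sum
    return poses, gates

# Usage: 7 frames, 6-d latent, 3 primitives, 10-d pose vectors.
rng = np.random.default_rng(1)
latent = rng.normal(size=(7, 6))
experts = rng.normal(size=(3, 6, 10))
gate_w = rng.normal(size=(6, 3))
poses, gates = mixture_of_primitives(latent, experts, gate_w)
```

Because the gate is recomputed per frame, different primitives can dominate at different points in a sequence, which is one way continuous signing could be assembled from a fixed set of learnt primitives.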

Undertaking causal inference with observational data is highly valuable across a wide range of tasks, including the development of medical treatments, advertising and marketing, and policy making. There are two significant challenges associated with causal inference from observational data: treatment assignment heterogeneity (i.e., differences between the treated and untreated groups), and an absence of counterfactual data (i.e., not knowing what would have happened had an individual who received treatment instead not been treated). We address these two challenges by combining structured inference and targeted learning. In terms of structure, we factorize the joint distribution into risk, confounding, instrumental, and miscellaneous factors; in terms of targeted learning, we apply a regularizer derived from the influence curve in order to reduce residual bias. An ablation study is undertaken, and an evaluation on benchmark datasets demonstrates that our model, TVAE, achieves competitive and state-of-the-art performance across these benchmarks.
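For readers unfamiliar with influence-curve-based regularisation, the sketch below shows the standard efficient influence curve for the average treatment effect (ATE) and a targeted regulariser of the kind popularised by targeted maximum likelihood estimation: the outcome prediction is perturbed by a learnt scalar eps times the "clever covariate" H(t, x) = t / g(x) - (1 - t) / (1 - g(x)), where g(x) is the propensity score. Whether TVAE's regulariser takes exactly this form is an assumption here; the function names are hypothetical.

```python
import numpy as np

def clever_covariate(t, g):
    """H(t, x) = t / g(x) - (1 - t) / (1 - g(x)), with g(x) = P(T = 1 | x)."""
    return t / g - (1 - t) / (1 - g)

def targeted_regularizer(y, t, q_t, g, eps):
    """Squared error of the eps-perturbed outcome model Q(t, x) + eps * H(t, x).

    y: observed outcomes, t: binary treatments, q_t: predicted Q(t_i, x_i),
    g: propensity scores, eps: scalar trained jointly with the model.
    """
    q_tilde = q_t + eps * clever_covariate(t, g)
    return np.mean((y - q_tilde) ** 2)

def efficient_influence_curve(y, t, q0, q1, g):
    """Per-sample influence curve of the plug-in ATE estimate psi = mean(q1 - q0)."""
    q_t = np.where(t == 1, q1, q0)
    psi = np.mean(q1 - q0)
    return clever_covariate(t, g) * (y - q_t) + (q1 - q0) - psi

# Usage: toy outcomes that the outcome model predicts perfectly.
y = np.array([1.0, 0.0, 2.0])
t = np.array([1, 0, 1])
q1 = np.array([1.0, 0.5, 2.0])
q0 = np.array([0.2, 0.0, 1.0])
g = np.array([0.6, 0.4, 0.5])
ic = efficient_influence_curve(y, t, q0, q1, g)
```

Minimising the regulariser over eps drives the mean of the influence curve towards zero, which is the estimating-equation condition that removes first-order residual bias in the ATE estimate.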

Predicting 3D human pose from a single monoscopic video can be highly challenging due to factors such as low resolution, motion blur and occlusion, in addition to the fundamental ambiguity in estimating 3D from 2D. Approaches that directly regress the 3D pose from independent images can be particularly susceptible to these factors, resulting in jitter, noise and/or inconsistencies in skeletal estimation. Many of these problems can be overcome if the temporal evolution of the scene and skeleton is taken into account. However, rather than tracking body parts and trying to temporally smooth them, we propose a novel transformer-based network that can learn a distribution over both pose and motion in an unsupervised fashion. We call our approach Skeletor. Skeletor overcomes inaccuracies in detection and corrects partial or entire skeleton corruption. Skeletor uses strong priors learnt from 25 million frames to correct skeleton sequences smoothly and consistently. Skeletor can achieve this because it implicitly learns the spatio-temporal context of human motion via a transformer-based neural network. Extensive experiments show that Skeletor achieves improved performance on 3D human pose estimation and further provides benefits for downstream tasks such as sign language translation.

This document is the product of consultation between the University of Surrey, Signapse AI Ltd and representatives from the Deaf community. It attempts to describe a taxonomy which can be used to compare and contrast the functionality of computational approaches that attempt to achieve automatic translation of spoken language into sign language. We have endeavoured to make this framework language-agnostic so that it can be used to compare contrasting approaches that employ different technological solutions across different languages. It provides a roadmap to quantify the steps towards fully automatic translation.

In this work, we propose a framework to collect a large-scale, diverse sign language dataset that can be used to train automatic sign language recognition models. The first contribution of this work is SDTrack, a generic method for signer tracking and diarisation in the wild. Our second contribution is SeeHear, a dataset of 90 hours of British Sign Language (BSL) content featuring more than 1000 signers, and including interviews, monologues and debates. Using SDTrack, the SeeHear dataset is annotated with 35K active signing tracks, with corresponding signer identities and subtitles, and 40K automatically localised sign labels. As a third contribution, we provide benchmarks for signer diarisation and sign recognition on SeeHear.

Sign language conveys information through multiple channels composed of manual (handshape, hand movement) and non-manual (facial expression, mouthing, body posture) components. Sign language assessment involves giving granular feedback to a learner on the correctness of the manual and non-manual components, aiding the learner's progress. Existing methods rely on handcrafted skeleton-based features for hand movement within a KL-HMM framework to identify errors in manual components. However, modern deep learning models offer powerful spatio-temporal video representations of hand movement and facial expressions. Despite their success in classification tasks, these representations often struggle to attribute errors to specific sources, such as incorrect handshape, improper movement, or incorrect facial expressions. To address this limitation, we leverage and analyze the spatio-temporal representations from Inflated 3D Convolutional Networks (I3D) and integrate them into the KL-HMM framework to assess sign language videos on both manual and non-manual components. By applying masking and cropping techniques, we isolate and evaluate distinct channels: hand movement and facial expressions using the I3D model, and handshape using a CNN-based model. Our approach outperforms traditional methods based on handcrafted features, as validated through experiments on the SMILE-DSGS dataset, demonstrating its potential to enhance the effectiveness of sign language learning tools.

Self-supervised monocular depth estimation (SS-MDE) has the potential to scale to vast quantities of data. Unfortunately, existing approaches limit themselves to the automotive domain, resulting in models incapable of generalizing to complex environments such as natural or indoor settings. To address this, we propose a large-scale SlowTV dataset curated from YouTube, containing an order of magnitude more data than existing automotive datasets. SlowTV contains 1.7M images from a rich diversity of environments, such as worldwide seasonal hiking, scenic driving and scuba diving. Using this dataset, we train an SS-MDE model that provides zero-shot generalization to a large collection of indoor/outdoor datasets. The resulting model outperforms all existing self-supervised approaches and narrows the gap to the supervised state of the art, despite using a more efficient architecture. We additionally introduce a collection of best practices to further maximize performance and zero-shot generalization: 1) aspect ratio augmentation, 2) camera intrinsic estimation, 3) support frame randomization and 4) flexible motion estimation.
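Aspect ratio augmentation interacts with camera intrinsics: cropping shifts the principal point, and resizing scales both the focal lengths and the principal point. The sketch below picks a random crop with a target width/height ratio and updates a 3x3 intrinsics matrix accordingly. It assumes a centre-free random crop followed by a resize, ignores half-pixel conventions, and uses hypothetical function names; it is not the exact SlowTV augmentation recipe.

```python
import numpy as np

def crop_to_aspect(height, width, aspect, rng):
    """Random crop box (x0, y0, w, h) whose width / height ratio is `aspect`."""
    if width / height > aspect:   # image too wide: keep full height
        h = height
        w = int(round(h * aspect))
    else:                         # image too tall: keep full width
        w = width
        h = int(round(w / aspect))
    x0 = int(rng.integers(0, width - w + 1))
    y0 = int(rng.integers(0, height - h + 1))
    return x0, y0, w, h

def adjust_intrinsics(K, box, out_size):
    """Update intrinsics K for a crop `box` followed by a resize to `out_size`.

    out_size is (out_w, out_h); half-pixel offsets are ignored in this sketch.
    """
    x0, y0, w, h = box
    out_w, out_h = out_size
    sx, sy = out_w / w, out_h / h
    K = K.astype(float).copy()
    K[0, 0] *= sx                   # fx scales with horizontal resize
    K[1, 1] *= sy                   # fy scales with vertical resize
    K[0, 2] = (K[0, 2] - x0) * sx   # principal point shifts by the crop offset
    K[1, 2] = (K[1, 2] - y0) * sy
    return K

# Usage: a 128x96 image with fx = fy = 100 and the principal point at its centre.
K = np.array([[100.0, 0.0, 64.0], [0.0, 100.0, 48.0], [0.0, 0.0, 1.0]])
rng = np.random.default_rng(0)
box = crop_to_aspect(96, 128, 1.0, rng)
K_new = adjust_intrinsics(K, (16, 0, 96, 96), (192, 192))
```

Keeping the intrinsics consistent with the augmented image matters for self-supervised training, because view synthesis reprojects pixels between frames using K.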