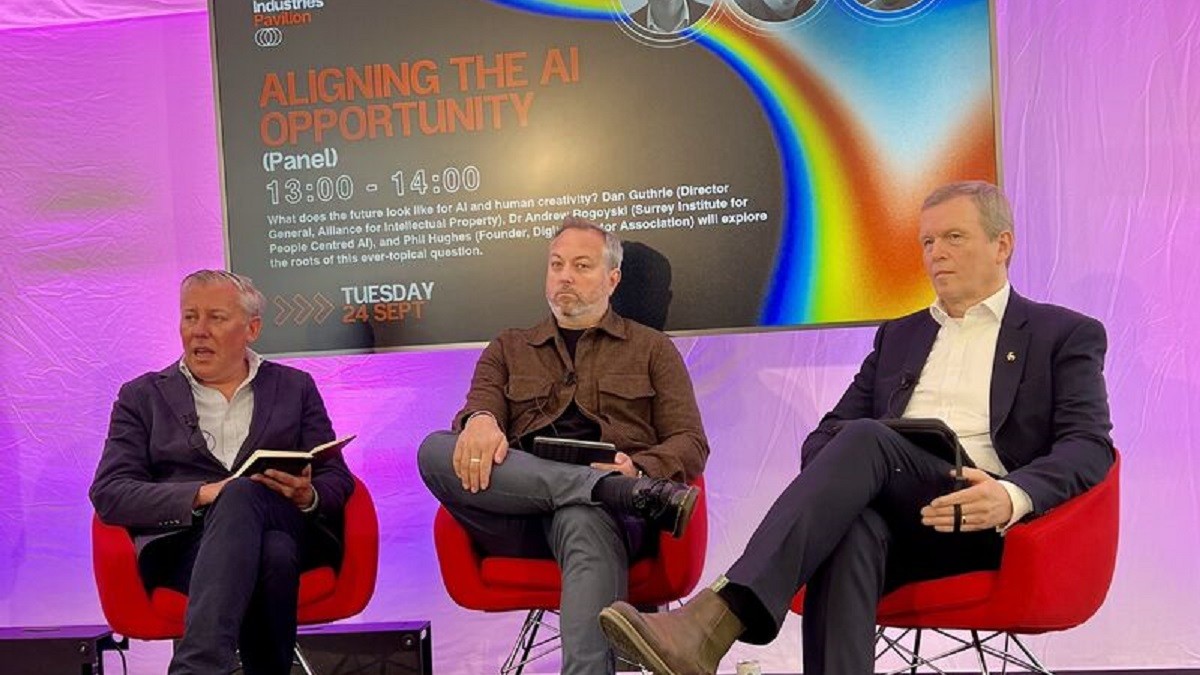

About the University of Surrey’s Institute for People-Centred AI and DECaDE

The Surrey Institute for People-Centred AI (PAI), launched in November 2021, builds on the nearly 40-year history of CVSSP (Centre for Vision, Speech and Signal Processing), the UK’s leading AI machine perception research group. The Institute promotes a people-centric approach to AI development, starting with the problems that face humanity, societies and individuals, identifying how AI can be leveraged to solve these challenges. As a pan-University Institute, PAI combines strengths across Surrey’s research centres to foster interdisciplinary solutions to complex problems. Collaboration with industry, policymakers and other institutions is a core capability. Examples of current high-profile projects include the AI4ME Prosperity Partnership with the BBC, and the UKRI Centre for Doctoral Training in AI for Digital Media Inclusion.

DECaDE is the UKRI Centre for the Decentralised Digital Economy, a multidisciplinary research centre led by the University of Surrey in partnership with the University of Edinburgh and the Digital Catapult. DECaDE is funded 2020-2026 by the UKRI/EPSRC. DECaDE’s mission is to explore how decentralised platforms and data-centric technologies such as AI and Distributed Ledger Technology (DLT) can help create value in our future digital economy, in which everyone is a producer and consumer of digital goods and services. DECaDE studies these questions primarily through the lens of the creative industries, which have shifted from monolithic content producers to a decentralised model where individuals and smaller production houses also increasingly produce and consume content disseminated via online platforms. As a multidisciplinary academic research centre, DECaDE brings together technical expertise in AI, DLT and cyber security with business, law, and human factors/design. DECaDE co-creates its research with over 30 commercial partners, several of them in the creative sector (including Adobe and the BBC), and has worked extensively with the Cabinet Office and Scottish government.

Together we are delighted to respond to the UK Government’s consultation on AI and Copyright. N.B. DECaDE has also responded as an individual group to this consultation.

The UK context

The UK Government’s view, as set out in the AI Opportunities Plan, is that the AI revolution is radically changing how we live, work, and operate as a society and that it is incumbent on Government to work with partners in industry and academia to play a shaping role in where this future takes us.

The creative industries, one of the fastest growing sectors in the UK, have a critical role to play in the Government’s growth ambitions. The Copyright and AI consultation is an important part of the policy process to ensure that the UK’s creative IP, at an individual, collaborative and country level, is protected and valued as we seek to grasp the benefits that AI can bring.

Indeed, it is in the interests of future AI systems to protect human creativity. Without such protection, the incentives for humans to create content, which delights other human beings but may also be used to train ever more advanced AIs, decay away. Not only would this leave society poorer, but the decline in human-inspired content would leave less material for training next generation AIs, which, combined with the rapidly growing volume of AI-generated content that is shared on the internet, may lead to “model collapse” as AIs are increasingly trained on their own data. This would lead to the eventual failure or plateauing of AI capabilities. In other words, we need to preserve the value of human content creation to support the future capabilities of AI.

The UK is well placed to play a leading role in shaping the future developments of AI. Stability and assurance are core components. Investors are attracted by business environments with good stable markets, protected by disciplined and well-tested legal systems, and access to high quality talent, all of which the UK has. Emerging AI and copyright tensions will need to be managed in a way that supports our ability to offer clarity and stability.

UK wealth and economic growth do not necessarily stem from “Big Tech” AI platforms like OpenAI, but rather from the application of AI technologies in traditional industries, including the creative sector and extending to areas as diverse as aerospace, manufacturing and healthcare. The UK’s advantage will not stem from a race to the bottom in terms of being permissive about use of data for AI, but from the creation of clear, consistent and well-implemented rules that guide the development and application of AI, ensuring that the UK economy and UK citizens are at the heart of future AI developments.

Recommendations

1. Support Data Mining Exception with Transparency

The UK should adopt Option 3, which offers a data mining exception allowing right holders to reserve their rights, supported by transparency measures. This approach would protect creators' rights while promoting large-scale data access essential for AI development, thus enhancing the UK's global competitiveness.

2. Introduce Granular Opt-Out Mechanisms

The government should implement a more nuanced opt-out system for creators. This would enable content owners to specify the types of AI processes from which they want to opt out, such as generative AI, while still permitting use for less intrusive purposes like content classification or search. Such granularity should not place unnecessary burdens on content creators in understanding or applying these mechanisms.

3. Adopt Standardised Machine-Readable Markers for Rights Reservation

A standardised, machine-readable system (like C2PA) should be introduced to indicate content usage restrictions, ensuring that developers can easily identify whether data is off-limits for AI training. This would facilitate widespread, frictionless compliance and protect creators' rights across platforms.

4. Ensure Transparency and Due Diligence in AI Training

The system should require AI developers to take responsibility for respecting creators' opt-out reservations, including incorporating transparent metadata and performing due diligence. While it should not be expected that developers search the entire internet for opt-out content, they should ensure their systems handle the metadata appropriately.

5. Consider Retraining AI Models for Infringement

In cases where a rights reservation is ignored, legal remedies should be expanded to include the requirement for AI developers to retrain their models without infringing content.

6. Encourage the Adoption of Open, Patent-Free Standards for Rights Reservation

The UK government should endorse open standards, like C2PA and TDMRep, for rights reservation and provenance metadata. These standards will allow for seamless interoperability across industries while respecting intellectual property rights. By avoiding mandating a single proprietary approach, the government can foster innovation and flexibility in the rights management ecosystem, recognising that future AI developments may require such approaches to be adapted and updated.

7. Strengthen Legal Significance of Metadata and Rights Reservation

Compliance with metadata and rights reservation protocols should be legally significant. Platforms should be encouraged to avoid removing markers carrying AI labels, provenance and rights information (such as C2PA) unless removal is necessary to preserve individual or group safety and security. For particular verticals such as news websites, the government could introduce legislation to enforce the retention of metadata for AI-generated works to ensure transparency, accountability, and respect for creators’ rights, while allowing for necessary privacy controls. In other circumstances, including creative use cases, it may not always be desirable to implement AI-generated content labelling. Collaboration between government, academia and industry is necessary to further map this space and to raise welcome debate about whether non-compliant media should be blocked, acknowledging concerns over censorship and security.

8. Support Collective Licensing Systems for AI Data

The UK government should incentivise the use of Extended Collective Licensing (ECL) schemes, like those used in Nordic countries, to help AI developers and data owners navigate the complexities of obtaining content licenses. This would encourage fair compensation for creators while facilitating access to large datasets for AI training. Clear, transparent standards for participation and representation in CMOs (Collective Management Organizations) should be introduced to address any potential misuse of the system.

9. Clarify Copyright Protection for Computer-Generated Works (CGWs)

The UK should maintain its current protection for CGWs, ensuring that AI-generated works have copyright protection. Clear legislative guidance should be provided to prevent ambiguity about the originality requirement for CGWs. This would provide legal certainty to businesses and encourage investment in creative AI systems, ensuring that CGWs are appropriately recognised and protected.

Consultation responses

B. Copyright and AI

B.4 Policy Options

1. Do you agree that option 3 - a data mining exception which allows right holders to reserve their rights, supported by transparency measures - is most likely to meet the objectives set out above?

Yes

Comments:

Any amendments to copyright and IP protection should adopt the principle of protecting the work and livelihood of human artists and content creators; minimise the overhead of applying protections by the human artist or content creator; minimise the disputability (and associated costs) of infringements; maximise the economic growth potential for human artists, content creators and UK AI companies.

We believe that Option 3, on balance, offers a people-centred approach, upholding creators’ rights while facilitating the large-scale data access needed for AI development.

2. Which option do you prefer and why?

• Option 0: Copyright and related laws remain as they are

• Option 1: Strengthen copyright requiring licensing in all cases

• Option 2: A broad data mining exception

• Option 3: A data mining exception which allows right holders to reserve their rights, supported by transparency measures

We prefer Option 3 because it avoids the added friction that an opt-in or licensed-only model would impose on research activities, especially for academic and startup ventures. AI training at scale requires access to large and diverse datasets. If creators were required to opt in, or if restrictive licensing were the only route, many smaller research teams would be unable to clear rights sufficiently quickly or at scale. This would slow progress, reducing the competitiveness of UK-based academia. In addition, Option 3 aligns with existing EU legislation, i.e. the text and data mining (TDM) exception in Article 4 of the EU Copyright Directive. Taking a stance that is less permissive (such as Options 0 and 1 in the consultation) would make the UK less competitive than the EU for AI research and commercial activity.

C. Our Proposed Approach

C.1 Exception with rights reservation

3. Do you support the introduction of an exception along the lines outlined above?

Yes

4. If so, what aspects do you consider to be the most important? If not, what other approach do you propose and how would that achieve the intended balance of objectives?

The transparency and the granularity of the exception (opt-out), as well as the level of due diligence required by researchers, would be the most important.

Granularity: Allowing creators to specify the kinds of AI processes from which they want to opt out is important, and is a feature that is absent from the TDM exception within the EU Copyright Directive, which resembles the spirit of Option 3 but was written prior to the advent of Generative AI.

For example, a creator might be willing to have their content used for AI models that classify content, enable the search of content, and recommend content. However, they might be unwilling to have their content used to train a generative AI model, i.e. a model that creates derivative content from their content. Other creators might take a different view. It is our experience that creators would appreciate this level of granularity in opt-out. The only issue this raises is the clarity and consistency with which such granular options are described: care would need to be taken to ensure that the options are easily understood and do not place undue burden on the content creator.
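To make the idea of granular opt-out concrete, the following is a minimal Python sketch of how per-use reservations might be represented and checked. The usage classes, the `RightsReservation` type and the work identifier are all hypothetical and chosen for illustration; they are not taken from C2PA, TDMRep or any other standard.

```python
from dataclasses import dataclass, field
from enum import Enum


class AIUse(Enum):
    """Hypothetical usage classes; a real scheme would define these in a standard."""
    CLASSIFICATION = "classification"
    SEARCH = "search"
    RECOMMENDATION = "recommendation"
    GENERATIVE_TRAINING = "generative_training"


@dataclass(frozen=True)
class RightsReservation:
    """A creator's opt-out choices for one work (illustrative, not a real format)."""
    work_id: str
    reserved_uses: frozenset = field(default_factory=frozenset)

    def permits(self, use: AIUse) -> bool:
        # Under an opt-out model, any use not explicitly reserved is permitted.
        return use not in self.reserved_uses


# A creator who allows classification, search and recommendation,
# but reserves their rights against generative AI training.
reservation = RightsReservation(
    work_id="photo-001",
    reserved_uses=frozenset({AIUse.GENERATIVE_TRAINING}),
)

permitted = {use.value: reservation.permits(use) for use in AIUse}
```

Keeping the set of usage classes small and fixed is one way to limit the burden on creators, at the cost of less expressive choices.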

Transparency: It is important that standardised, machine-readable markers indicating the exception be adopted; otherwise, an interoperable mechanism cannot be implemented at a scale matching the volumes of data used by Generative AI. These standards must be open and transparent, so that any member of the public, creator or AI developer can readily establish the status of a data item without reliance on any third party or commercial gatekeeper such as a registry.

It is also important that standardised classes of usage of content are provided in the legislation so that mismatches between creators’ intentions and AI usage do not occur, and that further developments in AI do not render such measures unworkable. This will help ensure a common understanding of what the AI usage is.

Similarly, protection and enforcement measures need to be straightforward, easily implemented and well-policed, in order to gain credibility.

Due diligence: Data is often shared and replicated in such a way that any opt-out markers associated with it may be accidentally removed (‘stripped’) during distribution. For example, opt-out is being expressed in industry using the Coalition for Content Provenance and Authenticity (C2PA) metadata standard, also known as ‘Content Credentials’ [Adobe]. Various technical measures, such as Durable Content Credentials [NSA], can be implemented to mitigate, but not prevent, the stripping of C2PA metadata. It should be expected that developers act upon any opt-out indicators they receive within content, using major open standards such as Durable Content Credentials. But it should not be expected that developers seek out all possible copies of data available on the internet in order to determine the opt-out status of that data. Such checking could not be performed at sufficient scale or with sufficient accuracy using current technology.

References:

• [Adobe] Adobe Debuts Free Web App To Fight Misinformation And Protect Creators. Forbes Magazine. October 2024. https://www.forbes.com/sites/moorinsights/2024/10/16/adobe-debuts-free-…

• [NSA] Joint guidance on content credentials and strengthening multimedia integrity in the generative artificial intelligence era. January 2025. US National Security Agency. https://media.defense.gov/2025/Jan/29/2003634788/-1/-1/0/CSI-CONTENT-CR…

5. What influence, positive or negative, would the introduction of an exception along these lines have on you or your organisation? Please provide quantitative information where possible.

A well-specified opt-out exception would bolster confidence among our researchers, collaborators, and creative partners, as it clarifies the boundaries of lawful data usage in research.

6. What action should a developer take when a reservation has been applied to a copy of a work?

Developers should respect any clear, machine-readable markers indicating that a work is off-limits for AI training or analysis. At a minimum, they should honour any metadata attached to a data item (so-called ‘unit-level’ opt-out) that indicates the reservation using a widely adopted open standard capable of expressing it. They should also honour site-level opt-out instructions on websites (e.g., “no-scrape” flags), similar to ‘robots.txt’ in the context of search engine scraping.
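As an illustration of honouring a site-level instruction, the sketch below uses Python's standard-library `urllib.robotparser` to check whether a crawler may fetch a URL under a given set of ‘robots.txt’ rules. The rules, the crawler names (`ExampleAIBot`, `ExampleSearchBot`) and the URLs are made up for the example, and the rules are parsed from a string so that no network access is needed.

```python
from urllib.robotparser import RobotFileParser

# Example rules a site owner might publish; "ExampleAIBot" is a made-up crawler name.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def may_fetch(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# The AI crawler is excluded from the whole site; other crawlers only from /private/.
ai_allowed = may_fetch(ROBOTS_TXT, "ExampleAIBot", "https://example.org/gallery/img.jpg")
search_allowed = may_fetch(ROBOTS_TXT, "ExampleSearchBot", "https://example.org/gallery/img.jpg")
private_allowed = may_fetch(ROBOTS_TXT, "ExampleSearchBot", "https://example.org/private/x.jpg")
```

A check of this kind covers only the site-level signal; unit-level metadata attached to individual items would need to be read separately.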

7. What should be the legal consequences if a reservation is ignored?

Remedies for breaches of copyright are provided in existing law through financial penalties and would presumably be applied for breach of copyright when data is used in disregard of such a reservation. However, this disproportionately favours larger commercial operators, since it places them in a de facto licensing agreement for any content they wish, simply by paying the penalty. In the context of AI model training, it is worth considering an additional remedy of requiring the model developer to retrain the model with the infringing content removed. Otherwise, smaller operators such as academics or startups will be in a disadvantaged position, since they could not afford to ignore such a reservation.

8. Do you agree that rights should be reserved in machine-readable formats? Where possible, please indicate what you anticipate the cost of introducing and/or complying with a rights reservation in machine-readable format would be.

Yes. Large-scale AI development demands automated checks. Machine-readable metadata (e.g., in open standards like C2PA [C2PA]) enables frictionless compliance, fostering trust and clarity across multiple platforms. Setup costs vary, but since open standards are unpatented, the implementation overhead primarily involves updating workflows, software, and training developers—likely manageable relative to the benefits.

References:

[C2PA] Coalition for Content Provenance and Authenticity, Technical Specification v2.1. Publisher: Linux Foundation. Available at: https://c2pa.org.

C.2 Technical Standards

9. Is there a need for greater standardisation of rights reservation protocols?

Yes, but without prescribing one single standard in legislation. Multiple protocols exist (e.g., TDMRep [TDMRep], C2PA [C2PA]), and convergence to the latter is likely as cross-industry adoption grows. Government can accelerate adoption by endorsing open, patent-free standards that enable seamless interoperability, rather than mandating a single proprietary approach.

References:

• [TDMRep] TDM Reservation Protocol (TDMRep). W3C Community Report. February 2024. Available at: https://www.w3.org/community/reports/tdmrep/CG-FINAL-tdmrep-20240202/

• [C2PA] Coalition for Content Provenance and Authenticity, Technical Specification v2.1. Publisher: Linux Foundation. Available at: https://c2pa.org.

10. How can compliance with standards be encouraged?

Compliance can be encouraged by making adherence to metadata and rights-reservation markers legally significant, so that failure to observe them raises the risk of infringement claims. This could include measures to deter the removal of such markers. Currently many content platforms (including all social platforms) remove metadata of the kind that is likely to emerge as the dominant way of expressing unit-level opt-out (e.g. provenance metadata such as C2PA [C2PA]). Although platforms should be able to remove metadata to fulfil requirements around privacy and user choice, they should not routinely remove such metadata, and legislation would be a promising way to encourage compliance.

11. Should the government have a role in ensuring this and, if so, what should that be?

Yes. The government can champion best practices by embedding provenance metadata in its own releases, incentivizing platforms to retain such metadata, and providing clear guidance on compliance. Regulatory frameworks can explicitly penalize deliberate metadata stripping and incentivize preservation, fostering a culture of transparency and respect for creators’ preferences.

C.3 Contracts and Licensing

12. Does current practice relating to the licensing of copyright works for AI training meet the needs of creators and performers?

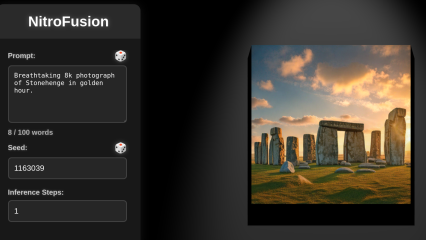

Current licensing norms are workable but could be improved. Many creators struggle with discovering and negotiating AI-related licences in a timely, cost-effective manner. Emerging provenance and payment tools (e.g., automated micropayments linked to model usage) could significantly enhance creator opportunities for fair compensation. For example, recent research [Balan 2023] at the University of Surrey-led DECaDE research centre has explored how images re-used to train Generative AI models may be linked to rights and payment information of their creators, in order to create value in new ways for the creator economy.

Nevertheless, a balance needs to be struck that supports the interests of creators on the one hand and AI developers on the other. Given the scale of training datasets, the cost of compensating all of the content creators would act as a disincentive to AI developers using UK data. Or, if compensation were affordable, the amount of money awarded to an individual creator would be vanishingly small. We have seen this take place in sectors like the music industry, where peer-to-peer file sharing disrupted traditional models of music distribution and was then regularised and legalised by the likes of Spotify, but at a cost to the content creator, to the extent that it is difficult to make money from music production and live venues are only economically viable at very large scale.

References:

• [Balan 2023] EKILA: Synthetic Media Provenance and Attribution for Generative Art. Balan et al. Proc. CVPR Workshop on Media Forensics. 2023.

14. Should measures be introduced to support good licensing practice?

Yes. Encouraging the use of robust provenance and rights management frameworks can lower transaction costs. By supporting new tools (e.g., automated rights registries, micropayment systems) and educating stakeholders, the government could help standardise fair licensing practices.

15. Should the government have a role in encouraging collective licensing and/or data aggregation services? If so, what role should it play?

Extended Collective Licensing (ECL) has been commonly used in the Nordic countries to address the high demand for data and the challenges around obtaining rightsholder consent. It was introduced in the UK in 2014, and the general practice allows rightsholders who are covered by the collective licensing to opt out if they do not want the licence to apply to their work.

Opting for a collective management organisation (CMO)-based opt-out system for data mining would allow for better decision-making that accurately represents all stakeholders, more flexible and market-driven policies, and greater engagement when designing licensing schemes. This could be achieved, provided the Intellectual Property Office (IPO) establishes clear and strict transparency measures. The risks of introducing an EU-style opt-out and an ECL-style opt-out are similar: mass opt-outs would undermine the purpose of the opt-out system. However, an ECL-driven opt-out structure would create a platform for negotiation and address challenges related to fair remuneration.

The digitalisation of industries and the rise of artificial intelligence present complex challenges, evolving business models, and shifting content needs. As these models change, the dynamics between stakeholders will also shift, requiring a renegotiation of existing frameworks. CMOs are well-positioned to manage these transitions and adapt to new challenges through the ECL.

ECL is a complex initiative, and government incentives should be provided to encourage CMOs to explore ECL schemes as an option.

Although the Soulier and Doke judgment no longer applies after Brexit, it is crucial that the guidance emphasises the importance of ensuring the CMO has effective methods in place to identify extended beneficiaries, so that licence revenues can be properly distributed to them.

The lack of clear standards for demonstrating evidence of representation may allow CMOs to determine what qualifies as "significant representation" without adequate oversight. This could lead to misleading claims about the true extent of a CMO's representativeness.

While CMOs are required to secure informed consent from their members, the policy offers flexibility in how this consent is obtained (e.g. through electronic surveys). These methods do not necessarily ensure that all members are adequately informed or fully understand the consequences of agreeing to the ECL scheme. Additionally, members whose works are not included in the scheme, but who may still be financially impacted, might not be adequately consulted.

The new 2025 ECL guidance policy permits CMOs to conceal the reasons why non-members opt not to participate in the scheme, even though these objections may indicate significant issues. This lack of transparency might lead to an ECL scheme being approved without thoroughly addressing the concerns of those outside the system.

The IPO should take measures to address these gaps by acting as an impartial observer of CMO discussions and assessing the quality of decision-making.

C.4 Transparency

17. Do you agree that AI developers should disclose the sources of their training material?

Although in academic research it is often desirable to disclose the source of training data, the University of Surrey recognises that full disclosure of training data may conflict with commercial confidentiality and trade secrets, particularly for spin-outs and larger enterprises.

The University of Surrey supports a system in which AI developers attest to having lawfully obtained and properly licensed data for AI training, including respecting any rights reservations. This approach strikes a balance between reproducibility, accountability and preserving the competitive edge that unique data selections may confer on AI models. There will be exceptions to this approach. Where an AI model is shared as open source, the details of the training datasets used to create it should be published alongside the model, although disclosure of the method by which the data was used to train the AI may not be required. Where AI models are used in safety critical applications, the datasets used to train the model and the architecture of the training approach should be made available, but perhaps not publicly. For example, there could be a role here for a trusted third party such as the UK’s AI Security Institute to act as a repository for records of the AI training data used for particular models.

18. If so, what level of granularity is sufficient and necessary for AI firms when providing transparency over the inputs to generative models?

A practical model would emphasize disclosing the overall nature and licensing status of the training data rather than every individual source. Summaries of data provenance, along with clear indications of how opt-out requests were handled, could offer adequate transparency. Such summaries enable regulators or third parties to verify compliance without forcing developers to reveal proprietary details or confidential data, and might be enabled through open provenance standards such as C2PA. Exceptions may need to exist for safety critical applications and other special cases.
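One way such a summary might be produced is sketched below in Python. The record fields (`source_type`, `opted_out`, `used_in_training`) and the summary keys are assumptions made for illustration; they do not correspond to any existing reporting format or to the C2PA specification.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class TrainingItem:
    """Hypothetical per-item record kept by a developer during dataset assembly."""
    source_type: str        # e.g. "licensed", "public_domain", "web_crawl"
    opted_out: bool         # a rights reservation was found on this item
    used_in_training: bool  # the item ended up in the training set


def summarise(items: Iterable[TrainingItem]) -> dict:
    """Aggregate item records into a summary that names no individual source."""
    items = list(items)
    used = [item for item in items if item.used_in_training]
    return {
        "items_considered": len(items),
        "items_used_by_source": dict(Counter(item.source_type for item in used)),
        "opt_outs_found": sum(item.opted_out for item in items),
        "opt_outs_excluded": sum(
            item.opted_out and not item.used_in_training for item in items
        ),
    }


summary = summarise([
    TrainingItem("licensed", opted_out=False, used_in_training=True),
    TrainingItem("web_crawl", opted_out=True, used_in_training=False),
    TrainingItem("web_crawl", opted_out=False, used_in_training=True),
])
```

In this sketch, a mismatch between `opt_outs_found` and `opt_outs_excluded` would flag reserved items that were nevertheless used, which is the kind of signal a regulator or third party could check without seeing the underlying data.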

19. What transparency should be required in relation to web crawlers?

At a minimum, developers should respect site-level “no-scrape” flags. Tools or frameworks that reference open standards for expressing such flags (like the de facto standard ‘robots.txt’ or emerging ideas such as TDMRep) would help site owners set consistently respected usage permissions.
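As a sketch of how a crawler might read a TDMRep-style signal, the Python function below interprets HTTP response headers. The header names `tdm-reservation` and `tdm-policy` follow our reading of the TDMRep community report and should be checked against it; treating a missing header as "not reserved" is an assumption about the default under an opt-out regime, and the example URL is made up.

```python
from typing import Mapping, Optional, Tuple


def read_tdm_reservation(headers: Mapping[str, str]) -> Tuple[bool, Optional[str]]:
    """Interpret TDMRep-style response headers.

    Returns (reserved, policy_url). A missing or non-"1" header is treated as
    "not reserved", which is an assumption rather than a rule from the report.
    """
    # HTTP header names are case-insensitive, so normalise before lookup.
    normalised = {name.lower(): value.strip() for name, value in headers.items()}
    reserved = normalised.get("tdm-reservation") == "1"
    policy_url = normalised.get("tdm-policy") if reserved else None
    return reserved, policy_url


reserved, policy = read_tdm_reservation({
    "Content-Type": "image/jpeg",
    "TDM-Reservation": "1",
    "TDM-Policy": "https://example.org/policies/tdm.json",
})
unreserved, no_policy = read_tdm_reservation({"Content-Type": "text/html"})
```

A crawler that finds a reservation could skip the resource, or consult the linked policy to see whether licensing terms are offered.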

22. How can compliance with transparency requirements be encouraged, and does this require regulatory underpinning?

It is anticipated that existing regulatory powers would be sufficient to encourage transparency, if properly applied and policed by the regulator.

C.5 Wider clarification of copyright law

24. What steps can the government take to encourage AI developers to train their models in the UK and in accordance with UK law to ensure that the rights of right holders are respected?

Publishing a clear and consistent approach to AI regulation, at the earliest possible date, will attract AI developers on a global basis – uncertainty in regulatory approach is probably the biggest disincentive to training models in the UK. In addition, making available AI training datasets for various applications, ideally only useable in the UK, would also represent a significant attraction.

25. To what extent does the copyright status of AI models trained outside the UK require clarification to ensure fairness for AI developers and right holders?

This represents a significant challenge to both content creators and AI developers within the UK. Encouraging non-UK AI models to be compliant with UK copyright law, even if trained overseas, would go some way towards ensuring a level playing field.

C.6 Encouraging research and innovation

28. Does the existing data mining exception for non-commercial research remain fit for purpose?

The exception may have to be modified, depending on the adoption of some of the measures suggested herein. For example, introduction of granular opt-outs might include a “don’t use for research” flag which would have to be observed.

29. Should copyright rules relating to AI consider factors such as the purpose of an AI model, or the size of an AI firm?

Yes. Where applications relate to safety, security and privacy, the copyright rules may have to be amended appropriately.

D. AI Outputs

D.2 Policy Options

30. Are you in favour of maintaining current protection for computer-generated works? If yes, please explain whether and how you currently rely on this provision.

Yes, it is critical for the UK to maintain current protection for computer generated works (CGWs) for the benefit of the UK’s economy, AI ambitions, and international competitiveness.

CGWs increasingly have commercial value. Protection of these works with copyright is a vital incentive for people and businesses to invest in the development and use of creative AI systems. In turn, this benefits not only the UK’s creative industries, but the UK public by providing encouragement for the creation of new works, as well as the dissemination of those works.

CDPA 9(3) has not yet been terribly impactful in the UK, but that is because until very recently CGWs have not had meaningful commercial value. They are now starting to, and given the speed at which AI capabilities are improving and industry adoption is increasing, CGWs are going to have far greater value in 5 and 10 years from now. Copyright protection will be increasingly critical as AI systems continue to improve with respect to their capabilities to output CGWs, and as creative industries such as the film and music industries increasingly rely on the use of AI. In the meantime, to encourage the necessary investments in creative AI systems, there needs to be a legal framework today that provides adequate protection, as currently exists in the UK.

By contrast, absent such protection, CGWs may pass into the public domain in which case they cannot be effectively monetized, and thus there is minimal incentive to create them in the first place or invest in their dissemination.

For an extended review of the benefits of protecting CGWs, and other questions asked in this Section D1&2, please see the following research conducted at University of Surrey:

Ryan Abbott and Elizabeth Rothman, Disrupting Creativity: Copyright Law in the Age of Generative Artificial Intelligence, Florida Law Review (2023)

31. Do you have views on how the provision should be interpreted?

Please see the following research conducted at University of Surrey:

Ryan Abbott, Artificial Intelligence, Big Data and Intellectual Property: Protecting Computer-Generated Works in the United Kingdom, In RESEARCH HANDBOOK ON INTELLECTUAL PROPERTY AND DIGITAL TECHNOLOGIES (Tanya Aplin ed., 2020)

32. Would computer-generated works legislation benefit from greater legal clarity, for example to clarify the originality requirement? If so, how should it be clarified?

It may be helpful to clarify that the originality requirement is no bar to protection of CGWs in the UK. Dicta from European cases that describe originality in human-centric terms are not applicable to cases involving CGWs, because those cases did not involve CGWs. Moreover, the UK has a plain statutory framework for protecting CGWs in the form of CDPA 9(3) that such case law would not override. Parliament has clearly spoken on this issue. Unlike the UK, most European jurisdictions do not have explicit protection for CGWs.

33. Should other changes be made to the scope of computer-generated protection?

CGWs should be designated as CGWs rather than applying deemed authorship to the work’s producer. It is unfair for a person to take credit for work done by an AI system. It is not unfair to the AI, which has no interest in being acknowledged, but it is unfair to traditional authors to have their works equated to someone simply asking an AI system to generate a creative work.

35. Are you in favour of removing copyright protection for computer-generated works without a human author?

No, primarily for the reasons discussed in the answer to Q30 above.

In addition, if copyright protection is removed for CGWs, as a practical matter, individuals producing CGWs are likely to obscure the origin of these works.

36. What would be the economic impact of doing this? Please provide quantitative information where possible.

The economic impact would be to eliminate the most important financial incentive to create and disseminate new works. As a result, the UK creative industries would be disadvantaged compared to industries in other jurisdictions that do allow protection of computer-generated works.

D.6 Digital replicas and other issues

43. To what extent would the approach(es) outlined in the first part of this consultation, in relation to transparency and text and data mining, provide individuals with sufficient control over the use of their image and voice in AI outputs?

We believe that individuals should have strong rights to protect their biometrics, including visual appearance, voice, and other representations of their person. This might be a case where Option 1 should be applied, for example to data acquired through devices like Meta/Ray-Ban sunglasses, where your image might be captured by a device wearer without your knowledge or consent, shared with the company, and used for AI training.

D.7 Other emerging issues

45. Is the legal framework that applies to AI products that interact with copyright works at the point of inference clear? If it is not, what could the government do to make it clearer?

The EU legislation in place for copyright is not specific about the kinds of AI that data may be used for, or the specific action (training or inference) that it may be used for. This is problematic because it places in a grey area the potential use of an image in a digital editing tool, since such tools are trending toward the use of AI for routine image manipulations. Yet running inference on an image in such tools might be prohibited by an AI opt-out. Similarly, most content recommendation and search systems extract descriptors from content in order to make it discoverable. This is usually a net gain for creatives, yet might be legally questionable given a blanket TDM opt-out. This is one reason why the University believes greater specificity and granularity are required in opt-out legislation. Nevertheless, there is an inherent challenge: the rapid pace of change in the field of AI means that legislation is often catching up at a slower pace.

A question to consider here is whether the UK would forbid access to AI that had been trained on terms that we consider unacceptable to content creators.

46. What are the implications of the use of synthetic data to train AI models and how could this develop over time, and how should the government respond?

Using synthetic data for AI training can be of significant benefit in some application areas. However, synthetic data can also pose risks to model accuracy and reliability, especially when it is widely shared and inadvertently subsumed into other training datasets. This can lead to a phenomenon sometimes referred to as “model collapse,” which occurs when generative models progressively re-train on their own outputs. This may dilute originality and utility over successive training cycles, ultimately harming the quality and potential of AI systems. As more online content includes AI-generated material, safeguarding datasets against such inadvertent self-contamination becomes increasingly important. Provenance standards (e.g., C2PA [C2PA]) can help AI developers distinguish authentic from synthetic media and selectively curate training sets. Government guidance on metadata preservation and content labelling, coupled with support for open-source or industry-led registries, may help ensure the integrity of training data, thereby fostering more robust and trustworthy AI development in the UK.