Data

Creating machines that can understand images and videos

Our Data research theme addresses the application of AI to audio-visual information search, understanding and preservation, including visual recognition, distributed ledger technologies and the understanding of AI systems.

Research leads

Professor Miroslaw Bober

Professor of Video Processing

From information to knowledge

In our digital age, when we face a never-ending torrent of unstructured data, Albert Einstein’s famous remark, ‘Information is not knowledge,’ has never been more relevant. For machines to ‘think’ independently, or simply to support us in challenging decisions, we need to understand how humans extract knowledge, so that we can develop novel machine learning algorithms and architectures that mimic (and ultimately improve on) this process.

Visual recognition is a fundamental capability that humans take for granted, but one that computers still struggle to perform quickly and reliably. Yet it is indispensable in numerous application areas, including robotics, automation, healthcare, audio-visual (A/V) databases, security/CCTV and big data.

Despite huge R&D investment in visual recognition, there is still a wide gap between human and machine performance, as anyone who has tried to find images or videos of a specific object using a search engine such as Google will testify.

Professor Miroslaw Bober of CVSSP recently led the development of a novel deep Convolutional Neural Network (CNN) architecture called REMAP, which enables instantaneous recognition of large volumes (potentially billions) of objects in images and videos. The unique feature of this approach is that the objects of interest are known neither at the training stage nor when the database is analysed. Just as humans do, the system learns to recognise any object instantly from a single example image supplied at the query stage.
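The query-by-example idea can be sketched as follows. This is not REMAP itself: we simply assume, as is common in instance retrieval, that a CNN has already reduced each image to a fixed-length global descriptor, and we use hypothetical 3-D vectors in place of real CNN output. `DescriptorIndex` and its methods are illustrative names. Because the index only compares descriptors, nothing about the query object needs to be known in advance.

```python
import math


def l2_normalise(vec):
    """Scale a descriptor to unit length so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


class DescriptorIndex:
    """Hypothetical brute-force index of global image descriptors (illustrative only)."""

    def __init__(self):
        self.ids = []
        self.vectors = []

    def add(self, image_id, descriptor):
        # Descriptors are stored normalised so queries reduce to dot products.
        self.ids.append(image_id)
        self.vectors.append(l2_normalise(descriptor))

    def query(self, descriptor, top_k=5):
        """Return the top_k (image_id, cosine similarity) pairs for one example query."""
        q = l2_normalise(descriptor)
        scored = [
            (image_id, sum(a * b for a, b in zip(q, vec)))
            for image_id, vec in zip(self.ids, self.vectors)
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


# Hypothetical descriptors; a real system would compute them with a CNN.
index = DescriptorIndex()
index.add("eiffel_1", [0.9, 0.1, 0.0])
index.add("bridge_1", [0.0, 0.2, 0.9])
print(index.query([1.0, 0.0, 0.1], top_k=1))
```

At the scale of billions of images, the linear scan would be replaced by an approximate nearest-neighbour index; the similarity scoring stays the same.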

Visual recognition applied to the real world

Our Centre’s recognition technology has recently been deployed in a revolutionary ‘virtual journey assistant’ called iTravel, an app that provides end-to-end routing with proactive contextual information and supports real-time visual recognition of landmarks, buildings and locations through the smartphone’s camera. The app allows users to get a visual fix on their position and see an augmented view of their surroundings, which is particularly useful in built-up areas, where GPS errors frequently impair navigation.

Our recognition technology has also been commercialised to deliver content analysis for national broadcasters, and has been used by the Metropolitan Police to track criminals across CCTV images based on their clothing.

1st place in Google Landmark Retrieval Challenge

Our position at the forefront of image recognition was confirmed when we won first place among 218 teams from all over the world in the fiercely competitive Google Landmark Retrieval Challenge. Run on the Kaggle platform, the Challenge was to develop the world’s most accurate AI technology to automatically identify landmarks and retrieve relevant images from a database, with evaluation performed on a dataset of over a million photos.
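Retrieval challenges of this kind rank each team by how high the correct database images appear in its results. As an assumption (the scoring rule is not stated here), the sketch below uses mean average precision over the top k results (mAP@k, with k = 100), a common choice for landmark retrieval; the function names are hypothetical.

```python
def average_precision_at_k(ranked, relevant, k=100):
    """AP@k for one query: mean of precision values at each relevant hit in the top k."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    hits, score, seen = 0, 0.0, set()
    for rank, image_id in enumerate(ranked[:k], start=1):
        if image_id in relevant and image_id not in seen:
            seen.add(image_id)  # ignore duplicate predictions of the same image
            hits += 1
            score += hits / rank  # precision at this rank
    return score / min(len(relevant), k)


def mean_average_precision_at_k(results, ground_truth, k=100):
    """Average AP@k over all queries; results maps query id -> ranked image ids."""
    aps = [
        average_precision_at_k(results.get(q, []), rel, k)
        for q, rel in ground_truth.items()
    ]
    return sum(aps) / len(aps)


# Hypothetical example: relevant images found at ranks 1 and 3.
print(average_precision_at_k(["a", "x", "b"], {"a", "b"}))
```

For the example, the precision values at the two hits are 1/1 and 2/3, so AP is their mean, 5/6.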

Towards thinking machines – understanding video semantics

When we look at the world, our brains use thousands of categories, relations and concepts to interpret events and take decisions, combining visual and aural events with our prior knowledge of the world. We are developing ways for AI systems to understand the semantics of images, soundtracks and videos to improve communication and learning.

What can we learn from 55 years of watching videos? Can we understand what the video is about, why it was made and what it teaches us? Does it tell the truth, or is it fake news? Understanding the true semantics has the potential to touch all aspects of human life, from learning and communication to fun and play. It can be used to find required content, filter inappropriate material, detect fake news, or build up our knowledge of the world.

Yet understanding videos, utilising a rich vocabulary of sophisticated semantic terms, remains a challenging problem even for humans. One of the contributing factors is the complexity and ambiguity of the interrelations between linguistic terms and the actual audio-visual content of the video. For example, while a ‘travel’ video can depict any location with any accompanying sound, it is the intent of the producer or even the perception of the viewer that makes it a ‘travel’ video, as opposed to a ‘news’ or ‘real estate’ clip.

We have joined other universities in the US-UK MURI (Multidisciplinary University Research Initiative) collaboration, developing new fundamental principles and information theory that underpin effective ways to characterise ‘information semantics’.

Professor of Video Processing, Miroslaw Bober, explains: “Thanks to this type of research, we can rapidly analyse large volumes of multimedia content such as video, images or soundtracks, which makes it possible to quickly find content of interest, without the need for laborious manual annotation.”

CVSSP team wins gold medal in Google YouTube-8M Video Understanding Challenge

Our design of an AI system that learns to understand the story behind a video and give a short verbal summary of what it is about earned us a top-ten place out of 650 entries in this competition, organised by Google on its Kaggle platform.

We developed one of the world’s top-performing deep neural architectures, which combines audio, video and text information to perform semantic analysis of videos. The work is based on the YouTube-8M dataset of 7 million videos, corresponding to almost half a million hours of footage. This dataset contains over 2.6 billion audio-visual features and is annotated with a rich vocabulary of 4,716 semantic labels based on the Google Knowledge Graph.
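The basic shape of audio-visual label prediction can be sketched as below. This is a simple logistic baseline, not our competition architecture: it mean-pools per-frame visual and audio features, concatenates them (early fusion) and scores every label with an independent sigmoid, since one video can carry several labels. YouTube-8M supplies per-frame features (1024-D visual, 128-D audio); the tiny dimensions, weights and labels here are hypothetical.

```python
import math


def mean_pool(frames):
    """Average per-frame feature vectors into a single video-level vector."""
    n = len(frames)
    return [sum(frame[i] for frame in frames) / n for i in range(len(frames[0]))]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_labels(rgb_frames, audio_frames, weights, biases, labels, top_k=3):
    # Early fusion: concatenate pooled visual and audio features.
    video = mean_pool(rgb_frames) + mean_pool(audio_frames)
    # One independent sigmoid per label (multi-label, so no softmax).
    scores = [
        (label, sigmoid(sum(w * x for w, x in zip(w_row, video)) + b))
        for label, w_row, b in zip(labels, weights, biases)
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:top_k]


# Hypothetical toy video: 2 frames, 2-D visual and 1-D audio features.
rgb = [[1.0, 0.0], [0.0, 0.0]]
audio = [[1.0], [1.0]]
labels = ["Travel", "News"]
weights = [[2.0, 0.0, 1.0], [0.0, 2.0, -1.0]]  # one weight row per label
biases = [0.0, 0.0]
result = predict_labels(rgb, audio, weights, biases, labels, top_k=2)
print(result)
```

The top-scoring labels form the short verbal summary of the video; stronger models replace mean pooling with learned temporal aggregation.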

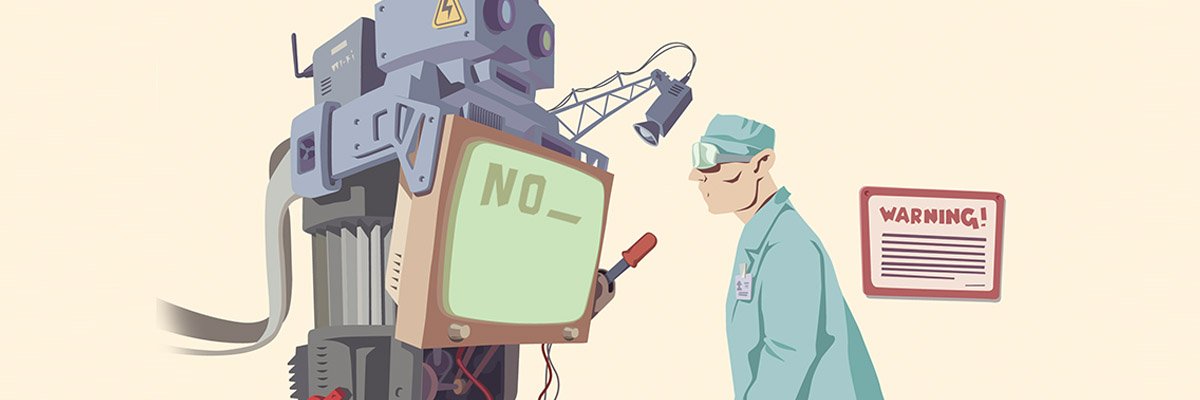

Understanding AI systems

As we deploy machine learning in the real world, it's important that we understand what algorithms are doing in order to avoid AI systems making the wrong decisions. We are at the forefront of research into both understanding how our algorithms make decisions and controlling what they learn.

The ability of models to adapt quickly to new conditions and changes in the environment plays a key role in the development of machine intelligence, but this becomes problematic in dynamic environments where, in the process of adapting, machines often lose track of previously learned skills (a phenomenon known as catastrophic forgetting).

“This is an inevitable problem when applying machine learning techniques to real-world data in domains such as healthcare,” explains Professor of Machine Intelligence Payam Barnaghi.

To combat this issue, researchers in CVSSP are developing a ‘probabilistic’ machine learning technique that preserves the high accuracy of deep neural networks in non-stationary environments, enabling them to learn different tasks without forgetting previously learned ones.
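To illustrate how a probabilistic view can limit forgetting, the sketch below uses a well-known published technique, Elastic Weight Consolidation (EWC, Kirkpatrick et al., 2017), not the CVSSP method. EWC treats the weights learned on an old task as a Gaussian prior whose precision is the diagonal Fisher information, so important weights are anchored strongly and unimportant ones stay free to adapt. Function names and numbers are hypothetical.

```python
def diagonal_fisher(per_sample_grads):
    """Estimate the diagonal Fisher information as the mean squared
    log-likelihood gradient over samples from the old task."""
    n = len(per_sample_grads)
    dim = len(per_sample_grads[0])
    return [sum(g[i] ** 2 for g in per_sample_grads) / n for i in range(dim)]


def ewc_penalty(params, old_params, fisher, lam):
    """Quadratic penalty pulling each weight towards its old value,
    scaled by how important that weight was for the old task."""
    return 0.5 * lam * sum(
        f * (p - p_old) ** 2 for p, p_old, f in zip(params, old_params, fisher)
    )


def continual_loss(new_task_loss, params, old_params, fisher, lam=1.0):
    """Total objective: new-task loss plus the EWC anchor to the old task."""
    return new_task_loss + ewc_penalty(params, old_params, fisher, lam)


# Hypothetical gradients: weight 0 mattered for the old task, weight 1 barely did.
fisher = diagonal_fisher([[3.0, 0.1], [1.0, -0.1]])
old = [1.0, 1.0]
print(ewc_penalty([1.0, 2.0], old, fisher, lam=1.0))  # moving the unimportant weight
print(ewc_penalty([2.0, 1.0], old, fisher, lam=1.0))  # moving the important weight
```

Moving the important weight by the same amount costs far more, which is what lets the network learn a new task without overwriting the old one.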

Another area currently being explored is how to control what a machine learning algorithm learns, so that systems do not make decisions based on biases present in their training data. For example, naïve algorithms trained on historical police stop-and-search data will inherit biases from how the police chose whom to stop.
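A first step towards controlling such bias is being able to measure it. The sketch below computes one simple, widely used fairness measure, the demographic parity gap: the difference in positive-decision rates between groups. It is an illustrative check, not the method used in our research, and the decisions and group labels are hypothetical.

```python
def positive_rates(decisions, groups):
    """Fraction of positive decisions (1 = e.g. 'stop this person') per group."""
    totals, positives = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions, groups):
    """Largest difference in positive-decision rate between any two groups."""
    rates = positive_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs for six people in two groups.
decisions = [1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "B", "B", "B"]
print(positive_rates(decisions, groups))
print(demographic_parity_gap(decisions, groups))
```

A gap well above zero signals that the model treats the groups differently; constraining it during training is one way of controlling what the model learns.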

In order to challenge an AI system’s decision, however, we first need to understand its decision-making process. New research led by Dr Chris Russell, Reader in Computer Vision and Machine Learning in CVSSP, aims to arm authorities and customers with this information.

Challenging ‘computer says no’

In the future, financial decisions such as whether you are approved for a mortgage may be made by algorithms that combine complex data such as your income, spending patterns and even your internet browsing history. The problem is that these algorithms may be so complex that even the people who devised them cannot explain why a particular decision was made.

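One possible solution is a ‘Counterfactual Explanation’: a statement of how your circumstances would have had to differ for the algorithm to reach a different decision, for example ‘had your income been higher by a given amount, your mortgage would have been approved’. It works as an ‘AI plugin’ that can analyse any existing algorithm, explain what the algorithm is doing, and show how you could change its decision.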

Our work alongside the Oxford Internet Institute has shown that these explanations can be found easily, using the same methods that train the complex algorithms. This is now part of one of the most widely used machine learning toolkits in the world, Google’s TensorFlow, and has been referenced in guidelines to GDPR, in a House of Commons Select Committee report, and in evidence to the US Senate.
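The idea that counterfactuals can be found with the same optimisation used for training can be sketched with gradient descent. We search for an input x′ that pushes the model’s output towards the desired decision while staying close to the original input, minimising λ(f(x′) − target)² + ‖x′ − x‖². Assumptions for this sketch: the ‘algorithm’ is a hypothetical two-feature logistic model, λ is fixed, and squared Euclidean distance stands in for the distance measure a real system would use.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Hypothetical black-box decision model: probability of approval."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def find_counterfactual(weights, bias, x, target=1.0, lam=10.0, lr=0.05, steps=500):
    """Gradient descent on lam * (f(x') - target)**2 + ||x' - x||**2."""
    x_cf = list(x)
    for _ in range(steps):
        f = predict(weights, bias, x_cf)
        # Chain rule through the sigmoid: d f / d z = f * (1 - f).
        scale = 2.0 * lam * (f - target) * f * (1.0 - f)
        # Gradient = prediction term + pull back towards the original input.
        grad = [scale * w + 2.0 * (xc - x0) for w, xc, x0 in zip(weights, x_cf, x)]
        x_cf = [xc - lr * g for xc, g in zip(x_cf, grad)]
    return x_cf


# Hypothetical standardised features: [income, existing debt].
weights = [2.0, -1.0]
bias = -1.0
applicant = [0.0, 1.0]
print(predict(weights, bias, applicant))  # below 0.5, so the application is refused
cf = find_counterfactual(weights, bias, applicant)
print(cf, predict(weights, bias, cf))
```

The returned point reads directly as an explanation: a somewhat higher income and lower debt would have flipped the decision. Practical versions also keep features within plausible ranges.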

AI for animal health

In many parts of the world, the poultry industry plays an important economic role, especially in low-income countries. An outbreak of disease can have a devastating impact, so early and effective detection of disease is critical.

Poultry can be affected by many viruses and bacteria at the same time, making it difficult to detect, and therefore treat, individual diseases. There is currently no effective diagnostic solution to limit the spread of diseases and to prevent pathogens entering the human food chain. Standard molecular tests are poorly suited to poorer countries: instruments are expensive, mobile instruments are rare, and lab results can take a long time, giving disease time to spread further.

We have secured funding from the BBSRC Newton Fund to develop a quick diagnostic system to identify bacterial and viral pathogens in poultry. Recent developments in isothermal amplification of DNA have enabled assays to be performed rapidly at the point of need with minimal equipment. This, combined with lab-on-a-chip technologies, mobile communication and miniaturised instrumentation, offers a solution, especially where access to affordable tests is limited.

The project has developed a diagnostic test consisting of a sample collection-and-preparation device and a small instrument that connects wirelessly to a smartphone. An app runs the test and displays the results, which are then sent to a central database and used for disease surveillance. The whole process takes less than an hour.

Dr Anil Fernando, Reader in Video Communication at CVSSP, says: “This will make a huge change to the poultry industry, specifically in low-income countries, and will help to generate wealth for the UK economy through its intellectual property rights. I am pleased to lead the Surrey team.”


Research projects

- InnovateUK: iTravel (2017–2019) – Surrey, Visual Atoms, iGeolise (£893k) “A Virtual Journey Assistant”

- InnovateUK: RetinaScan (2018–2020) – Surrey, Retinopathy Answer Ltd. (£993k) “RetinaScan: AI enabled automated image assessment system for diabetic retinopathy screening”

- H2020: BRIDGET (2014–2017) – Surrey, Huawei, RAI, Telecom Italia (£3.8m) “Bridging the gap for enhanced broadcast”