Professor Adrian Hilton FREng FIAPR FIET

About

Biography

In January 2012 I became Director of the Centre for Vision, Speech and Signal Processing (CVSSP) at the University of Surrey. CVSSP is one of the largest UK research groups in Audio-Visual Machine Perception with 170 researchers and a grant portfolio in excess of £31M. CVSSP research spans audio and video processing, computer vision, machine learning, spatial audio, 3D/4D video, medical image analysis and multimedia communication systems with strong industry collaboration. The centre has an outstanding track-record of pioneering research leading to successful technology transfer with UK industry.

The goal of my research is to develop seeing machines with the visual sense to understand and model dynamic real-world scenes. For example, measuring from video the biomechanics of an Olympic athlete performing a world-record high-jump.

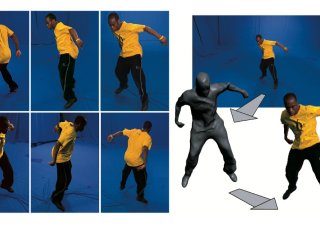

My research is pioneering the next generation of 4D computer vision, capable of sensing both 3D shape and motion, to enable seeing machines that can understand and model dynamic scenes. Recent research introduced 4D vision for analysis in sports, for instance, measurement of football players from live TV cameras. This technology has been used by the BBC in sports commentary to visualise the action from novel directions, such as the referee's or goalkeeper's view.

I have successfully commercialised technologies for 3D and 4D shape capture, exploited in entertainment, manufacture and health, receiving two EU IST Innovation Prizes, a Manufacturing Industry Achievement Award, and a Royal Society Industry Fellowship with Framestore on Digital Doubles for Film (2008-11). I am a Royal Society Wolfson Research Merit Award holder in 4D Vision (2013-18) and am currently PI on the S3A Programme Grant in Future Spatial Audio at Home, which combines audio and vision expertise, and on the InnovateUK project ALIVE, led by The Foundry, which is developing tools for 360-degree video reconstruction and editing.

I lead the Visual Media Research Lab (V-Lab) in CVSSP, which conducts research in video analysis, computer vision and graphics for next-generation communication and entertainment applications.

My research combines the fields of computer vision, machine learning, graphics and animation to investigate new methods for reconstruction, modelling and understanding of the real world from images and video. Applications include: sports analysis (soccer, rugby, athletics), 3D TV and film production, visual effects, character animation for games, digital doubles for film and facial animation for visual communication.

Current research is focused on video-based measurement in sports, multiple camera systems in film and TV production, and 3D video for highly realistic animation of people and faces. Research is conducted in collaboration with UK companies in the creative industries. From 2008-12 I was supported by a Royal Society Industry Fellowship to conduct research with leading visual-effects company Framestore investigating 4D technologies for Digital Doubles in film production.

Please contact me if you are interested in current PhD and post-doctoral research opportunities.

University roles and responsibilities

- Director, Centre for Vision, Speech and Signal Processing (CVSSP)

- Distinguished Professor of Computer Vision

- Head of the Visual Media Research Lab (V-Lab)

Research interests

I lead AI@Surrey, a cross-University initiative bringing together an interdisciplinary network of over 300 academic and research staff at Surrey active in AI-related research, from fundamental theory to practical applications of benefit to society. AI@Surrey spans almost every area of our research, from medical imaging to improve cancer detection and IoT sensor networks to support healthy ageing, to law and ethics for the regulation of autonomous decision-making systems. The University leads on both fundamental AI theory (pioneering new machine learning algorithms and understanding of black-box AI systems) and applications in healthcare, security, transport, education, entertainment, communication and scientific discovery.

My current research is focused on Audio-Visual Machine Perception to enable machines to understand and interact with dynamic real-world scenes. Machine Perception is the bridge between Artificial Intelligence and Sensing (seeing, hearing and other senses). This research brings together expertise in audio, vision, machine learning and AI to pioneer future intelligent sensing technologies in collaboration with UK industry.

Machine perception of people, and the everyday environments that we live and work in, is a key enabling technology for future intelligent machines. This will impact and benefit many aspects of our lives, from robot assistants able to work safely alongside people, personalised healthcare at home, improved safety and security, safe autonomous vehicles and automated animal welfare monitoring, through to improved social communication and new forms of immersive audio-visual entertainment. The central research challenge for all of these technologies is to enable machines to understand complex real-world dynamic scenes. Reliable Machine Perception of complex scenes requires the fusion of multiple sensing modalities with complementary information, such as seeing and hearing.

Towards this goal I lead research in the area of 4D Vision to understand and model dynamic scenes such as people. Over the past decade we have introduced world-leading technology for 4D dynamic scene capture and reconstruction from multiple view video. This technology underpins future machine perception and is also enabling creation of video-realistic content for film, TV and Virtual Reality.

Our research has pioneered several new technologies including:

- 4D Performance Capture and Animation: Volumetric capture of actor performance enabling video-realistic replay with real-time control of movement. This technology bridges the gap between real and computer-generated imagery of people. Current research is enabling the use of this technology in Virtual and Augmented Reality to create cinematic experiences.

- Real-time full-body motion capture outdoors: Our research has introduced methods for production-quality full-body motion capture in unconstrained outdoor environments (such as a film set) from video and inertial measurement units (IMUs). This technology is enabling content production for film and TV.

- Free-viewpoint video for sports TV production: Research in collaboration with the BBC has introduced technology to produce virtual camera views and 3D stereo of sports events from the broadcast TV cameras. This has been used in soccer and rugby to produce views such as the referee's viewpoint or a virtual view along the offside line.

- Video-rate 4D capture of facial shape and motion: The first video system to simultaneously capture facial shape and appearance, used to produce highly realistic animated face models of real people for games and communication. This technology is now used in medicine for analysis of facial shape, in security for face recognition and in the creative industries to create highly realistic digital doubles of actors.

- AvatarMe: A 3D photo-booth to capture realistic 3D animated models of people for games. This technology was used to produce animated models of over 300,000 members of the general public.

This research has received several awards for outstanding academic publications and technical innovation, and has enabled a number of award-winning commercial systems for industrial design, computer animation, broadcast production, games and visual communication.

Current research is pursuing video-realistic reproduction and behavioural modelling of dynamic scenes such as moving people, sports and other natural phenomena. Ultimately the challenge is to capture representations which produce the appearance and interactive behaviour of real people.

If you are interested in pursuing related PhD or post-doctoral research in vision, graphics or animation, please contact me for current vacancies.

Research projects

EPSRC Programme Grant (2013-2019)

Audio-Visual Media Research Platform: EPSRC Platform Grant (2017-2022) supporting research in audio-visual machine perception

InnovateUK - The Foundry and Figment Productions

InnovateUK - Numerion and The Imaginarium

EU H2020 - BrainStorm

Research collaborations

Collaborators on this research include companies from the film, broadcast, games, communication and consumer electronics industries:

- BBC

- BT

- Sony

- Philips

- Mitsubishi

- Framestore

- DNeg

- The Foundry

- Filmlight

- Hensons

- 3D Scanners

- Avatar-Me

- BUF

- QD

- Rebellion

- IKinema

Publications

In recent years, there has been growing interest in developing personalized human avatars for applications ranging from virtual reality and movie production to gaming and social telepresence. In the future, these avatars will be expected to also interact with everyday objects. Achieving this requires not only accurate human reconstruction, but also a joint understanding of surrounding objects and their interactions, making 3D human-object reconstruction key to its success. Existing methods that can jointly reconstruct 3D humans and objects from a single RGB image produce only coarse or template-based shapes, thus failing to capture realistic details in the reconstruction, such as loose clothing on the human body. In this work, for the first time, we propose an approach to jointly reconstruct 3D clothed humans and objects, given a monocular image of a human-object scene. At the core of our framework is a novel attention-based model that jointly learns an implicit function for the human and the object. Given a query point, our model utilizes pixel-aligned features from the input human-object image as well as from separate, non-occluded views of the human and the object as synthesized by a diffusion model. This allows the model to reason about human-object spatial relationships as well as to recover details from both visible and occluded regions, enabling realistic reconstruction. To guide the reconstruction, we condition the neural implicit model on human-object pose estimation priors. To support training and evaluation, we also introduce a synthetic human-object dataset. We demonstrate on real-world datasets that our approach significantly improves the perceptual quality of 3D human-object reconstruction.
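The abstract does not detail how the pixel-aligned features are obtained. A common formulation (popularised by PIFu) projects each 3D query point into the image and bilinearly samples a 2D feature map at the projected location. The sketch below shows only that sampling step; the `project` camera function is hypothetical (orthographic in the usage example), and it omits the attention model, the diffusion-synthesised views and the pose priors described above.

```python
import numpy as np


def bilinear_sample(features, uv):
    """Sample a (C, H, W) feature map at continuous pixel coordinates uv of shape (N, 2) = (x, y)."""
    c, h, w = features.shape
    x = np.clip(uv[:, 0], 0.0, w - 1.0)
    y = np.clip(uv[:, 1], 0.0, h - 1.0)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    wx, wy = x - x0, y - y0
    # Interpolate along x on the two neighbouring rows, then along y.
    top = features[:, y0, x0] * (1 - wx) + features[:, y0, x1] * wx
    bottom = features[:, y1, x0] * (1 - wx) + features[:, y1, x1] * wx
    return (top * (1 - wy) + bottom * wy).T          # (N, C)


def pixel_aligned_features(points, features, project):
    """Concatenate each 3D point's sampled image feature with its depth.

    `project` is a hypothetical camera function mapping (N, 3) points to
    ((N, 2) pixel coordinates, (N,) depths).
    """
    uv, depth = project(points)
    return np.concatenate([bilinear_sample(features, uv), depth[:, None]], axis=1)


def ortho(p):
    """Hypothetical orthographic camera: pixel = (x, y), depth = z."""
    return p[:, :2], p[:, 2]


feats = np.arange(24, dtype=float).reshape(2, 3, 4)  # C=2, H=3, W=4 toy feature map
pts = np.array([[1.5, 1.0, 0.3]])
print(pixel_aligned_features(pts, feats, ortho))
```

The per-point vectors produced this way would then be fed to an implicit-function network that predicts occupancy or a signed distance for each query point.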

We present a novel framework for scene representation and rendering that combines 3D Gaussian splatting with dual spherical harmonics decomposition to enable high-quality novel view synthesis under arbitrary lighting conditions. Our key innovation lies in separately modeling diffuse and specular reflection components using distinct directional parameterizations: view direction for diffuse components and reflection direction for specular components. This dual spherical harmonic (SH) decomposition enables realistic rendering of reflective surfaces while maintaining the computational efficiency of Gaussian splatting. To address geometric fidelity challenges, our method incorporates a comprehensive optimization strategy including silhouette-guided geometric constraints for sharp opacity boundaries, HDR saturation loss for handling bright regions in high dynamic range environments, and multi-faceted normal vector optimization with photometric and physics-based supervision. Experimental validation on the DiLiGenT-MV benchmark demonstrates superior performance compared to existing methods, with significant improvements in PSNR, SSIM, and LPIPS metrics while achieving fast optimisation and real-time rendering performance suitable for interactive applications.
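A minimal sketch of the dual SH idea, under stated assumptions: degree-1 real spherical harmonics only (the original 3DGS uses up to degree 3), one (4, 3) RGB coefficient array per component, and `view_dir` pointing from the surface towards the camera. The diffuse term is evaluated at the view direction and the specular term at the mirror reflection of the view direction about the normal, r = 2(n·v)n - v. Function names and sign conventions are illustrative, not the paper's implementation.

```python
import numpy as np

# Real spherical-harmonic constants for bands l = 0 and l = 1 (one common convention).
C0 = 0.28209479177387814   # 1 / (2 * sqrt(pi))
C1 = 0.4886025119029199    # sqrt(3 / (4 * pi))


def sh_basis_deg1(d):
    """Evaluate the four real SH basis functions (l <= 1) at unit direction d."""
    x, y, z = d
    return np.array([C0, C1 * y, C1 * z, C1 * x])


def reflect(view_dir, normal):
    """Mirror the direction towards the camera about the surface normal: r = 2(n.v)n - v."""
    n = normal / np.linalg.norm(normal)
    v = view_dir / np.linalg.norm(view_dir)
    return 2.0 * np.dot(n, v) * n - v


def shade(view_dir, normal, k_diffuse, k_specular):
    """Diffuse SH evaluated at the view direction plus specular SH at the reflection direction.

    k_diffuse, k_specular: arrays of shape (4, 3), one RGB coefficient per basis function.
    """
    v = view_dir / np.linalg.norm(view_dir)
    r = reflect(v, normal)
    return sh_basis_deg1(v) @ k_diffuse + sh_basis_deg1(r) @ k_specular


normal = np.array([0.0, 0.0, 1.0])
view = np.array([1.0, 0.0, 1.0])
print(reflect(view, normal))   # mirror direction, approximately (-0.707, 0, 0.707)
```

Keeping the two coefficient sets separate lets view-dependent highlights follow the reflection direction, while the diffuse term stays smooth in the view direction.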

The field of Novel View Synthesis has been revolutionized by 3D Gaussian Splatting (3DGS), which enables high-quality scene reconstruction that can be rendered in real-time. 3DGS-based techniques typically suffer from high GPU memory and disk storage requirements, which limit their practical application on consumer-grade devices. We propose Opti3DGS, a novel frequency-modulated coarse-to-fine optimization framework that aims to minimize the number of Gaussian primitives used to represent a scene, thus reducing memory and storage demands. Opti3DGS leverages image frequency modulation, initially enforcing a coarse scene representation and progressively refining it by modulating frequency details in the training images. On the baseline 3DGS [Kerbl et al. 2023], we demonstrate an average reduction of 62% in Gaussians, a 40% reduction in the training GPU memory requirements and a 20% reduction in optimization time without sacrificing the visual quality. Furthermore, we show that our method integrates seamlessly with many 3DGS-based techniques [Fang and Wang 2024; Girish et al. 2024; Lee et al. 2024; Mallick et al. 2024; Papantonakis et al. 2024], consistently reducing the number of Gaussian primitives while maintaining, and often improving, visual quality.
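The abstract does not give Opti3DGS's modulation schedule. As a hypothetical illustration of frequency-modulated coarse-to-fine training in general, the sketch below low-pass filters a training image with an ideal radial mask in the Fourier domain and raises the cutoff linearly over training, so early iterations see only coarse structure. The function names, the ideal (box) filter and the linear schedule are all assumptions.

```python
import numpy as np


def low_pass(image, cutoff):
    """Keep spatial frequencies of an H x W (or H x W x C) image whose normalised radius <= cutoff.

    Frequencies are normalised so that 1.0 is the Nyquist limit along each axis;
    the corners of the spectrum (radius up to sqrt(2)) are kept only when cutoff >= sqrt(2).
    """
    h, w = image.shape[:2]
    fy = np.fft.fftfreq(h)[:, None] / 0.5      # normalised to [-1, 1)
    fx = np.fft.fftfreq(w)[None, :] / 0.5
    mask = np.sqrt(fx ** 2 + fy ** 2) <= cutoff
    if image.ndim == 3:
        mask = mask[:, :, None]                 # broadcast over colour channels
    spectrum = np.fft.fft2(image, axes=(0, 1))
    return np.real(np.fft.ifft2(spectrum * mask, axes=(0, 1)))


def cutoff_schedule(step, total_steps, start=0.1, end=np.sqrt(2.0)):
    """Hypothetical linear schedule raising the cutoff from `start` to `end` over training."""
    t = min(max(step / total_steps, 0.0), 1.0)
    return start + t * (end - start)


rng = np.random.default_rng(0)
image = rng.random((64, 64, 3))                 # stand-in for a training image
for step in (0, 500, 1000):
    target = low_pass(image, cutoff_schedule(step, total_steps=1000))
    # ... a 3DGS optimiser would compute its photometric loss against `target` here ...
```

At the final step the cutoff covers the whole spectrum, so the loss is computed against the unfiltered image.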

Leveraging machine learning techniques, in the context of object-based media production, could enable provision of personalized media experiences to diverse audiences. To fine-tune and evaluate techniques for personalization applications, as well as more broadly, datasets which bridge the gap between research and production are needed. We introduce and publicly release such a dataset, themed around a UK weather forecast and shot against a blue-screen background, of three professional actors/presenters – one male and one female (English) and one female (British Sign Language). Scenes include both production and research-oriented examples, with a range of dialogue, motions, and actions. Capture techniques consisted of a synchronized 4K resolution 16-camera array, production-typical microphones plus professional audio mix, a 16-channel microphone array with collocated Grasshopper3 camera, and a photogrammetry array. We demonstrate applications relevant to virtual production and creation of personalized media including neural radiance fields, shadow casting, action/event detection, speaker source tracking and video captioning.

Recent illumination estimation methods have focused on enhancing the resolution and improving the quality and diversity of the generated textures. However, few have explored tailoring the neural network architecture to the Equirectangular Panorama (ERP) format utilised in image-based lighting. Consequently, the resulting high dynamic range images (HDRIs) usually exhibit a seam at the side borders, and textures or objects are warped at the poles. To address this shortcoming we propose a novel architecture, 360U-Former, based on a U-Net style Vision-Transformer which leverages the work of PanoSWIN, an adapted shifted window attention tailored to the ERP format. To the best of our knowledge, this is the first purely Vision-Transformer model used in the field of illumination estimation. We train 360U-Former as a GAN to generate HDRI from a limited field of view low dynamic range image (LDRI). We evaluate our method using current illumination estimation evaluation protocols and datasets, demonstrating that our approach outperforms existing and state-of-the-art methods without the artefacts typically associated with the use of the ERP format.

A common problem in the 4D reconstruction of people from multi-view video is the quality of the captured dynamic texture appearance, which depends on both the camera resolution and the capture volume. Typically, the requirement to frame cameras to capture the volume of a dynamic performance (> 50 m³) results in the person occupying only a small proportion (< 10%) of the field of view. Even with ultra-high-definition 4k video acquisition, this samples the person at less than standard-definition (0.5k) resolution, resulting in low-quality rendering. In this paper we propose a solution to this problem through super-resolution appearance transfer from a static high-resolution appearance capture rig, using digital stills cameras (> 8k) to capture the person in a small volume (< 8 m³). A pipeline is proposed for super-resolution appearance transfer from high-resolution static capture to dynamic video performance capture to produce super-resolution dynamic textures. This addresses two key problems: colour mapping between different camera systems; and dynamic texture map super-resolution using a learnt model. Comparative evaluation demonstrates a significant qualitative and quantitative improvement in rendering the 4D performance capture with super-resolution dynamic texture appearance. The proposed approach reproduces the high-resolution detail of the static capture whilst maintaining the appearance dynamics of the captured video.
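As a rough worked check of the sampling argument, assume (this is not stated in the abstract) that "< 10%" refers to the person's share of the frame width and that 4k UHD means 3840 horizontal pixels:

$$0.10 \times 3840\ \text{px} = 384\ \text{px} < 0.5\text{k}\ \text{px}$$

Reading the 10% as a share of image area would instead give roughly $\sqrt{0.10} \times 3840 \approx 1214$ px, so the quoted 0.5k figure matches the linear reading.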

In immersive and interactive audio-visual content, there is very significant scope for spatial misalignment between the two main modalities. So, in productions that have both 3D video and spatial audio, the positioning of sound sources relative to the visual display requires careful attention. This may be achieved with object-based audio, which also allows the producer to maintain control over individual elements within the mix. Yet each object's metadata is needed to define its position over time. In the present study, audio-visual studio recordings were made of short scenes representing three genres: drama, sport and music. Foreground video was captured by a light-field camera array, which incorporated a microphone array, alongside more conventional sound recording by spot microphones and a first-order ambisonic room microphone. In the music scenes, a direct feed from the guitar pickup was also recorded. Video data was analysed to form a 3D reconstruction of the scenes, and human figure detection was applied to the 2D frames of the central camera. Visual estimates of the sound source positions were used to provide ground truth. Position metadata were encoded within audio definition model (ADM) format audio files, suitable for standard object-based rendering. The steered response power with phase transform (SRP-PHAT) of the acoustic signals at the microphone array was used to determine the dominant source position(s) at any time, and these estimates were given as input to a Sequential Monte Carlo Probability Hypothesis Density (SMC-PHD) tracker. The output of this was evaluated in relation to the ground truth. Results indicate a hierarchy of accuracy in azimuth, elevation and range, in accordance with human spatial auditory perception. Azimuth errors were within the tolerance bounds reported by studies of the Ventriloquism Effect, giving an initial promising indication that such an approach may open the door to object-based production for live events.
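The SRP-PHAT stage can be illustrated with a minimal frequency-domain sketch. It assumes a single far-field source in the plane of a small planar array, free-field propagation and a speed of sound of 343 m/s; the 6-microphone circular geometry, sample rate and function names are hypothetical and are not the array or processing used in the study, and no tracker is included. Each microphone pair's cross-spectrum is whitened (the phase transform) and steered to the time differences of arrival predicted for each candidate azimuth; the azimuth with the largest summed power is taken as the source direction.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def srp_phat_azimuth(signals, mic_xy, fs, azimuths_deg):
    """Return the SRP-PHAT power for each candidate azimuth.

    signals: (M, N) array, one row per microphone.
    mic_xy:  (M, 2) microphone positions in metres (planar array).
    Assumes a single far-field source in the array plane.
    """
    m, n = signals.shape
    spectra = np.fft.rfft(signals, axis=1)                     # (M, K)
    omega = 2.0 * np.pi * np.fft.rfftfreq(n, d=1.0 / fs)       # (K,) rad/s
    theta = np.deg2rad(np.asarray(azimuths_deg, dtype=float))
    unit = np.stack([np.cos(theta), np.sin(theta)], axis=1)    # (A, 2), pointing at the source
    arrival = -(unit @ mic_xy.T) / SPEED_OF_SOUND              # (A, M) relative arrival times
    power = np.zeros(len(theta))
    for i in range(m):
        for j in range(i + 1, m):
            cross = spectra[i] * np.conj(spectra[j])
            cross /= np.abs(cross) + 1e-12                     # PHAT: keep phase only
            tau = arrival[:, i] - arrival[:, j]                # (A,) expected TDOA per azimuth
            steer = np.exp(1j * np.outer(tau, omega))          # (A, K) undo the expected delay
            power += np.real(steer @ cross)
    return power


def simulate_plane_wave(source, mic_xy, fs, azimuth_deg):
    """Delay one source signal to each microphone using exact (circular) frequency-domain shifts."""
    n = source.shape[0]
    theta = np.deg2rad(azimuth_deg)
    unit = np.array([np.cos(theta), np.sin(theta)])
    arrival = -(mic_xy @ unit) / SPEED_OF_SOUND
    omega = 2.0 * np.pi * np.fft.rfftfreq(n, d=1.0 / fs)
    spectrum = np.fft.rfft(source)
    return np.stack([np.fft.irfft(spectrum * np.exp(-1j * omega * t), n=n) for t in arrival])


rng = np.random.default_rng(0)
ring = np.arange(6) * np.pi / 3
mics = 0.05 * np.stack([np.cos(ring), np.sin(ring)], axis=1)   # hypothetical 6-mic ring, 5 cm radius
fs = 16000
x = simulate_plane_wave(rng.standard_normal(4096), mics, fs, azimuth_deg=60.0)
grid = np.arange(360)
power = srp_phat_azimuth(x, mics, fs, grid)
print("estimated azimuth:", grid[np.argmax(power)])
```

In a real pipeline the power map would be computed per short time frame, and its peaks passed to a multi-target tracker such as the SMC-PHD filter mentioned above.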

In the multi-view domain, it is challenging to correctly label multiple people across viewpoints because of occlusions, visual ambiguities, appearance variation, etc. Deep learning, although having witnessed remarkable success in computer vision tasks, still remains underexplored for the multi-view labelling task, due to the lack of labelled multi-view datasets. In this paper, we propose a novel end-to-end deep neural network named Multi-View Labelling network (MVL-net) that addresses this issue. To overcome the dataset shortage, a large-scale multi-view dataset is generated by combining 3D human models and panoramic backgrounds, along with human poses and realistic rendering. In the proposed MVL-net, we first incorporate Transformer blocks to capture the non-local information for multi-view feature extraction. A matching net is then introduced to achieve multiple people labelling, by predicting matching confidence scores for pairwise instances from two views, thus addressing the problem of the unknown number of people when labelling across views. An additional geometry feature obtained from the epipolar geometry is integrated to leverage multi-view cues during training. To the best of our knowledge, the MVL-net is the first work using deep learning to train a multi-view labelling network. Comprehensive experiments on both synthetic and real-world datasets demonstrate the effectiveness of the proposed method, which outperforms the existing state-of-the-art approaches.
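The abstract does not say how the pairwise matching confidences become final labels. One simple possibility, shown here as a hypothetical post-processing step rather than MVL-net's actual procedure, is greedy thresholded matching. Pairs are accepted in order of decreasing confidence, each instance is used at most once, and pairs below a threshold are never accepted. The number of people therefore does not need to be known in advance.

```python
def match_instances(confidence, threshold=0.5):
    """Greedily pair instances across two views from a matching-confidence matrix.

    confidence[a][b] is the predicted probability that instance a in view 1 and
    instance b in view 2 are the same person. Pairs below `threshold` stay
    unmatched. Returns a sorted list of (a, b) index pairs.
    """
    candidates = sorted(
        ((score, a, b)
         for a, row in enumerate(confidence)
         for b, score in enumerate(row)
         if score >= threshold),
        reverse=True,                       # most confident pairs first
    )
    used_a, used_b, pairs = set(), set(), []
    for _, a, b in candidates:
        if a not in used_a and b not in used_b:
            pairs.append((a, b))
            used_a.add(a)
            used_b.add(b)
    return sorted(pairs)


conf = [[0.9, 0.1, 0.2],
        [0.3, 0.2, 0.8],
        [0.1, 0.4, 0.3]]                    # instance 2 in view 1 has no confident partner
print(match_instances(conf))                # → [(0, 0), (1, 2)]
```

Greedy matching is not globally optimal; an alternative is an optimal one-to-one assignment (for example with `scipy.optimize.linear_sum_assignment`) followed by the same threshold test.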

We present a novel framework to reconstruct complete 3D human shapes from a given target image by leveraging monocular unconstrained images. The objective of this work is to reproduce high-quality details in regions of the reconstructed human body that are not visible in the input target. The proposed methodology addresses the limitations of existing approaches for reconstructing 3D human shapes from a single image, which cannot reproduce shape details in occluded body regions. The missing information of the monocular input can be recovered by using multiple views captured from multiple cameras. However, multi-view reconstruction methods necessitate accurately calibrated and registered images, which can be challenging to obtain in real-world scenarios. Given a target RGB image and a collection of multiple uncalibrated and unregistered images of the same individual, acquired using a single camera, we propose a novel framework to generate complete 3D human shapes. We introduce a novel module to generate 2D multi-view normal maps of the person registered with the target input image. The module consists of body part-based reference selection and body part-based registration. The generated 2D normal maps are then processed by a multi-view attention-based neural implicit model that estimates an implicit representation of the 3D shape, ensuring the reproduction of details in both observed and occluded regions. Extensive experiments demonstrate that the proposed approach estimates higher quality details in the non-visible regions of the 3D clothed human shapes compared to related methods, without using parametric models.

Weakly supervised audio-visual video parsing (AVVP) methods aim to detect audible-only, visible-only, and audible-visible events using only video-level labels. Existing approaches tackle this by leveraging unimodal and cross-modal contexts. However, we argue that while cross-modal learning is beneficial for detecting audible-visible events, in the weakly supervised scenario, it negatively impacts unaligned audible or visible events by introducing irrelevant modality information. In this paper, we propose CoLeaF, a novel learning framework that optimizes the integration of cross-modal context in the embedding space such that the network explicitly learns to combine cross-modal information for audible-visible events while filtering them out for unaligned events. Additionally, as videos often involve complex class relationships, modelling them improves performance. However, this introduces extra computational costs into the network. Our framework is designed to leverage cross-class relationships during training without incurring additional computations at inference. Furthermore, we propose new metrics to better evaluate a method’s capabilities in performing AVVP. Our extensive experiments demonstrate that CoLeaF significantly improves the state-of-the-art results by an average of 1.9% and 2.4% F-score on the LLP and UnAV-100 datasets, respectively.

About 1.7 million new cases of breast cancer were estimated by the World Health Organization (WHO) in 2012, accounting for 23 percent of all female cancers. In the UK, 33 percent of women aged 50 and above were diagnosed in the same year, positioning the UK sixth highest for breast cancer among European countries. The UK national screening programme has focused on early detection, aiming to improve prognosis through timely intervention and so extend patients' life span. To this end, the National Health Service Breast Screening Programme (NHSBSP) employs 2D planar mammography, which is considered the gold-standard technique for early breast cancer detection worldwide. Breast tomosynthesis has shown great promise as an alternative method for removing the intrinsic overlying clutter seen in conventional 2D imaging. However, preliminary work in breast CT has identified a number of compelling advantages that motivate the work in this thesis. These include removing the need to mechanically compress the breast, which is a cause of non-attendance at screening, and providing cross-sectional images that remove almost all of the overlying clutter seen in 2D. This renders lesions more visible and hence aids early detection of malignancy. However, work in breast CT to date has focused on scaled-down versions of standard clinical CT systems. By contrast, this thesis proposes a photon-counting approach, investigating photon-counting detector technology and comparing it with conventional CT in terms of contrast visualization. Simulation results presented in this thesis demonstrate the ability of photon-counting detector technology to utilize the data in a polychromatic beam, in which contrast is seen to decrease with increasing photon energy, in comparison with the conventional approach of standard clinical CT systems.

This paper proposes to learn self-shadowing on full-body, clothed human postures from monocular colour image input, by supervising a deep neural model. The proposed approach implicitly learns the articulated body shape in order to generate self-shadow maps without seeking to reconstruct explicitly or estimate parametric 3D body geometry. Furthermore, it is generalisable to different people without per-subject pre-training, and has fast inference timings. The proposed neural model is trained on self-shadow maps rendered from 3D scans of real people for various light directions. Inference of shadow maps for a given illumination is performed from only 2D image input. Quantitative and qualitative experiments demonstrate comparable results to the state of the art whilst being monocular and achieving a considerably faster inference time. We provide ablations of our methodology and further show how the inferred self-shadow maps can benefit monocular full-body human relighting.

To overcome the shortage of real-world multi-view multiple-people datasets, we introduce a new synthetic multi-view multiple-people labelling dataset named Multi-View 3D Humans (MV3DHumans). This dataset is a large-scale synthetic image dataset that was generated for multi-view multiple-people detection, labelling and segmentation tasks. The MV3DHumans dataset contains 1200 scenes captured by multiple cameras, with 4, 6, 8 or 10 people in each scene. Each scene is captured by 16 cameras with overlapping fields of view. The MV3DHumans dataset provides RGB images with a resolution of 640 × 480. Ground truth annotations, including bounding boxes, instance masks and multi-view correspondences, as well as camera calibrations, are provided in the dataset.

This landing page contains the datasets presented in the paper "Finite Aperture Stereo". The datasets are intended for defocus-based 3D reconstruction and analysis. Each download link contains images of a static scene, captured from multiple viewpoints and with different focus settings. The captured objects exhibit a range of reflectance properties and are physically small in scale. Calibration images are also available. A CC BY-NC licence applies: the data may be used for non-commercial research purposes only. Acknowledgement must be given to the original authors by citing the dataset DOI, the dataset web address and the aforementioned publication. Redistribution of this data is prohibited. Before downloading, you must agree to these conditions as presented on the dataset webpage.

3DVHshadow contains images of diverse synthetic humans, generated to evaluate the performance of hard cast-shadow algorithms for humans. Each dataset entry includes (a) a rendering of the subject from the camera viewpoint, (b) its binary segmentation mask, and (c) its binary cast-shadow mask on a planar surface: three images in total. The respective rendering metadata, such as the point light source position, camera pose and camera calibration, is also provided alongside the images. Please refer to the corresponding publication for details of the dataset generation.

Data accompanying the paper "Evaluation of Spatial Audio Reproduction Methods (Part 2): Analysis of Listener Preference".

Leveraging machine learning techniques in object-based media production could enable the provision of personalized media experiences to diverse audiences. To fine-tune and evaluate techniques for personalization applications, and more broadly, datasets that bridge the gap between research and production are needed. We introduce and publicly release such a dataset, themed around a UK weather forecast and shot against a blue-screen background, featuring three professional actors/presenters: one male and one female presenting in English, and one female presenting in British Sign Language. Scenes include both production- and research-oriented examples, with a range of dialogue, motions and actions. The capture setup consisted of a synchronized 4K-resolution 16-camera array, production-typical microphones plus a professional audio mix, a 16-channel microphone array with a collocated Grasshopper3 camera, and a photogrammetry array. We demonstrate applications relevant to virtual production and the creation of personalized media, including neural radiance fields, shadow casting, action/event detection, speaker source tracking and video captioning.

This is the dataset used for the accompanying paper "Automatic text clustering for audio attribute elicitation experiment responses".

Immersive audio-visual perception relies on the spatial integration of both auditory and visual information which are heterogeneous sensing modalities with different fields of reception and spatial resolution. This study investigates the perceived coherence of audiovisual object events presented either centrally or peripherally with horizontally aligned/misaligned sound. Various object events were selected to represent three acoustic feature classes. Subjective test results in a simulated virtual environment from 18 participants indicate a wider capture region in the periphery, with an outward bias favoring more lateral sounds. Centered stimulus results support previous findings for simpler scenes.

Active speaker detection (ASD) is a multi-modal task that aims to identify who, if anyone, is speaking from a set of candidates. Current audio-visual approaches to ASD typically rely on visually pre-extracted face tracks (sequences of consecutive face crops) and the respective monaural audio. However, their recall is often low because only visible faces are included in the set of candidates. Monaural audio may successfully detect the presence of speech activity but fails to localize the speaker due to the lack of spatial cues. Our solution extends the audio front-end using a microphone array. We train an audio convolutional neural network (CNN) in combination with beamforming techniques to regress the speaker's horizontal position directly in the video frames. We propose to generate weak labels using a pre-trained active speaker detector on pre-extracted face tracks. Our pipeline embraces the "student-teacher" paradigm, in which a trained "teacher" network produces pseudo-labels from the visual input and the "student", an audio network, is trained to reproduce the same results. At inference, the student network can independently localize the speaker in the visual frames directly from the audio input. Experimental results on newly collected data show that our approach significantly outperforms a variety of other baselines as well as the teacher network itself. It also performs well as a speech activity detector.
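
The beamforming front-end mentioned above can be illustrated with the textbook delay-and-sum beamformer. The Python sketch below is a generic example, not the network or array geometry used in the paper: it assumes a far-field source, a uniform linear array with hypothetical spacing, and integer-sample alignment, and the function names are illustrative. Positive delays mean that microphone m hears the wavefront later than microphone 0.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at room temperature


def ula_steering_delays(num_mics, spacing_m, azimuth_rad, c=SPEED_OF_SOUND_M_S):
    """Far-field arrival delays (seconds) of each mic relative to mic 0
    for a uniform linear array; azimuth 0 is broadside (straight ahead)."""
    return [m * spacing_m * math.sin(azimuth_rad) / c for m in range(num_mics)]


def delay_and_sum(channels, delays_samples):
    """Align each channel by advancing it by its (non-negative, integer)
    delay in samples, then average the aligned channels."""
    length = min(len(ch) - d for ch, d in zip(channels, delays_samples))
    return [
        sum(ch[n + d] for ch, d in zip(channels, delays_samples)) / len(channels)
        for n in range(length)
    ]


# An impulse that reaches the second microphone one sample later.
mic0 = [0.0, 1.0, 0.0, 0.0, 0.0]
mic1 = [0.0, 0.0, 1.0, 0.0, 0.0]
print(delay_and_sum([mic0, mic1], [0, 1]))  # [0.0, 1.0, 0.0, 0.0]
```

Scanning the azimuth and picking the direction with the highest output power gives a simple direction-of-arrival estimate, which is the kind of spatial cue that monaural audio lacks.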

IEEE VR 2020: Immersive audio-visual perception relies on the spatial integration of both auditory and visual information which are heterogeneous sensing modalities with different fields of reception and spatial resolution. This study investigates the perceived coherence of audiovisual object events presented either centrally or peripherally with horizontally aligned/misaligned sound. Various object events were selected to represent three acoustic feature classes. Subjective test results in a simulated virtual environment from 18 participants indicate a wider capture region in the periphery, with an outward bias favoring more lateral sounds. Centered stimulus results support previous findings for simpler scenes.

In the context of Audio Visual Question Answering (AVQA) tasks, the audio and visual modalities can be learnt on three levels: 1) Spatial, 2) Temporal and 3) Semantic. Existing AVQA methods suffer from two major shortcomings: the audio-visual (AV) information passing through the network is not aligned on the Spatial and Temporal levels, and intermodal (audio and visual) Semantic information is often not balanced within a context, resulting in poor performance. In this paper, we propose a novel end-to-end Contextual Multi-modal Alignment (CAD) network that addresses these challenges by i) introducing a parameter-free stochastic Contextual block that ensures robust audio and visual alignment on the Spatial level; ii) proposing a pre-training technique for dynamic audio and visual alignment on the Temporal level in a self-supervised setting; and iii) introducing a cross-attention mechanism to balance audio and visual information on the Semantic level. The proposed CAD network improves overall performance over state-of-the-art methods by 9.4% on average on the MUSIC-AVQA dataset. We also demonstrate that our proposed contributions to AVQA can be added to existing methods to improve their performance without additional complexity requirements.
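
For readers unfamiliar with cross-attention, the NumPy sketch below shows generic single-head scaled dot-product cross-attention in which audio tokens act as queries over visual tokens. It is not the CAD network's implementation: the token counts, feature width and randomly initialised projection matrices are hypothetical, and a trained model would learn the projections.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(audio, visual, w_q, w_k, w_v):
    """Single-head cross-attention: audio tokens (queries) attend over
    visual tokens (keys and values).

    audio: (n_audio, d_in), visual: (n_visual, d_in),
    w_q, w_k: (d_in, d_k), w_v: (d_in, d_v).
    """
    q = audio @ w_q
    k = visual @ w_k
    v = visual @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (n_audio, n_visual)
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
audio_tokens = rng.normal(size=(4, 8))   # hypothetical: 4 audio tokens
visual_tokens = rng.normal(size=(6, 8))  # hypothetical: 6 visual tokens
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
fused, attn = cross_attention(audio_tokens, visual_tokens, w_q, w_k, w_v)
print(fused.shape, attn.shape)  # (4, 8) (4, 6)
```

Swapping the roles (visual queries over audio keys and values) gives the other direction; using both directions is one way to let each modality inform the other.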

In this paper we present a method for single-view illumination estimation of indoor scenes, using image-based lighting, that incorporates state-of-the-art outpainting methods. Recent advances in illumination estimation have focused on improving the detail of the generated environment map so that it can realistically light mirror-reflective surfaces. These generated maps often include artefacts at the borders of the image where the panorama wraps around. In this work we make the key observation that inferring the panoramic HDR illumination of a scene from a limited field-of-view LDR input can be framed as an outpainting problem (whereby the original image must be expanded beyond its original borders). We incorporate two key techniques used in outpainting tasks: i) separating the generation into multiple networks (a diffuse lighting network and a high-frequency detail network) to reduce the amount a single network must learn, and ii) utilising an inside-out method of processing the input image to reduce the border artefacts. In addition to these outpainting methods, we introduce circular padding before the network to further help remove the border artefacts. Results show that the proposed approach relights diffuse, specular and mirror surfaces more accurately than existing methods, in terms of both the position of the light sources and pixelwise accuracy, whilst also reducing the artefacts produced at the borders of the panorama.
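
Circular padding exploits the fact that the left and right edges of an equirectangular panorama are adjacent on the sphere. The NumPy sketch below shows one plausible way to apply it, assuming an H × W × C array and a hypothetical pad width; it is not the authors' code, and the helper names are illustrative. Columns are wrapped horizontally only, since the top and bottom rows meet at the poles rather than at each other.

```python
import numpy as np


def circular_pad_panorama(pano, pad):
    """Wrap `pad` columns around the horizontal axis of an (H, W, C)
    equirectangular panorama, so the left border sees the right border's
    content and vice versa. Rows are not padded."""
    return np.pad(pano, ((0, 0), (pad, pad), (0, 0)), mode="wrap")


def crop_padding(padded, pad):
    """Remove the wrapped columns again after processing."""
    return padded[:, pad:padded.shape[1] - pad]


pano = np.arange(2 * 5 * 1).reshape(2, 5, 1)
padded = circular_pad_panorama(pano, 2)
print(padded[0, :, 0])  # [3 4 0 1 2 3 4 0 1]
```

A network applied to the padded input sees continuous content across the wrap-around seam; if it preserves spatial resolution, the extra columns can simply be cropped from its output.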

Accurate camera synchronisation is indispensable for many video processing tasks, such as surveillance and 3D modelling. Video-based synchronisation facilitates the design and setup of networks with moving cameras or devices without an external synchronisation capability, such as low-cost web cameras or Kinects. In this paper, we present an algorithm that can work with such heterogeneous networks. The algorithm first finds the corresponding frame indices between each camera pair with the help of image feature correspondences and epipolar geometry. Then, for each pair, a relative frame rate and offset are computed by fitting a 2D line to the index correspondences. These pairwise relations define a graph in which each spanning cycle constitutes an absolute synchronisation hypothesis. The optimal solution is found by an exhaustive search over the spanning cycles. Experiments demonstrate that the algorithm yields highly accurate estimates in a number of scenarios involving static and moving cameras, and Kinects.
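
The per-pair step, fitting a line j = αi + β to the frame-index correspondences between two cameras, can be sketched with ordinary least squares in Python, where α is the relative frame rate and β the offset in frames. This is a minimal illustration assuming outlier-free correspondences; the abstract does not state the exact fitting procedure, and with real feature matches a robust estimator (for example RANSAC) would likely be needed. The function name is hypothetical.

```python
def fit_sync_line(index_pairs):
    """Least-squares fit of j = alpha * i + beta to (i, j) frame-index pairs,
    where frame i of camera A corresponds to frame j of camera B.

    alpha is the relative frame rate and beta is the offset, both in frames.
    """
    n = len(index_pairs)
    if n < 2:
        raise ValueError("need at least two correspondences")
    mean_i = sum(i for i, _ in index_pairs) / n
    mean_j = sum(j for _, j in index_pairs) / n
    s_ii = sum((i - mean_i) ** 2 for i, _ in index_pairs)
    s_ij = sum((i - mean_i) * (j - mean_j) for i, j in index_pairs)
    if s_ii == 0:
        raise ValueError("frame indices of camera A must not all be equal")
    alpha = s_ij / s_ii
    beta = mean_j - alpha * mean_i
    return alpha, beta


# Camera B runs at half the frame rate of camera A, and frame 0 of A
# corresponds to frame 10 of B.
print(fit_sync_line([(0, 10), (2, 11), (4, 12), (6, 13)]))  # (0.5, 10.0)
```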

Digital content production traditionally requires highly skilled artists and animators to first manually craft shape and appearance models and then instill the models with a believable performance. Motion capture technology is increasingly used to record the articulated motion of a real human performance to increase the visual realism of animation. However, motion capture is limited to recording only the skeletal motion of the human body and requires specialist suits and markers to track articulated motion. In this paper we present surface capture, a fully automated system that captures shape and appearance as well as motion from multiple video cameras, as a basis for creating highly realistic animated content from an actor’s performance in full wardrobe. We address wide-baseline scene reconstruction to provide 360-degree appearance from just 8 camera views and introduce an efficient scene representation for level-of-detail control in streaming and rendering. Finally, we demonstrate interactive animation control in a computer games scenario using a captured library of human animation, achieving a frame rate of 300 fps on consumer-level graphics hardware.

This paper presents a 3D human pose estimation system that uses a stereo pair of 360° sensors to capture the complete scene from a single location. The approach combines the advantages of omnidirectional capture, the accuracy of multiple-view 3D pose estimation and the portability of monocular acquisition. Joint monocular belief maps for joint locations are estimated from 360° images and are used to fit a 3D skeleton to each frame. Temporal data association and smoothing are performed to produce accurate 3D pose estimates throughout the sequence. We evaluate our system on the Panoptic Studio dataset, as well as on real 360° video for tracking multiple people, demonstrating an average Mean Per Joint Position Error (MPJPE) of 12.47 cm with a 30 cm camera baseline. We also demonstrate improved capabilities over perspective and 360° multi-view systems when presented with limited camera views of the subject.
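
For reference, the Mean Per Joint Position Error quoted above is the average Euclidean distance between estimated and ground-truth 3D joint positions. A minimal Python version for a single frame is shown below; it assumes both skeletons use the same joint ordering and units (for example centimetres) and applies no root alignment, which some evaluation protocols add.

```python
import math


def mpjpe(predicted, ground_truth):
    """Mean Euclidean distance between corresponding 3D joints (same units as input)."""
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("joint lists must be non-empty and of equal length")
    return sum(math.dist(p, g) for p, g in zip(predicted, ground_truth)) / len(predicted)


print(mpjpe([(0, 0, 0), (3, 4, 0)], [(0, 0, 0), (0, 0, 0)]))  # 2.5
```

Averaging the per-frame value over all frames of a sequence gives a sequence-level figure.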

This paper introduces Deep4D, a compact generative representation of shape and appearance from captured 4D volumetric video sequences of people. 4D volumetric video achieves highly realistic reproduction, replay and free-viewpoint rendering of actor performance from multiple-view video acquisition systems. A deep generative network is trained on 4D video sequences of an actor performing multiple motions to learn a generative model of the dynamic shape and appearance. We demonstrate that the proposed generative model can provide a compact encoded representation capable of high-quality synthesis of 4D volumetric video with two orders of magnitude compression. A variational encoder-decoder network is employed to learn an encoded latent space that maps from 3D skeletal pose to 4D shape and appearance. This enables high-quality 4D volumetric video synthesis to be driven by skeletal motion, including skeletal motion capture data. This encoded latent space supports the representation of multiple sequences, with dynamic interpolation to transition between motions. We therefore introduce Deep4D motion graphs, a direct application of the proposed generative representation. Deep4D motion graphs allow real-time interactive character animation whilst preserving the plausible realism of movement and appearance from the captured volumetric video. Deep4D motion graphs implicitly combine multiple captured motions in a unified representation for character animation from volumetric video, allowing novel character movements to be generated with dynamic shape and appearance detail.

This paper presents a method to combine unreliable player tracks from multiple cameras to obtain a unique track for each player. A recursive graph optimisation algorithm is introduced to evaluate the best association between player tracks.

The surface normals of a 3D human model, generated from multiple-viewpoint capture, are refined using an iterative variant of the shape-from-shading technique to recover fine details of clothing, enabling relighting of the model according to a new virtual environment. The method requires the images to consist of a number of uniformly coloured surface patches.

This paper presents the first dynamic 3D FACS data set for facial expression research, containing 10 subjects performing between 19 and 97 different AUs, both individually and in combination. In total the corpus contains 519 AU sequences. The peak expression frame of each sequence has been manually FACS coded by certified FACS experts. This provides a ground truth for 3D FACS-based AU recognition systems. In order to use this data, we describe the first framework for building dynamic 3D morphable models. This includes a novel Active Appearance Model (AAM) based 3D facial registration and mesh correspondence scheme. The approach overcomes limitations in existing methods that require facial markers or are prone to optical flow drift. We provide the first quantitative assessment of such 3D facial mesh registration techniques and show how our proposed method provides more reliable correspondence.

Light fields are becoming an increasingly popular method of digital content production for visual effects and virtual/augmented reality, as they capture a view-dependent representation that enables photorealistic rendering over a range of viewpoints. Light field video is generally captured using camera arrays, resulting in tens to hundreds of images of a scene at each time instant. An open problem is how to represent the data efficiently, preserving the view-dependent detail of the surface in a form that is compact to store and efficient to render. In this paper we show that constructing an eigen-texture basis representation from the light field, using an approximate 3D surface reconstruction as a geometric proxy, provides a compact representation that maintains view-dependent realism. We demonstrate that the proposed method reduces storage requirements by more than 95% while maintaining the visual quality of the captured data. An efficient view-dependent rendering technique is also proposed, which is performed in eigenspace and allows smooth, continuous viewpoint interpolation through the light field.
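
The core of an eigen-texture representation is principal component analysis over the textures sampled from each view. The NumPy sketch below assumes the per-view textures have already been resampled onto a common parameterisation of the geometric proxy and flattened into the rows of a matrix; the sizes, the number of retained components k and the helper names are hypothetical, and the paper's basis construction and rendering may differ.

```python
import numpy as np


def eigen_texture_basis(textures, k):
    """PCA of per-view textures.

    textures: (n_views, n_texels) array, one flattened texture per camera.
    Returns the mean texture, the top-k eigen-textures (k, n_texels) and
    the per-view coefficients (n_views, k).
    """
    mean = textures.mean(axis=0)
    centered = textures - mean
    # Rows of vt are orthonormal principal directions of the centred data.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    basis = vt[:k]
    coeffs = centered @ basis.T
    return mean, basis, coeffs


def reconstruct_textures(mean, basis, coeffs):
    """Approximate every view's texture from k coefficients per view."""
    return mean + coeffs @ basis


rng = np.random.default_rng(1)
# Hypothetical data: 10 views of 50 texels that vary along 2 directions.
textures = rng.normal(size=50) + rng.normal(size=(10, 2)) @ rng.normal(size=(2, 50))
mean, basis, coeffs = eigen_texture_basis(textures, k=2)
stored = mean.size + basis.size + coeffs.size   # 50 + 100 + 20 = 170 values
print(stored, textures.size)                    # 170 500
```

Storage then scales with the number of retained components rather than with the number of views, and interpolating the low-dimensional coefficients is one way to move smoothly between viewpoints.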

In this paper we present an automatic key frame selection method to summarise 3D video sequences. Key-frame selection is based on optimisation for the set of frames which give the best representation of the sequence according to a rate-distortion trade-off. Distortion of the summarization from the original sequence is based on measurement of self-similarity using volume histograms. The method evaluates the globally optimal set of key-frames to represent the entire sequence without requiring pre-segmentation of the sequence into shots or temporal correspondence. Results demonstrate that for 3D video sequences of people wearing a variety of clothing the summarization automatically selects a set of key-frames which represent the dynamics. Comparative evaluation of rate-distortion characteristics with previous 3D video summarization demonstrates improved performance.

The Facial Action Coding System (FACS) [Ekman et al. 2002] has become a popular reference for creating fully controllable facial models that allow the manipulation of single actions or so-called Action Units (AUs). For example, realistic 3D models based on FACS have been used for investigating the perceptual effects of moving faces, and for character expression mapping in recent movies. However, since none of the facial actions (AUs) in these models are validated by FACS experts, it is unclear how valid the models would be in situations where the accurate production of an AU is essential [Krumhuber and Tamarit 2010]. Moreover, previous work has employed motion capture data representing only sparse 3D facial positions, which do not include dense surface deformation detail.

We propose an environment modelling method using high-resolution spherical stereo colour imaging. We capture indoor or outdoor scenes by line scanning with a rotating spherical camera and recover depth information from a stereo image pair using correspondence matching and spherical cross-slits stereo geometry. The existing single spherical imaging technique is extended to stereo geometry, and a hierarchical PDE-based sub-pixel disparity estimation method for large images is proposed. The estimated floating-point disparity fields are used to generate an accurate and smooth depth field. Finally, the 3D environments are reconstructed from the depth field as triangular meshes. Through experiments, we evaluate the accuracy of reconstruction against ground truth and analyse the behaviour of errors for spherical stereo imaging.

Recent progress in Virtual Reality (VR) and Augmented Reality (AR) allows us to experience various VR/AR applications in daily life. To maximise user immersion in VR/AR environments, plausible spatial audio reproduction synchronised with visual information is essential. In this paper, we propose a simple and efficient system to estimate room acoustics for plausible reproduction of spatial audio using 360° cameras for VR/AR applications. A pair of 360° images is used for room geometry and acoustic property estimation. A simplified 3D geometric model of the scene is estimated by depth estimation from the captured images and semantic labelling using a convolutional neural network (CNN). The real environment acoustics are characterised by frequency-dependent acoustic predictions of the scene. Spatially synchronised audio is reproduced based on the estimated geometric and acoustic properties of the scene. The reconstructed scenes are rendered with synthesised spatial audio as VR/AR content. The estimated room geometry and simulated spatial audio are evaluated against actual measurements and against audio calculated from ground-truth Room Impulse Responses (RIRs) recorded in the rooms.
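
As a simple example of a frequency-dependent acoustic prediction from estimated geometry and materials, the Python sketch below applies Sabine's reverberation-time formula, RT60 ≈ 0.161 V / Σ Sᵢαᵢ (V in m³, surface areas Sᵢ in m², absorption coefficients αᵢ), separately per octave band. The mapping from semantic labels to absorption coefficients, the room and all numeric values are hypothetical, and the paper's acoustic model is not necessarily Sabine-based.

```python
def sabine_rt60(volume_m3, surfaces):
    """Sabine reverberation time (seconds) for one frequency band.

    surfaces: iterable of (area_m2, absorption_coefficient) pairs.
    """
    absorption_area = sum(area * alpha for area, alpha in surfaces)
    if absorption_area <= 0:
        raise ValueError("total absorption must be positive")
    return 0.161 * volume_m3 / absorption_area


# Hypothetical absorption coefficients per octave band (Hz) for labelled surfaces.
ABSORPTION = {
    "carpet": {250: 0.05, 1000: 0.30},
    "plaster": {250: 0.10, 1000: 0.05},
}

# A 5 m x 4 m x 3 m room: carpeted floor (20 m^2), plastered walls and ceiling (74 m^2).
room_volume = 5 * 4 * 3
labelled_surfaces = [("carpet", 20.0), ("plaster", 54.0 + 20.0)]
for band in (250, 1000):
    surfaces = [(area, ABSORPTION[label][band]) for label, area in labelled_surfaces]
    print(band, round(sabine_rt60(room_volume, surfaces), 2))
```

Keeping one set of coefficients per band is what makes the prediction frequency-dependent; here the carpet absorbs much more at 1 kHz than at 250 Hz, so the predicted reverberation time is shorter in the higher band.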

We propose a framework for 2D/3D multi-modal data registration and evaluate 3D feature descriptors for registration of 3D datasets from different sources. 3D datasets of outdoor environments can be acquired using a variety of active and passive sensor technologies, including laser scanning and video cameras. Registration of these datasets into a common coordinate frame is required for subsequent modelling and visualisation. 2D images are converted into 3D structure by stereo or multi-view reconstruction techniques and registered to a unified 3D domain with other datasets in a 3D world. Multi-modal datasets have different density, noise and types of errors in geometry. This paper provides a performance benchmark for existing 3D feature descriptors across multi-modal datasets. Performance is evaluated for the registration of datasets obtained from high-resolution laser scanning with reconstructions obtained from images and video. This analysis highlights the limitations of existing 3D feature detectors and descriptors that need to be addressed for robust multi-modal data registration. We analyse and discuss the performance of existing methods in registering various types of datasets and then identify future directions required to achieve robust multi-modal 3D data registration.
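
Once 3D feature descriptors have produced putative matches between two datasets, the rigid transform into a common frame is commonly estimated in closed form. The NumPy sketch below implements the standard Kabsch (SVD) solution for matched point pairs. It is a generic building block rather than the paper's evaluation pipeline, and it assumes outlier-free correspondences at equal scale; multi-modal data would normally also need RANSAC and, for image-based reconstructions, a scale estimate. The function name and test data are hypothetical.

```python
import numpy as np


def rigid_align(src, dst):
    """Least-squares rotation R and translation t such that
    dst[i] ≈ R @ src[i] + t, for matched (N, 3) point arrays (Kabsch)."""
    src_mean = src.mean(axis=0)
    dst_mean = dst.mean(axis=0)
    h = (src - src_mean).T @ (dst - dst_mean)    # 3x3 cross-covariance
    u, _, vt = np.linalg.svd(h)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    d = np.sign(np.linalg.det(vt.T @ u.T))
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = dst_mean - r @ src_mean
    return r, t


rng = np.random.default_rng(2)
src = rng.normal(size=(20, 3))
angle = np.deg2rad(30.0)
true_r = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
true_t = np.array([1.0, -2.0, 0.5])
dst = src @ true_r.T + true_t
r, t = rigid_align(src, dst)
```

The determinant check matters in practice: without it, noisy or nearly planar matches can yield a reflection instead of a proper rotation.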

We present a novel hybrid representation for character animation from 4D Performance Capture (4DPC) data which combines skeletal control with surface motion graphs. 4DPC data are temporally aligned 3D mesh sequence reconstructions of the dynamic surface shape and associated appearance from multiple view video. The hybrid representation supports the production of novel surface sequences which satisfy constraints from user specified key-frames or a target skeletal motion. Motion graph path optimisation concatenates fragments of 4DPC data to satisfy the constraints whilst maintaining plausible surface motion at transitions between sequences. Spacetime editing of the mesh sequence using a learnt part-based Laplacian surface deformation model is performed to match the target skeletal motion and transition between sequences. The approach is quantitatively evaluated for three 4DPC datasets with a variety of clothing styles. Results for key-frame animation demonstrate production of novel sequences which satisfy constraints on timing and position of less than 1% of the sequence duration and path length. Evaluation of motion capture driven animation over a corpus of 130 sequences shows that the synthesised motion accurately matches the target skeletal motion. The combination of skeletal control with the surface motion graph extends the range and style of motion which can be produced whilst maintaining the natural dynamics of shape and appearance from the captured performance.

Object-based audio is an emerging representation for audio content, where content is represented in a reproduction-format-agnostic way and thus produced once for consumption on many different kinds of devices. This affords new opportunities for immersive, personalized, and interactive listening experiences. This article introduces an end-to-end object-based spatial audio pipeline, from sound recording to listening. A high-level system architecture is proposed, which includes novel audiovisual interfaces to support object-based capture and listener-tracked rendering, and incorporates a proposed component for objectification, i.e., recording content directly into an object-based form. Text-based and extensible metadata enable communication between the system components. An open architecture for object rendering is also proposed. The system’s capabilities are evaluated in two parts. First, listener-tracked reproduction of metadata automatically estimated from two moving talkers is evaluated using an objective binaural localization model. Second, object-based scene capture with audio extracted using blind source separation (to remix between two talkers) and beamforming (to remix a recording of a jazz group), is evaluated with perceptually-motivated objective and subjective experiments. These experiments demonstrate that the novel components of the system add capabilities beyond the state of the art. Finally, we discuss challenges and future perspectives for object-based audio workflows.

We consider the problem of geometric integration and representation of multiple views of non-rigidly deforming 3D surface geometry captured at video rate. Instead of treating each frame as a separate mesh, we present a representation that takes into consideration temporal and spatial coherence in the data where possible. We first segment gross base transformations using correspondence based on a closest-point metric and represent these motions as piecewise rigid transformations. The remaining residual is encoded as displacement maps at each frame, giving a displacement video. At both these stages, occlusions and missing data are interpolated to give a representation that is continuous in space and time. We demonstrate the integration of multiple views for four different non-rigidly deforming scenes: hand, face, cloth and a composite scene. The approach achieves the integration of multiple-view data at different times into one representation that can be processed and edited.

This paper proposes a novel Attention-based Multi-Reference Super-resolution network (AMRSR) that, given a low-resolution image, learns to adaptively transfer the most similar texture from multiple reference images to the super-resolution output whilst maintaining spatial coherence. The use of multiple reference images together with attention-based sampling is demonstrated to achieve significantly improved performance over state-of-the-art reference super-resolution approaches on multiple benchmark datasets. Reference super-resolution approaches have recently been proposed to overcome the ill-posed problem of image super-resolution by providing additional information from a high-resolution reference image. Multi-reference super-resolution extends this approach by providing a more diverse pool of image features to overcome the inherent information deficit whilst maintaining memory efficiency. A novel hierarchical attention-based sampling approach is introduced to learn the similarity between low-resolution image features and multiple reference images based on a perceptual loss. Ablation demonstrates the contribution of both multi-reference and hierarchical attention-based sampling to overall performance. Perceptual and quantitative ground-truth evaluation demonstrates significant improvement in performance even when the reference images deviate significantly from the target image.

Simultaneous semantically coherent object-based long-term 4D scene flow estimation, co-segmentation and reconstruction is proposed exploiting the coherence in semantic class labels both spatially, between views at a single time instant, and temporally, between widely spaced time instants of dynamic objects with similar shape and appearance. In this paper we propose a framework for spatially and temporally coherent semantic 4D scene flow of general dynamic scenes from multiple view videos captured with a network of static or moving cameras. Semantic coherence results in improved 4D scene flow estimation, segmentation and reconstruction for complex dynamic scenes. Semantic tracklets are introduced to robustly initialize the scene flow in the joint estimation and enforce temporal coherence in 4D flow, semantic labelling and reconstruction between widely spaced instances of dynamic objects. Tracklets of dynamic objects enable unsupervised learning of long-term flow, appearance and shape priors that are exploited in semantically coherent 4D scene flow estimation, co-segmentation and reconstruction. Comprehensive performance evaluation against state-of-the-art techniques on challenging indoor and outdoor sequences with hand-held moving cameras shows improved accuracy in 4D scene flow, segmentation, temporally coherent semantic labelling, and reconstruction of dynamic scenes.

In this paper we propose a framework for spatially and temporally coherent semantic co-segmentation and reconstruction of complex dynamic scenes from multiple static or moving cameras. Semantic co-segmentation exploits the coherence in semantic class labels both spatially, between views at a single time instant, and temporally, between widely spaced time instants of dynamic objects with similar shape and appearance. We demonstrate that semantic coherence results in improved segmentation and reconstruction for complex scenes. A joint formulation is proposed for semantically coherent object-based co-segmentation and reconstruction of scenes by enforcing consistent semantic labelling between views and over time. Semantic tracklets are introduced to enforce temporal coherence in semantic labelling and reconstruction between widely spaced instances of dynamic objects. Tracklets of dynamic objects enable unsupervised learning of appearance and shape priors that are exploited in joint segmentation and reconstruction. Evaluation on challenging indoor and outdoor sequences with hand-held moving cameras shows improved accuracy in segmentation, temporally coherent semantic labelling and 3D reconstruction of dynamic scenes.

Correct labelling of multiple people from different viewpoints in complex scenes is a challenging task due to occlusions, visual ambiguities, and variations in appearance and illumination. In recent years, deep learning approaches have proved very successful at improving the performance of a wide range of recognition and labelling tasks such as person re-identification and video tracking. However, to date, applications to multi-view tasks have proved more challenging due to the lack of suitably labelled multi-view datasets, which are difficult to collect and annotate. The contributions of this paper are two-fold. First, a synthetic dataset is generated by combining 3D human models and panoramas with human poses and appearance detail rendering, to overcome the shortage of real datasets for multi-view labelling. Second, a novel framework named Multi-View Labelling network (MVL-net) is introduced to leverage the new dataset and unify the multi-view multiple people detection, segmentation and labelling tasks in complex scenes. To the best of our knowledge, this is the first work using deep learning to train a multi-view labelling network. Experiments conducted on both synthetic and real datasets demonstrate that the proposed method outperforms the existing state-of-the-art approaches.

We propose an approach to accurately estimate 3D human pose by fusing multi-viewpoint video (MVV) with inertial measurement unit (IMU) sensor data, without optical markers, a complex hardware setup or a full body model. Uniquely, we use a multi-channel 3D convolutional neural network to learn a pose embedding from visual occupancy and semantic 2D pose estimates from the MVV in a discretised volumetric probabilistic visual hull (PVH). The learnt pose stream is processed concurrently with a forward kinematic solve of the IMU data, and a temporal model (LSTM) exploits the rich spatial and temporal long-range dependencies among the solved joints; the two streams are then fused in a final fully connected layer. The two complementary data sources allow ambiguities to be resolved within each sensor modality, yielding improved accuracy over prior methods. Extensive evaluation is performed, with state-of-the-art performance reported on the popular Human 3.6M dataset [26], the newly released TotalCapture dataset and a challenging set of outdoor videos, TotalCaptureOutdoor. We release the new hybrid MVV dataset (TotalCapture), comprising multi-viewpoint video, IMU data and accurate 3D skeletal joint ground truth derived from a commercial motion capture system. The dataset is available online at http://cvssp.org/data/totalcapture/.
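
The forward kinematic solve mentioned above propagates bone orientations down the skeleton to obtain joint positions. A minimal NumPy sketch is given below. It assumes each joint's rotation relative to its parent is already known (for instance derived from IMU orientations), a fixed bone offset per joint, and joints ordered so that parents precede children. The skeleton, offsets, rotations and function names are hypothetical, and the paper's solver may differ.

```python
import numpy as np


def rot_z(theta):
    """Rotation matrix about the z axis by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def forward_kinematics(parents, offsets, local_rots):
    """parents[j]: index of joint j's parent (-1 for the root);
    offsets[j]: bone vector from the parent to joint j in the parent's frame
    (for the root, its world position); local_rots[j]: joint j's 3x3 rotation
    relative to its parent. Returns world positions and world rotations."""
    n = len(parents)
    world_rots = [None] * n
    positions = np.zeros((n, 3))
    for j in range(n):
        p = parents[j]
        if p < 0:
            world_rots[j] = local_rots[j]
            positions[j] = offsets[j]
        else:
            world_rots[j] = world_rots[p] @ local_rots[j]
            positions[j] = positions[p] + world_rots[p] @ offsets[j]
    return positions, world_rots


# Hypothetical three-joint chain along x, with the root rotated 90° about z.
parents = [-1, 0, 1]
offsets = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
local_rots = [rot_z(np.pi / 2), np.eye(3), np.eye(3)]
positions, _ = forward_kinematics(parents, offsets, local_rots)
print(np.round(positions, 6))
```

Rotating the root swings the whole chain from the x axis onto the y axis, which is how a single orientation change propagates to every descendant joint.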

The probability hypothesis density (PHD) filter based on sequential Monte Carlo (SMC) approximation (also known as the SMC-PHD filter) has proven to be a promising algorithm for multi-speaker tracking. However, it has a heavy computational cost, as surviving, spawned and born particles need to be distributed in each frame to model the state of the speakers and to jointly estimate the variable number of speakers and their states. In particular, most of the computational cost is caused by the born particles, as they need to be propagated over the entire image in every frame to detect the presence of new speakers in the view of the visual tracker. In this paper, we propose to use audio data to improve the visual SMC-PHD (V-SMC-PHD) filter by using the direction of arrival (DOA) angles of the audio sources to determine when to propagate the born particles and to re-allocate the surviving and spawned particles. The tracking accuracy of the resulting AV-SMC-PHD algorithm is further improved by a modified mean-shift algorithm that iteratively searches and climbs density gradients to find the peak of the probability distribution, and the extra computational complexity introduced by mean-shift is controlled with a sparse sampling technique. These improved algorithms, named AVMS-SMC-PHD and sparse-AVMS-SMC-PHD respectively, are compared systematically with AV-SMC-PHD and V-SMC-PHD on the AV16.3, AMI and CLEAR datasets.
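The mean-shift step can be sketched as a weighted mean-shift over the particle set: starting near a particle cluster, the estimate repeatedly moves to the kernel-weighted mean of nearby particles until it settles on a local peak of the density. This is a generic Gaussian-kernel mean-shift, assuming 2D image-plane particle states with PHD weights; the paper's modified variant and its DOA-driven particle allocation are not reproduced. The `stride` parameter is a hypothetical stand-in for sparse sampling, evaluating the kernel on every `stride`-th particle only.

```python
import numpy as np

def weighted_mean_shift(particles, weights, start, bandwidth=1.0,
                        stride=1, max_iter=100, tol=1e-6):
    """Climb to a local mode of a weighted particle density (Gaussian kernel)."""
    # Sparse sampling (assumption): keep only every `stride`-th particle.
    pts = np.asarray(particles, dtype=float)[::stride]
    w = np.asarray(weights, dtype=float)[::stride]
    x = np.asarray(start, dtype=float)
    for _ in range(max_iter):
        # Kernel weights scaled by each particle's own weight.
        k = w * np.exp(-0.5 * np.sum((pts - x) ** 2, axis=1) / bandwidth ** 2)
        if k.sum() == 0.0:
            break  # start point is too far from every particle
        x_new = k @ pts / k.sum()  # kernel-weighted mean = one mean-shift step
        if np.linalg.norm(x_new - x) < tol:
            return x_new
        x = x_new
    return x
```

The bandwidth controls the trade-off: a small kernel locks onto the nearest peak, while a larger one smooths over noisy particle clouds at the cost of merging nearby speakers.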

Surface motion capture (SurfCap) of actor performance from multiple view video provides reconstruction of the natural nonrigid deformation of skin and clothing. This paper introduces techniques for interactive animation control of SurfCap sequences which provide the flexibility in editing and interactive manipulation associated with existing tools for animation from skeletal motion capture (MoCap). Laplacian mesh editing is extended using a basis model learned from SurfCap sequences to constrain the surface shape to reproduce natural deformation. Three novel approaches for animation control of SurfCap sequences, which exploit the constrained Laplacian mesh editing, are introduced: 1) space-time editing for interactive sequence manipulation; 2) skeleton-driven animation to achieve natural nonrigid surface deformation; and 3) hybrid combination of skeletal MoCap-driven and SurfCap sequences to extend the range of movement. These approaches are combined with high-level parametric control of SurfCap sequences in a hybrid surface and skeleton-driven animation control framework to achieve natural surface deformation with an extended range of movement by exploiting existing MoCap archives. Evaluation of each approach and of the integrated animation framework is presented on real SurfCap sequences of actors performing multiple motions in a variety of clothing styles. Results demonstrate that these techniques enable flexible control for interactive animation with the natural nonrigid surface dynamics of the captured performance, and provide a powerful tool to extend current SurfCap databases by incorporating new motions from MoCap sequences.
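For reference, plain Laplacian mesh editing can be posed as a least-squares problem: preserve each vertex's differential (Laplacian) coordinates from the rest shape while softly pulling a few handle vertices to target positions. This minimal sketch uses a dense uniform Laplacian and a hypothetical soft-constraint `weight`; it omits the SurfCap-learned basis that the paper uses to constrain the deformation, and it assumes every vertex belongs to at least one face.

```python
import numpy as np

def uniform_laplacian(n_vertices, faces):
    """Uniform (umbrella) Laplacian L = I - D^-1 A built from triangle faces."""
    A = np.zeros((n_vertices, n_vertices))
    for a, b, c in faces:
        for i, j in ((a, b), (b, c), (c, a)):
            A[i, j] = A[j, i] = 1.0
    deg = A.sum(axis=1)  # assumes no isolated vertices (deg > 0)
    return np.eye(n_vertices) - A / deg[:, None]

def laplacian_edit(vertices, faces, handle_ids, handle_positions, weight=10.0):
    """Solve min ||L V' - L V||^2 + weight^2 ||V'[handles] - targets||^2."""
    V = np.asarray(vertices, dtype=float)
    L = uniform_laplacian(len(V), faces)
    delta = L @ V  # differential coordinates of the rest shape
    C = np.zeros((len(handle_ids), len(V)))
    C[np.arange(len(handle_ids)), handle_ids] = weight  # soft positional rows
    A = np.vstack([L, C])
    b = np.vstack([delta, weight * np.asarray(handle_positions, dtype=float)])
    new_V, *_ = np.linalg.lstsq(A, b, rcond=None)
    return new_V
```

Because the rows of the uniform Laplacian sum to zero, a rigid translation of all handles reproduces the translated mesh exactly; rotations and larger edits distort detail, which is the kind of unnatural deformation a learned shape basis is meant to suppress.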

Existing systems for 3D reconstruction from multiple view video use controlled indoor environments with uniform illumination and backgrounds to allow accurate segmentation of dynamic foreground objects. In this paper we present a portable system for 3D reconstruction of dynamic outdoor scenes, which require relatively large capture volumes with complex backgrounds and non-uniform illumination. This is motivated by the demand for 3D reconstruction of natural outdoor scenes to support film and broadcast production. Limitations of existing multiple view 3D reconstruction techniques for use in outdoor scenes are identified. Outdoor 3D scene reconstruction is performed in three stages: (1) 3D background scene modelling using spherical stereo image capture; (2) multiple view segmentation of dynamic foreground objects by simultaneous video matting across multiple views; and (3) robust 3D foreground reconstruction and multiple view segmentation refinement in the presence of segmentation and calibration errors. Evaluation is performed on several outdoor productions with complex dynamic scenes including people and animals. Results demonstrate that the proposed approach overcomes limitations of previous indoor multiple view reconstruction approaches, enabling high-quality free-viewpoint rendering and 3D reference models for production.

Generating grammatically and semantically correct captions in video captioning is a challenging task. Captions generated by existing methods are either produced word by word without aligning with grammatical structure, or miss key information from the input videos. To address these issues, we introduce a novel global-local fusion network, with a Global-Local Fusion Block (GLFB) that encodes and fuses features from different parts of speech (POS) components with visual-spatial features. We use novel combinations of different POS components, 'determinant + subject', 'auxiliary verb', 'verb' and 'determinant + object', for supervision of the POS blocks Det + Subject, Aux Verb, Verb and Det + Object respectively. The novel global-local fusion network, together with the POS blocks, helps align the visual features with the language description to generate grammatically and semantically correct captions. Extensive qualitative and quantitative experiments on the benchmark MSVD and MSRVTT datasets demonstrate that the proposed approach generates more grammatically and semantically correct captions than existing methods, achieving new state-of-the-art results. Ablations on the POS blocks and the GLFB demonstrate the impact of these contributions on the proposed method.

Research on 3D video processing has gained tremendous momentum due to advances in video communications, broadcasting and entertainment technology (e.g., animation blockbusters such as Avatar and Up). There is an increasing need for reliable technologies capable of visualizing 3D content from viewpoints chosen by the user; the 2010 football World Cup in South Africa made very evident the need to replay crucial football footage from new viewpoints to decide whether the ball has or has not crossed the goal line. Remote videoconferencing prototypes are introducing a sense of presence into large- and small-scale (PC-based) systems alike by manipulating single and multiple video sequences to improve eye contact and place participants in convincing virtual spaces. All this, and more, is driving the introduction of 3D services and the development of high-quality 3D displays, which are set to become available in the near future.

Conventional view-dependent texture mapping techniques produce composite images by blending subsets of input images, weighted according to their relative influence at the rendering viewpoint, over regions where the views overlap. Geometric or camera calibration errors often result in a loss of detail due to blurring or double-exposure artefacts, which tend to be exacerbated as the number of blended views increases. We propose a novel view-dependent rendering technique which optimises the blend region dynamically at rendering time, reducing the adverse effects of camera calibration or geometric errors. The technique has been successfully integrated in a rendering pipeline which operates at interactive frame rates. Improvements over state-of-the-art view-dependent texture mapping techniques are illustrated on a synthetic scene as well as on real imagery of a large-scale outdoor scene with large camera calibration and geometric errors.
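A conventional baseline of the kind described above can be sketched as follows: select the k input cameras whose viewing directions are closest in angle to the rendering direction, weight them by inverse angular distance, and blend their colours. The weighting rule, the default k and the function names are assumptions for illustration; the paper's dynamic blend-region optimisation is not shown.

```python
import numpy as np

def blend_weights(view_dir, camera_dirs, k=3, eps=1e-6):
    """Inverse-angle weights for the k cameras closest to the rendering direction.

    Cameras outside the k nearest get weight 0; weights sum to 1.
    """
    v = np.asarray(view_dir, dtype=float)
    v = v / np.linalg.norm(v)
    cams = np.asarray(camera_dirs, dtype=float)
    cams = cams / np.linalg.norm(cams, axis=1, keepdims=True)
    angles = np.arccos(np.clip(cams @ v, -1.0, 1.0))  # angular distance per camera
    nearest = np.argsort(angles)[:k]
    w = np.zeros(len(cams))
    w[nearest] = 1.0 / (angles[nearest] + eps)  # eps avoids division by zero
    return w / w.sum()

def blend_colours(colours, weights):
    """Weighted sum of per-camera colour samples, colours shape (N, 3)."""
    return np.tensordot(weights, np.asarray(colours, dtype=float), axes=1)
```

With every extra view in the blend, small misregistrations between projected textures are averaged in, which is why increasing k tends to trade view coverage for sharpness when geometry or calibration is imperfect.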

Multi-view stereo remains a popular choice when recovering 3D geometry, despite performance varying dramatically according to the scene content. Moreover, typical pinhole camera assumptions fail in the presence of the shallow depth of field inherent to macro-scale scenes, limiting application to larger scenes with diffuse reflectance. However, defocus blur can itself be considered a useful reconstruction cue, particularly in the presence of view-dependent materials. With this in mind, we explore the complementary nature of stereo and defocus cues in the context of multi-view 3D reconstruction, and propose a complete pipeline for scene modelling from a finite aperture camera that encompasses image formation, camera calibration and reconstruction stages. As part of our evaluation, an ablation study reveals how each cue contributes to the higher performance observed over a range of complex materials and geometries. Though of lesser concern with large apertures, the effects of image noise are also considered. By introducing pre-trained deep feature extraction into our cost function, we show a step improvement over per-pixel comparisons, and verify the cross-domain applicability of networks trained on largely in-focus data when applied to defocused images. Finally, we compare to a number of modern multi-view stereo methods, and demonstrate how the use of both cues leads to a significant increase in performance across several synthetic and real datasets.
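The defocus cue rests on how blur grows with distance from the focal plane. Under the standard thin-lens model (an assumption here; the paper's own image formation model may include further terms), the blur-circle diameter for an object at distance S2, with the lens focused at S1, focal length f and f-number N, is c = (f / N) · |S2 - S1| / S2 · f / (S1 - f). A small helper makes the arithmetic concrete:

```python
def blur_circle_diameter(focal_length, f_number, focus_distance, object_distance):
    """Thin-lens circle-of-confusion diameter, in the same units as the inputs."""
    if focus_distance <= focal_length:
        raise ValueError("focus distance must exceed the focal length")
    aperture = focal_length / f_number  # entrance pupil diameter f / N
    return (aperture * abs(object_distance - focus_distance) / object_distance
            * focal_length / (focus_distance - focal_length))
```

For a 50 mm lens at f/2.8 focused at 0.30 m, an object at 0.35 m gives c ≈ 5.1e-4 m, roughly half a millimetre on the sensor, so only a few centimetres of depth change at macro range already produce blur spanning many pixels. Inverting this relation is what lets blur act as a depth cue.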

In computer vision, matting is the process of accurately estimating the foreground in images and videos. In this paper we present a novel patch-based approach to video matting that relies on non-parametric statistics to represent variations in image appearance. This overcomes a limitation of parametric algorithms, which rely only on strong colour correlation between nearby pixels. First, we construct a clean background by exploiting the foreground object's movement across the background. For a given frame, a trimap is then constructed from the background and the previous frame's trimap. A patch-based approach is used to estimate the foreground colour for every unknown pixel, and finally the alpha matte is extracted. Quantitative evaluation shows that the technique outperforms current state-of-the-art parametric approaches in terms of both accuracy and the required user interaction.
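Once the foreground colour F of an unknown pixel has been estimated and the clean background B is known, alpha follows from the compositing equation I = αF + (1 - α)B. A common closed-form choice, assumed here rather than taken from the paper, is the least-squares projection α = ((I - B)·(F - B)) / ‖F - B‖², clipped to [0, 1]:

```python
import numpy as np

def estimate_alpha(I, F, B, eps=1e-8):
    """Per-pixel least-squares alpha for I = alpha*F + (1 - alpha)*B.

    I, F, B: observed, foreground and background colours, arrays of shape (..., 3).
    """
    I, F, B = (np.asarray(x, dtype=float) for x in (I, F, B))
    diff = F - B
    num = np.sum((I - B) * diff, axis=-1)   # projection of I - B onto F - B
    den = np.sum(diff * diff, axis=-1) + eps  # eps guards against F == B
    return np.clip(num / den, 0.0, 1.0)
```

The estimate becomes unreliable where F and B have similar colours, since the denominator is then small; this is one reason a clean background that differs from the foreground helps.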

Synchronisation is an essential requirement for multiview 3D reconstruction of dynamic scenes. However, the use of HD cameras and large set-ups puts considerable stress on hardware and causes frame drops, which are usually detected by manually verifying very large amounts of data. This paper improves on [9] and extends it with frame-drop detection capability. To spot frame-drop events, the algorithm fits a broken line to the frame index correspondences for each camera pair, and then fuses the pairwise drop hypotheses into a consistent, absolute frame-drop estimate. The success and practical utility of the improved pipeline are demonstrated through a number of experiments, including 3D reconstruction and free-viewpoint video rendering tasks.
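The broken-line idea can be sketched for a single camera pair: if both cameras run at the same rate, matched frame indices satisfy j = i + c, and a frame drop shows up as a jump in the offset c. The sketch below assumes unit slope, correspondences sorted by i, and at most one drop; it tries every candidate breakpoint and fits a constant offset on each side by least squares. The function name and the `min_segment` guard are hypothetical, and the fusion of pairwise hypotheses into an absolute estimate is not shown.

```python
import numpy as np

def fit_broken_line(i_idx, j_idx, min_segment=5):
    """Fit j = i + c1 before a breakpoint and j = i + c2 after it.

    Returns (break_frame, c1, c2, sse), or None if there are too few samples.
    """
    i_idx = np.asarray(i_idx, dtype=float)
    r = np.asarray(j_idx, dtype=float) - i_idx  # offset of each correspondence
    best = None
    for b in range(min_segment, len(r) - min_segment + 1):
        c1, c2 = r[:b].mean(), r[b:].mean()  # least-squares constant per segment
        sse = np.sum((r[:b] - c1) ** 2) + np.sum((r[b:] - c2) ** 2)
        if best is None or sse < best[3]:
            best = (b, c1, c2, sse)
    if best is None:
        return None
    b, c1, c2, sse = best
    return int(i_idx[b]), c1, c2, sse
```

The size of the drop is then c1 - c2 frames. A fuller version would also compare the two-segment error with a single-offset fit, so that noisy correspondences are not mistaken for a drop.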

A real-time full-body motion capture system is presented which uses input from a sparse set of inertial measurement units (IMUs) along with images from two or more standard video cameras and requires no optical markers or specialized infra-red cameras. A real-time optimization-based framework is proposed which incorporates constraints from the IMUs, cameras and a prior pose model. The combination of video and IMU data allows the full 6-DOF motion to be recovered including axial rotation of limbs and drift-free global position. The approach was tested using both indoor and outdoor captured data. The results demonstrate the effectiveness of the approach for tracking a wide range of human motion in real time in unconstrained indoor/outdoor scenes.

In this paper we address the problem of reliable real-time 3D tracking of multiple objects observed in multiple wide-baseline camera views. Establishing the spatio-temporal correspondence is a problem with combinatorial complexity in the number of objects and views. In addition, vision-based tracking suffers from the ambiguities introduced by occlusion, clutter and irregular 3D motion. We present a discrete relaxation algorithm that reduces the intrinsic combinatorial complexity by pruning the decision tree based on unreliable prior information from independent 2D tracking in each view. The algorithm improves the reliability of spatio-temporal correspondence by simultaneous optimisation over multiple views in cases where 2D tracking in one or more views is ambiguous. Application to the 3D reconstruction of human movement, based on tracking of skin-coloured regions in three views, demonstrates considerable improvement in reliability and performance. Results demonstrate that the optimisation over multiple views gives correct 3D reconstruction and object labelling in the presence of incorrect 2D tracking whilst maintaining real-time performance.

This paper approaches the problem of compressing temporally consistent 3D video mesh sequences with the aim of reducing the storage cost. We present an evaluation of compression techniques which apply Principal Component Analysis to the representation of the mesh in different domain spaces, and demonstrate the applicability of mesh deformation algorithms for compression purposes. A novel layered mesh representation is introduced for compression of 3D video sequences with an underlying articulated motion, such as a person with loose clothing. Comparative evaluation on captured mesh sequences of people demonstrates that this representation achieves a significant improvement in compression compared to previous techniques. Results show a compression ratio of 8-15 for an RMS error of less than 5mm.
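The PCA baseline mentioned above can be sketched as follows, assuming a temporally consistent sequence stored as a (T, V, 3) array of vertex positions: flatten each frame, keep the top k principal components, and store only the mean shape, the basis and the per-frame coefficients. This is plain vertex-space PCA, not the paper's layered representation, and the storage count is a simple float tally that ignores quantisation and entropy coding.

```python
import numpy as np

def pca_compress(frames, k):
    """frames: (T, V, 3) vertex positions. Returns (mean, basis, coeffs)."""
    frames = np.asarray(frames, dtype=float)
    X = frames.reshape(frames.shape[0], -1)  # one row per frame, 3V columns
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    basis = Vt[:k]                    # (k, 3V) principal directions
    coeffs = (X - mean) @ basis.T     # (T, k) per-frame coefficients
    return mean, basis, coeffs

def pca_decompress(mean, basis, coeffs, n_vertices):
    X = coeffs @ basis + mean
    return X.reshape(len(coeffs), n_vertices, 3)

def rms_error(a, b):
    """Root-mean-square per-vertex Euclidean distance."""
    return float(np.sqrt(np.mean(np.sum((a - b) ** 2, axis=-1))))

def compression_ratio(T, V, k):
    original = T * V * 3
    compressed = V * 3 + k * V * 3 + T * k  # mean + basis + coefficients
    return original / compressed
```

For example, 20 frames of a 50-vertex mesh with k = 2 give a ratio of 3000 / 490 ≈ 6.1 under this tally. Vertex-space PCA treats the whole shape linearly, so articulated motion tends to need many components; that limitation is what motivates representations that separate articulated motion from surface detail.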

We introduce the concept of 4D model flow for the precomputed alignment of dynamic surface appearance across 4D video sequences of different motions reconstructed from multi-view video. Precomputed 4D model flow allows efficient parametrization of surface appearance from the captured videos, which enables real-time rendering of interpolated 4D video sequences whilst accurately reproducing visual dynamics, even when using a coarse underlying geometry. We estimate the 4D model flow using an image-based approach that is guided by available geometry proxies. We propose a novel representation in surface texture space for efficient storage and online parametric interpolation of dynamic appearance. By precomputing the appearance alignment, our 4D model flow removes the previous requirement for computationally expensive online optical flow computation for data-driven alignment of dynamic surface appearance. This leads to an efficient rendering technique that enables online interpolation between 4D videos in real time, from arbitrary viewpoints, with visual quality comparable to the state of the art.